- 12.1 - Logistic Regression

Logistic regression models a relationship between predictor variables and a categorical response variable. For example, we could use logistic regression to model the relationship between various measurements of a manufactured specimen (such as dimensions and chemical composition) to predict if a crack greater than 10 mils will occur (a binary variable: either yes or no). Logistic regression helps us estimate a probability of falling into a certain level of the categorical response given a set of predictors. We can choose from three types of logistic regression, depending on the nature of the categorical response variable:

Binary Logistic Regression :

Used when the response is binary (i.e., it has two possible outcomes). The cracking example given above would utilize binary logistic regression. Other examples of binary responses could include passing or failing a test, responding yes or no on a survey, and having high or low blood pressure.

Nominal Logistic Regression :

Used when there are three or more categories with no natural ordering to the levels. Examples of nominal responses could include departments at a business (e.g., marketing, sales, HR), type of search engine used (e.g., Google, Yahoo!, MSN), and color (black, red, blue, orange).

Ordinal Logistic Regression :

Used when there are three or more categories with a natural ordering to the levels, but the ranking of the levels do not necessarily mean the intervals between them are equal. Examples of ordinal responses could be how students rate the effectiveness of a college course (e.g., good, medium, poor), levels of flavors for hot wings, and medical condition (e.g., good, stable, serious, critical).

Particular issues with modelling a categorical response variable include nonnormal error terms, nonconstant error variance, and constraints on the response function (i.e., the response is bounded between 0 and 1). We will investigate ways of dealing with these in the binary logistic regression setting here. Nominal and ordinal logistic regression are not considered in this course.

The multiple binary logistic regression model is the following:

\[\begin{align}\label{logmod} \pi(\textbf{X})&=\frac{\exp(\beta_{0}+\beta_{1}X_{1}+\ldots+\beta_{k}X_{k})}{1+\exp(\beta_{0}+\beta_{1}X_{1}+\ldots+\beta_{k}X_{k})}\notag \\ & =\frac{\exp(\textbf{X}\beta)}{1+\exp(\textbf{X}\beta)}\\ & =\frac{1}{1+\exp(-\textbf{X}\beta)}, \end{align}\]

where here \(\pi\) denotes a probability and not the irrational number 3.14....

- \(\pi\) is the probability that an observation is in a specified category of the binary Y variable, generally called the "success probability."

- Notice that the model describes the probability of an event happening as a function of X variables. For instance, it might provide estimates of the probability that an older person has heart disease.

- The numerator \(\exp(\beta_{0}+\beta_{1}X_{1}+\ldots+\beta_{k}X_{k})\) must be positive, because it is a power of a positive value ( e ).

- The denominator of the model is (1 + numerator), so the answer will always be less than 1.

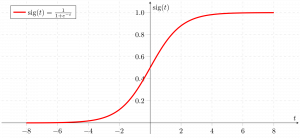

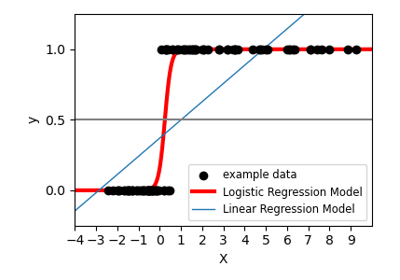

- With one X variable, the theoretical model for \(\pi\) has an elongated "S" shape (or sigmoidal shape) with asymptotes at 0 and 1, although in sample estimates we may not see this "S" shape if the range of the X variable is limited.

For a sample of size n , the likelihood for a binary logistic regression is given by:

\[\begin{align*} L(\beta;\textbf{y},\textbf{X})&=\prod_{i=1}^{n}\pi_{i}^{y_{i}}(1-\pi_{i})^{1-y_{i}}\\ & =\prod_{i=1}^{n}\biggl(\frac{\exp(\textbf{X}_{i}\beta)}{1+\exp(\textbf{X}_{i}\beta)}\biggr)^{y_{i}}\biggl(\frac{1}{1+\exp(\textbf{X}_{i}\beta)}\biggr)^{1-y_{i}}. \end{align*}\]

This yields the log likelihood:

\[\begin{align*} \ell(\beta)&=\sum_{i=1}^{n}[y_{i}\log(\pi_{i})+(1-y_{i})\log(1-\pi_{i})]\\ & =\sum_{i=1}^{n}[y_{i}\textbf{X}_{i}\beta-\log(1+\exp(\textbf{X}_{i}\beta))]. \end{align*}\]

Maximizing the likelihood (or log likelihood) has no closed-form solution, so a technique like iteratively reweighted least squares is used to find an estimate of the regression coefficients, $\hat{\beta}$.

To illustrate, consider data published on n = 27 leukemia patients. The data ( leukemia_remission.txt ) has a response variable of whether leukemia remission occurred (REMISS), which is given by a 1.

The predictor variables are cellularity of the marrow clot section (CELL), smear differential percentage of blasts (SMEAR), percentage of absolute marrow leukemia cell infiltrate (INFIL), percentage labeling index of the bone marrow leukemia cells (LI), absolute number of blasts in the peripheral blood (BLAST), and the highest temperature prior to start of treatment (TEMP).

The following output shows the estimated logistic regression equation and associated significance tests

- Select Stat > Regression > Binary Logistic Regression > Fit Binary Logistic Model.

- Select "REMISS" for the Response (the response event for remission is 1 for this data).

- Select all the predictors as Continuous predictors.

- Click Options and choose Deviance or Pearson residuals for diagnostic plots.

- Click Graphs and select "Residuals versus order."

- Click Results and change "Display of results" to "Expanded tables."

- Click Storage and select "Coefficients."

Coefficients Term Coef SE Coef 95% CI Z-Value P-Value VIF Constant 64.3 75.0 ( -82.7, 211.2) 0.86 0.391 CELL 30.8 52.1 ( -71.4, 133.0) 0.59 0.554 62.46 SMEAR 24.7 61.5 ( -95.9, 145.3) 0.40 0.688 434.42 INFIL -25.0 65.3 (-152.9, 103.0) -0.38 0.702 471.10 LI 4.36 2.66 ( -0.85, 9.57) 1.64 0.101 4.43 BLAST -0.01 2.27 ( -4.45, 4.43) -0.01 0.996 4.18 TEMP -100.2 77.8 (-252.6, 52.2) -1.29 0.198 3.01

The Wald test is the test of significance for individual regression coefficients in logistic regression (recall that we use t -tests in linear regression). For maximum likelihood estimates, the ratio

\[\begin{equation*} Z=\frac{\hat{\beta}_{i}}{\textrm{s.e.}(\hat{\beta}_{i})} \end{equation*}\]

can be used to test $H_{0}: \beta_{i}=0$. The standard normal curve is used to determine the $p$-value of the test. Furthermore, confidence intervals can be constructed as

\[\begin{equation*} \hat{\beta}_{i}\pm z_{1-\alpha/2}\textrm{s.e.}(\hat{\beta}_{i}). \end{equation*}\]

Estimates of the regression coefficients, $\hat{\beta}$, are given in the Coefficients table in the column labeled "Coef." This table also gives coefficient p -values based on Wald tests. The index of the bone marrow leukemia cells (LI) has the smallest p -value and so appears to be closest to a significant predictor of remission occurring. After looking at various subsets of the data, we find that a good model is one which only includes the labeling index as a predictor:

Coefficients Term Coef SE Coef 95% CI Z-Value P-Value VIF Constant -3.78 1.38 (-6.48, -1.08) -2.74 0.006 LI 2.90 1.19 ( 0.57, 5.22) 2.44 0.015 1.00

Regression Equation P(1) = exp(Y')/(1 + exp(Y')) Y' = -3.78 + 2.90 LI

Since we only have a single predictor in this model we can create a Binary Fitted Line Plot to visualize the sigmoidal shape of the fitted logistic regression curve:

Odds, Log Odds, and Odds Ratio

There are algebraically equivalent ways to write the logistic regression model:

The first is

\[\begin{equation}\label{logmod1} \frac{\pi}{1-\pi}=\exp(\beta_{0}+\beta_{1}X_{1}+\ldots+\beta_{k}X_{k}), \end{equation}\]

which is an equation that describes the odds of being in the current category of interest. By definition, the odds for an event is π / (1 - π ) such that P is the probability of the event. For example, if you are at the racetrack and there is a 80% chance that a certain horse will win the race, then his odds are 0.80 / (1 - 0.80) = 4, or 4:1.

The second is

\[\begin{equation}\label{logmod2} \log\biggl(\frac{\pi}{1-\pi}\biggr)=\beta_{0}+\beta_{1}X_{1}+\ldots+\beta_{k}X_{k}, \end{equation}\]

which states that the (natural) logarithm of the odds is a linear function of the X variables (and is often called the log odds ). This is also referred to as the logit transformation of the probability of success, \(\pi\).

The odds ratio (which we will write as $\theta$) between the odds for two sets of predictors (say $\textbf{X}_{(1)}$ and $\textbf{X}_{(2)}$) is given by

\[\begin{equation*} \theta=\frac{(\pi/(1-\pi))|_{\textbf{X}=\textbf{X}_{(1)}}}{(\pi/(1-\pi))|_{\textbf{X}=\textbf{X}_{(2)}}}. \end{equation*}\]

For binary logistic regression, the odds of success are:

\[\begin{equation*} \frac{\pi}{1-\pi}=\exp(\textbf{X}\beta). \end{equation*}\]

By plugging this into the formula for $\theta$ above and setting $\textbf{X}_{(1)}$ equal to $\textbf{X}_{(2)}$ except in one position (i.e., only one predictor differs by one unit), we can determine the relationship between that predictor and the response. The odds ratio can be any nonnegative number. An odds ratio of 1 serves as the baseline for comparison and indicates there is no association between the response and predictor. If the odds ratio is greater than 1, then the odds of success are higher for higher levels of a continuous predictor (or for the indicated level of a factor). In particular, the odds increase multiplicatively by $\exp(\beta_{j})$ for every one-unit increase in $\textbf{X}_{j}$. If the odds ratio is less than 1, then the odds of success are less for higher levels of a continuous predictor (or for the indicated level of a factor). Values farther from 1 represent stronger degrees of association.

For example, when there is just a single predictor, \(X\), the odds of success are:

\[\begin{equation*} \frac{\pi}{1-\pi}=\exp(\beta_0+\beta_1X). \end{equation*}\]

If we increase \(X\) by one unit, the odds ratio is

\[\begin{equation*} \theta=\frac{\exp(\beta_0+\beta_1(X+1))}{\exp(\beta_0+\beta_1X)}=\exp(\beta_1). \end{equation*}\]

To illustrate, the relevant output from the leukemia example is:

Odds Ratios for Continuous Predictors Odds Ratio 95% CI LI 18.1245 (1.7703, 185.5617)

The regression parameter estimate for LI is $2.89726$, so the odds ratio for LI is calculated as $\exp(2.89726)=18.1245$. The 95% confidence interval is calculated as $\exp(2.89726\pm z_{0.975}*1.19)$, where $z_{0.975}=1.960$ is the $97.5^{\textrm{th}}$ percentile from the standard normal distribution. The interpretation of the odds ratio is that for every increase of 1 unit in LI, the estimated odds of leukemia remission are multiplied by 18.1245. However, since the LI appears to fall between 0 and 2, it may make more sense to say that for every 0.1 unit increase in L1, the estimated odds of remission are multiplied by $\exp(2.89726\times 0.1)=1.336$. Then

- At LI=0.9, the estimated odds of leukemia remission is $\exp\{-3.77714+2.89726*0.9\}=0.310$.

- At LI=0.8, the estimated odds of leukemia remission is $\exp\{-3.77714+2.89726*0.8\}=0.232$.

- The resulting odds ratio is $\frac{0.310}{0.232}=1.336$, which is the ratio of the odds of remission when LI=0.9 compared to the odds when L1=0.8.

Notice that $1.336\times 0.232=0.310$, which demonstrates the multiplicative effect by $\exp(0.1\hat{\beta_{1}})$ on the odds.

Likelihood Ratio (or Deviance) Test

The likelihood ratio test is used to test the null hypothesis that any subset of the $\beta$'s is equal to 0. The number of $\beta$'s in the full model is k +1 , while the number of $\beta$'s in the reduced model is r +1 . (Remember the reduced model is the model that results when the $\beta$'s in the null hypothesis are set to 0.) Thus, the number of $\beta$'s being tested in the null hypothesis is \((k+1)-(r+1)=k-r\). Then the likelihood ratio test statistic is given by:

\[\begin{equation*} \Lambda^{*}=-2(\ell(\hat{\beta}^{(0)})-\ell(\hat{\beta})), \end{equation*}\]

where $\ell(\hat{\beta})$ is the log likelihood of the fitted (full) model and $\ell(\hat{\beta}^{(0)})$ is the log likelihood of the (reduced) model specified by the null hypothesis evaluated at the maximum likelihood estimate of that reduced model. This test statistic has a $\chi^{2}$ distribution with \(k-r\) degrees of freedom. Statistical software often presents results for this test in terms of "deviance," which is defined as \(-2\) times log-likelihood. The notation used for the test statistic is typically $G^2$ = deviance (reduced) – deviance (full).

This test procedure is analagous to the general linear F test procedure for multiple linear regression. However, note that when testing a single coefficient, the Wald test and likelihood ratio test will not in general give identical results.

To illustrate, the relevant software output from the leukemia example is:

Deviance Table Source DF Adj Dev Adj Mean Chi-Square P-Value Regression 1 8.299 8.299 8.30 0.004 LI 1 8.299 8.299 8.30 0.004 Error 25 26.073 1.043 Total 26 34.372

Since there is only a single predictor for this example, this table simply provides information on the likelihood ratio test for LI ( p -value of 0.004), which is similar but not identical to the earlier Wald test result ( p -value of 0.015). The Deviance Table includes the following:

- The null (reduced) model in this case has no predictors, so the fitted probabilities are simply the sample proportion of successes, \(9/27=0.333333\). The log-likelihood for the null model is \(\ell(\hat{\beta}^{(0)})=-17.1859\), so the deviance for the null model is \(-2\times-17.1859=34.372\), which is shown in the "Total" row in the Deviance Table.

- The log-likelihood for the fitted (full) model is \(\ell(\hat{\beta})=-13.0365\), so the deviance for the fitted model is \(-2\times-13.0365=26.073\), which is shown in the "Error" row in the Deviance Table.

- The likelihood ratio test statistic is therefore \(\Lambda^{*}=-2(-17.1859-(-13.0365))=8.299\), which is the same as \(G^2=34.372-26.073=8.299\).

- The p -value comes from a $\chi^{2}$ distribution with \(2-1=1\) degrees of freedom.

When using the likelihood ratio (or deviance) test for more than one regression coefficient, we can first fit the "full" model to find deviance (full), which is shown in the "Error" row in the resulting full model Deviance Table. Then fit the "reduced" model (corresponding to the model that results if the null hypothesis is true) to find deviance (reduced), which is shown in the "Error" row in the resulting reduced model Deviance Table. For example, the relevant Deviance Tables for the Disease Outbreak example on pages 581-582 of Applied Linear Regression Models (4th ed) by Kutner et al are:

Full model:

Source DF Adj Dev Adj Mean Chi-Square P-Value Regression 9 28.322 3.14686 28.32 0.001 Error 88 93.996 1.06813 Total 97 122.318

Reduced model:

Source DF Adj Dev Adj Mean Chi-Square P-Value Regression 4 21.263 5.3159 21.26 0.000 Error 93 101.054 1.0866 Total 97 122.318

Here the full model includes four single-factor predictor terms and five two-factor interaction terms, while the reduced model excludes the interaction terms. The test statistic for testing the interaction terms is \(G^2 = 101.054-93.996 = 7.058\), which is compared to a chi-square distribution with \(10-5=5\) degrees of freedom to find the p -value = 0.216 > 0.05 (meaning the interaction terms are not significant at a 5% significance level).

Alternatively, select the corresponding predictor terms last in the full model and request the software to output Sequential (Type I) Deviances. Then add the corresponding Sequential Deviances in the resulting Deviance Table to calculate \(G^2\). For example, the relevant Deviance Table for the Disease Outbreak example is:

Source DF Seq Dev Seq Mean Chi-Square P-Value Regression 9 28.322 3.1469 28.32 0.001 Age 1 7.405 7.4050 7.40 0.007 Middle 1 1.804 1.8040 1.80 0.179 Lower 1 1.606 1.6064 1.61 0.205 Sector 1 10.448 10.4481 10.45 0.001 Age*Middle 1 4.570 4.5697 4.57 0.033 Age*Lower 1 1.015 1.0152 1.02 0.314 Age*Sector 1 1.120 1.1202 1.12 0.290 Middle*Sector 1 0.000 0.0001 0.00 0.993 Lower*Sector 1 0.353 0.3531 0.35 0.552 Error 88 93.996 1.0681 Total 97 122.318

The test statistic for testing the interaction terms is \(G^2 = 4.570+1.015+1.120+0.000+0.353 = 7.058\), the same as in the first calculation.

Goodness-of-Fit Tests

Overall performance of the fitted model can be measured by several different goodness-of-fit tests. Two tests that require replicated data (multiple observations with the same values for all the predictors) are the Pearson chi-square goodness-of-fit test and the deviance goodness-of-fit test (analagous to the multiple linear regression lack-of-fit F-test). Both of these tests have statistics that are approximately chi-square distributed with c - k - 1 degrees of freedom, where c is the number of distinct combinations of the predictor variables. When a test is rejected, there is a statistically significant lack of fit. Otherwise, there is no evidence of lack of fit.

By contrast, the Hosmer-Lemeshow goodness-of-fit test is useful for unreplicated datasets or for datasets that contain just a few replicated observations. For this test the observations are grouped based on their estimated probabilities. The resulting test statistic is approximately chi-square distributed with c - 2 degrees of freedom, where c is the number of groups (generally chosen to be between 5 and 10, depending on the sample size) .

Goodness-of-Fit Tests Test DF Chi-Square P-Value Deviance 25 26.07 0.404 Pearson 25 23.93 0.523 Hosmer-Lemeshow 7 6.87 0.442

Since there is no replicated data for this example, the deviance and Pearson goodness-of-fit tests are invalid, so the first two rows of this table should be ignored. However, the Hosmer-Lemeshow test does not require replicated data so we can interpret its high p -value as indicating no evidence of lack-of-fit.

The calculation of R 2 used in linear regression does not extend directly to logistic regression. One version of R 2 used in logistic regression is defined as

\[\begin{equation*} R^{2}=\frac{\ell(\hat{\beta_{0}})-\ell(\hat{\beta})}{\ell(\hat{\beta_{0}})-\ell_{S}(\beta)}, \end{equation*}\]

where $\ell(\hat{\beta_{0}})$ is the log likelihood of the model when only the intercept is included and $\ell_{S}(\beta)$ is the log likelihood of the saturated model (i.e., where a model is fit perfectly to the data). This R 2 does go from 0 to 1 with 1 being a perfect fit. With unreplicated data, $\ell_{S}(\beta)=0$, so the formula simplifies to:

\[\begin{equation*} R^{2}=\frac{\ell(\hat{\beta_{0}})-\ell(\hat{\beta})}{\ell(\hat{\beta_{0}})}=1-\frac{\ell(\hat{\beta})}{\ell(\hat{\beta_{0}})}. \end{equation*}\]

Model Summary Deviance Deviance R-Sq R-Sq(adj) AIC 24.14% 21.23% 30.07

Recall from above that \(\ell(\hat{\beta})=-13.0365\) and \(\ell(\hat{\beta}^{(0)})=-17.1859\), so:

\[\begin{equation*} R^{2}=1-\frac{-13.0365}{-17.1859}=0.2414. \end{equation*}\]

Note that we can obtain the same result by simply using deviances instead of log-likelihoods since the $-2$ factor cancels out:

\[\begin{equation*} R^{2}=1-\frac{26.073}{34.372}=0.2414. \end{equation*}\]

Raw Residual

The raw residual is the difference between the actual response and the estimated probability from the model. The formula for the raw residual is

\[\begin{equation*} r_{i}=y_{i}-\hat{\pi}_{i}. \end{equation*}\]

Pearson Residual

The Pearson residual corrects for the unequal variance in the raw residuals by dividing by the standard deviation. The formula for the Pearson residuals is

\[\begin{equation*} p_{i}=\frac{r_{i}}{\sqrt{\hat{\pi}_{i}(1-\hat{\pi}_{i})}}. \end{equation*}\]

Deviance Residuals

Deviance residuals are also popular because the sum of squares of these residuals is the deviance statistic. The formula for the deviance residual is

\[\begin{equation*} d_{i}=\pm\sqrt{2\biggl[y_{i}\log\biggl(\frac{y_{i}}{\hat{\pi}_{i}}\biggr)+(1-y_{i})\log\biggl(\frac{1-y_{i}}{1-\hat{\pi}_{i}}\biggr)\biggr]}. \end{equation*}\]

Here are the plots of the Pearson residuals and deviance residuals for the leukemia example. There are no alarming patterns in these plots to suggest a major problem with the model.

The hat matrix serves a similar purpose as in the case of linear regression – to measure the influence of each observation on the overall fit of the model – but the interpretation is not as clear due to its more complicated form. The hat values (leverages) are given by

\[\begin{equation*} h_{i,i}=\hat{\pi}_{i}(1-\hat{\pi}_{i})\textbf{x}_{i}^{\textrm{T}}(\textbf{X}^{\textrm{T}}\textbf{W}\textbf{X})\textbf{x}_{i}, \end{equation*}\]

where W is an $n\times n$ diagonal matrix with the values of $\hat{\pi}_{i}(1-\hat{\pi}_{i})$ for $i=1 ,\ldots,n$ on the diagonal. As before, we should investigate any observations with $h_{i,i}>3p/n$ or, failing this, any observations with $h_{i,i}>2p/n$ and very isolated .

Studentized Residuals

We can also report Studentized versions of some of the earlier residuals. The Studentized Pearson residuals are given by

\[\begin{equation*} sp_{i}=\frac{p_{i}}{\sqrt{1-h_{i,i}}} \end{equation*}\]

and the Studentized deviance residuals are given by

\[\begin{equation*} sd_{i}=\frac{d_{i}}{\sqrt{1-h_{i, i}}}. \end{equation*}\]

Cook's Distances

An extension of Cook's distance for logistic regression measures the overall change in fitted logits due to deleting the $i^{\textrm{th}}$ observation. It is defined by:

\[\begin{equation*} \textrm{C}_{i}=\frac{p_{i}^{2}h _{i,i}}{(k+1)(1-h_{i,i})^{2}}. \end{equation*}\]

Fits and Diagnostics for Unusual Observations Observed Obs Probability Fit SE Fit 95% CI Resid Std Resid Del Resid HI 8 0.000 0.849 0.139 (0.403, 0.979) -1.945 -2.11 -2.19 0.149840 Obs Cook’s D DFITS 8 0.58 -1.08011 R R Large residual

The residuals in this output are deviance residuals, so observation 8 has a deviance residual of \(-1.945\), a studentized deviance residual of \(-2.19\), a leverage (h) of \(0.149840\), and a Cook's distance (C) of 0.58.

Start Here!

- Welcome to STAT 462!

- Search Course Materials

- Lesson 1: Statistical Inference Foundations

- Lesson 2: Simple Linear Regression (SLR) Model

- Lesson 3: SLR Evaluation

- Lesson 4: SLR Assumptions, Estimation & Prediction

- Lesson 5: Multiple Linear Regression (MLR) Model & Evaluation

- Lesson 6: MLR Assumptions, Estimation & Prediction

- Lesson 7: Transformations & Interactions

- Lesson 8: Categorical Predictors

- Lesson 9: Influential Points

- Lesson 10: Regression Pitfalls

- Lesson 11: Model Building

- 12.2 - Further Logistic Regression Examples

- 12.3 - Poisson Regression

- 12.4 - Generalized Linear Models

- 12.5 - Nonlinear Regression

- 12.6 - Exponential Regression Example

- 12.7 - Population Growth Example

- Website for Applied Regression Modeling, 2nd edition

- Notation Used in this Course

- R Software Help

- Minitab Software Help

Copyright © 2018 The Pennsylvania State University Privacy and Legal Statements Contact the Department of Statistics Online Programs

Logistic Regression

28 Aug 2013

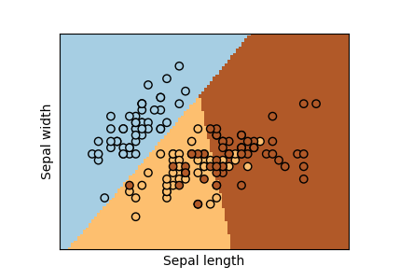

Previously we learned how to predict continuous-valued quantities (e.g., housing prices) as a linear function of input values (e.g., the size of the house). Sometimes we will instead wish to predict a discrete variable such as predicting whether a grid of pixel intensities represents a “0” digit or a “1” digit. This is a classification problem. Logistic regression is a simple classification algorithm for learning to make such decisions.

In linear regression we tried to predict the value of y^{(i)} for the i ‘th example x^{(i)} using a linear function y = h_\theta(x) = \theta^\top x. . This is clearly not a great solution for predicting binary-valued labels \left(y^{(i)} \in \{0,1\}\right) . In logistic regression we use a different hypothesis class to try to predict the probability that a given example belongs to the “1” class versus the probability that it belongs to the “0” class. Specifically, we will try to learn a function of the form:

The function \sigma(z) \equiv \frac{1}{1 + \exp(-z)} is often called the “sigmoid” or “logistic” function – it is an S-shaped function that “squashes” the value of \theta^\top x into the range [0, 1] so that we may interpret h_\theta(x) as a probability. Our goal is to search for a value of \theta so that the probability P(y=1|x) = h_\theta(x) is large when x belongs to the “1” class and small when x belongs to the “0” class (so that P(y=0|x) is large). For a set of training examples with binary labels \{ (x^{(i)}, y^{(i)}) : i=1,\ldots,m\} the following cost function measures how well a given h_\theta does this:

Note that only one of the two terms in the summation is non-zero for each training example (depending on whether the label y^{(i)} is 0 or 1). When y^{(i)} = 1 minimizing the cost function means we need to make h_\theta(x^{(i)}) large, and when y^{(i)} = 0 we want to make 1 - h_\theta large as explained above. For a full explanation of logistic regression and how this cost function is derived, see the CS229 Notes on supervised learning.

We now have a cost function that measures how well a given hypothesis h_\theta fits our training data. We can learn to classify our training data by minimizing J(\theta) to find the best choice of \theta . Once we have done so, we can classify a new test point as “1” or “0” by checking which of these two class labels is most probable: if P(y=1|x) > P(y=0|x) then we label the example as a “1”, and “0” otherwise. This is the same as checking whether h_\theta(x) > 0.5 .

To minimize J(\theta) we can use the same tools as for linear regression. We need to provide a function that computes J(\theta) and \nabla_\theta J(\theta) for any requested choice of \theta . The derivative of J(\theta) as given above with respect to \theta_j is:

Written in its vector form, the entire gradient can be expressed as:

This is essentially the same as the gradient for linear regression except that now h_\theta(x) = \sigma(\theta^\top x) .

Exercise 1B

Starter code for this exercise is included in the Starter Code GitHub Repo in the ex1/ directory.

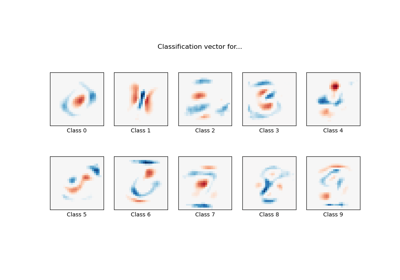

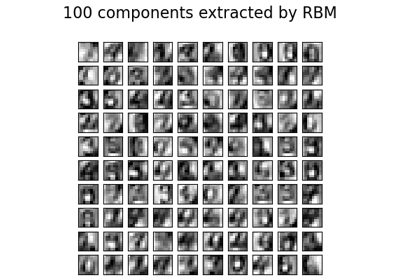

In this exercise you will implement the objective function and gradient computations for logistic regression and use your code to learn to classify images of digits from the MNIST dataset as either “0” or “1”. Some examples of these digits are shown below:

Each of the digits is is represented by a 28x28 grid of pixel intensities, which we will reformat as a vector x^{(i)} with 28*28 = 784 elements. The label is binary, so y^{(i)} \in \{0,1\} .

You will find starter code for this exercise in the ex1/ex1b_logreg.m file. The starter code file performs the following tasks for you:

Calls ex1_load_mnist.m to load the MNIST training and testing data. In addition to loading the pixel values into a matrix X (so that that j’th pixel of the i’th example is X_{ji} = x^{(i)}_j ) and the labels into a row-vector y , it will also perform some simple normalizations of the pixel intensities so that they tend to have zero mean and unit variance. Even though the MNIST dataset contains 10 different digits (0-9), in this exercise we will only load the 0 and 1 digits — the ex1_load_mnist function will do this for you.

The code will append a row of 1’s so that \theta_0 will act as an intercept term.

The code calls minFunc with the logistic_regression.m file as objective function. Your job will be to fill in logistic_regression.m to return the objective function value and its gradient.

After minFunc completes, the classification accuracy on the training set and test set will be printed out.

As for the linear regression exercise, you will need to implement logistic_regression.m to loop over all of the training examples x^{(i)} and compute the objective J(\theta; X,y) . Store the resulting objective value into the variable f . You must also compute the gradient \nabla_\theta J(\theta; X,y) and store it into the variable g . Once you have completed these tasks, you will be able to run the ex1b_logreg.m script to train the classifier and test it.

If your code is functioning correctly, you should find that your classifier is able to achieve 100% accuracy on both the training and testing sets! It turns out that this is a relatively easy classification problem because 0 and 1 digits tend to look very different. In future exercises it will be much more difficult to get perfect results like this.

Applied Data Science Meeting, July 4-6, 2023, Shanghai, China . Register for the workshops: (1) Deep Learning Using R, (2) Introduction to Social Network Analysis, (3) From Latent Class Model to Latent Transition Model Using Mplus, (4) Longitudinal Data Analysis, and (5) Practical Mediation Analysis. Click here for more information .

- Example Datasets

- Basics of R

- Graphs in R

- Hypothesis testing

- Confidence interval

- Simple Regression

- Multiple Regression

- Logistic regression

- Moderation analysis

- Mediation analysis

- Path analysis

- Factor analysis

- Multilevel regression

- Longitudinal data analysis

- Power analysis

Logistic Regression

Logistic regression is widely used in social and behavioral research in analyzing the binary (dichotomous) outcome data. In logistic regression, the outcome can only take two values 0 and 1. Some examples that can utilize the logistic regression are given in the following.

- The election of Democratic or Republican president can depend on the factors such as the economic status, the amount of money spent on the campaign, as well as gender and income of the voters.

- Whether an assistant professor can be tenured may be predicted from the number of publications and teaching performance in the first three years.

- Whether or not someone has a heart attack may be related to age, gender and living habits.

- Whether a student is admitted may be predicted by her/his high school GPA, SAT score, and quality of recommendation letters.

We use an example to illustrate how to conduct logistic regression in R.

In this example, the aim is to predict whether a woman is in compliance with mammography screening recommendations from four predictors, one reflecting medical input and three reflecting a woman's psychological status with regarding to screening.

- Outcome y: whether a woman is in compliance with mammography screening recommendations (1: in compliance; 0: not in compliance)

- x1: whether she has received a recommendation for screening from a physician;

- x2: her knowledge about breast cancer and mammography screening;

- x3: her perception of benefit of such a screening;

- x4: her perception of the barriers to being screened.

Basic ideas

With a binary outcome, the linear regression does not work any more. Simply speaking, the predictors can take any value but the outcome cannot. Therefore, using a linear regression cannot predict the outcome well. In order to deal with the problem, we model the probability to observe an outcome 1 instead, that is $p = \Pr(y=1)$. Using the mammography example, that'll be the probability for a woman to be in compliance with the screening recommendation.

Even directly modeling the probability would work better than predicting the 1/0 outcome, intuitively. A potential problem is that the probability is bound between 0 and 1 but the predicted values are generally not. To further deal with the problem, we conduct a transformation using

\[ \eta = \log\frac{p}{1-p}.\]

After transformation, $\eta$ can take any value from $-\infty$ when $p=0$ to $\infty$ when $p=1$. Such a transformation is called logit transformation, denoted by $\text{logit}(p)$. Note that $p_{i}/(1-p_{i})$ is called odds, which is simply the ratio of the probability for the two possible outcomes. For example, if for one woman, the probability that she is in compliance is 0.8, then the odds is 0.8/(1-0.2)=4. Clearly, for equal probability of the outcome, the odds=1. If odds>1, there is a probability higher than 0.5 to observe the outcome 1. With the transformation, the $\eta$ can be directly modeled.

Therefore, the logistic regression is

\[ \mbox{logit}(p_{i})=\log(\frac{p_{i}}{1-p_{i}})=\eta_i=\beta_{0}+\beta_{1}x_{1i}+\ldots+\beta_{k}x_{ki} \]

where $p_i = \Pr(y_i = 1)$. Different from the regular linear regression, no residual is used in the model.

Why is this?

For a variable $y$ with two and only two outcome values, it is often assumed it follows a Bernoulli or binomial distribution with the probability $p$ for the outcome 1 and probability $1-p$ for 0. The density function is

\[ p^y (1-p)^{1-y}. \]

Note that when $y=1$, $p^y (1-p)^{1-y} = p$ exactly.

Furthermore, we assume there is a continuous variable $y^*$ underlying the observed binary variable. If the continuous variable takes a value larger than certain threshold, we would observe 1, otherwise 0. For logistic regression, we assume the continuous variable has a logistic distribution with the density function:

\[ \frac{e^{-y^*}}{1+e^{-y^*}} .\]

The probability for observing 1 is therefore can be directly calculated using the logistic distribution as:

\[ p = \frac{1}{1 + e^{-y^*}},\]

which transforms to

\[ \log\frac{p}{1-p} = y^*.\]

For $y^*$, since it is a continuous variable, it can be predicted as in a regular regression model.

Fitting a logistic regression model in R

In R, the model can be estimated using the glm() function. Logistic regression is one example of the generalized linear model (glm). Below gives the analysis of the mammography data.

- glm uses the model formula same as the linear regression model.

- family = tells the distribution of the outcome variable. For binary data, the binomial distribution is used.

- link = tell the transformation method. Here, the logit transformation is used.

- The output includes the regression coefficients and their z-statistics and p-values.

- The dispersion parameter is related to the variance of the response variable.

Interpret the results

We first focus on how to interpret the parameter estimates from the analysis. For the intercept, when all the predictors take the value 0, we have

\[ \beta_0 = \log(\frac{p}{1-p}), \]

which is the log odds that the observed outcome is 1.

We now look at the coefficient for each predictor. For the mammography example, let's assume $x_2$, $x_3$, and $x_4$ are the same and look at $x_1$ only. If a woman has received a recommendation ($x_1=1$), then the odds is

\[ \log(\frac{p}{1-p})|(x_1=1)=\beta_{0}+\beta_{1}+\beta_{2}x_{2}+\beta_{3}x_{3}+\beta_{4}x_{4}.\]

If a woman has not received a recommendation ($x_1=0$), then the odds is

\[\log(\frac{p}{1-p})|(x_1=0)=\beta_{0}+\beta_{2}x_{2}+\beta_{3}x_{3}+\beta_{4}x_{4}.\]

The difference is

\[\log(\frac{p}{1-p})|(x_1=1)-\log(\frac{p}{1-p})|(x_1=0)=\beta_{1}.\]

Therefore, the logistic regression coefficient for a predictor is the difference in the log odds when the predictor changes 1 unit given other predictors unchanged.

This above equation is equivalent to

\[\log\left(\frac{\frac{p(x_1=1)}{1-p(x_1=1)}}{\frac{p(x_1=0)}{1-p(x_1=0)}}\right)=\beta_{1}.\]

More descriptively, we have

\[\log\left(\frac{\mbox{ODDS(received recommendation)}}{\mbox{ODDS(not received recommendation)}}\right)=\beta_{1}.\]

Therefore, the regression coefficients is the log odds ratio. By a simple transformation, we have

\[\frac{\mbox{ODDS(received recommendation)}}{\mbox{ODDS(not received recommendation)}}=\exp(\beta_{1})\]

\[\mbox{ODDS(received recommendation)} = \exp(\beta_{1})*\mbox{ODDS(not received recommendation)}.\]

Therefore, the exponential of a regression coefficient is the odds ratio. For the example, $exp(\beta_{1})$=exp(1.7731)=5.9. Thus, the odds in compliance to screening for those who received recommendation is about 5.9 times of those who did not receive recommendation.

For continuous predictors, the regression coefficients can also be interpreted the same way. For example, we may say that if high school GPA increase one unit, the odds a student to be admitted can be increased to 6 times given other variables the same.

Although the output does not directly show odds ratio, they can be calculated easily in R as shown below.

By using odds ratios, we can intercept the parameters in the following.

- For x1, if a woman receives a screening recommendation, the odds for her to be in compliance with screening is about 5.9 times of the odds of a woman who does not receive a recommendation given x2, x3, x4 the same. Alternatively (may be more intuitive), if a woman receives a screening recommendation, the odds for her to be in compliance with screening will increase 4.9 times (5.889 – 1 = 4.889 =4.9), given other variables the same.

- For x2, if a woman has one unit more knowledge on breast cancer and mammography screening, the odds for her to be in compliance with screening decreases 58.1% (.419-1=-58.1%, negative number means decrease), keeping other variables constant.

- For x3, if a woman's perception about the benefit increases one unit, the odds for her to be in compliance with screening increases 81% (1.81-1=81%, positive number means increase), keeping other variables constant.

- For x4, if a woman's perception about the barriers increases one unit, the odds for her to be in compliance with screening decreases 14.2% (.858-1=-14.2%, negative number means decrease), keeping other variables constant.

Statistical inference for logistic regression

Statistical inference for logistic regression is very similar to statistical inference for simple linear regression. We can (1) conduct significance testing for each parameter, (2) test the overall model, and (3) test the overall model.

Test a single coefficient (z-test and confidence interval)

For each regression coefficient of the predictors, we can use a z-test (note not the t-test). In the output, we have z-values and corresponding p-values. For x1 and x3, their coefficients are significant at the alpha level 0.05. But for x2 and x4, they are not. Note that some software outputs Wald statistic for testing significance. Wald statistic is the square of the z-statistic and thus Wald test gives the same conclusion as the z-test.

We can also conduct the hypothesis testing by constructing confidence intervals. With the model, the function confint() can be used to obtain the confidence interval. Since one is often interested in odds ratio, its confidence interval can also be obtained.

Note that if the CI for odds ratio includes 1, it means nonsignificance. If it does not include 1, the coefficient is significant. This is because for the original coefficient, we compare the CI with 0. For odds ratio, exp(0)=1.

If we were reporting the results in terms of the odds and its CI, we could say, “The odds of in compliance to screening increases by a factor of 5.9 if receiving screening recommendation (z=3.66, P = 0.0002; 95% CI = 2.38 to 16.23) given everything else the same.”

Test the overall model

For the linear regression, we evaluate the overall model fit by looking at the variance explained by all the predictors. For the logistic regression, we cannot calculate a variance. However, we can define and evaluate the deviance instead. For a model without any predictor, we can calculate a null deviance, which is similar to variance for the normal outcome variable. After including the predictors, we have the residual deviance. The difference between the null deviance and the residual deviance tells how much the predictors help predict the outcome. If the difference is significant, then overall, the predictors are significant statistically.

The difference or the decease in deviance after including the predictors follows a chi-square ($\chi^{2}$) distribution. The chi-square ($\chi^{2}$) distribution is a widely used distribution in statistical inference. It has a close relationship to F distribution. For example, the ratio of two independent chi-square distributions is a F distribution. In addition, a chi-square distribution is the limiting distribution of an F distribution as the denominator degrees of freedom goes to infinity.

There are two ways to conduct the test. From the output, we can find the Null and Residual deviances and the corresponding degrees of freedom. Then we calculate the difference. For the mammography example, we first get the difference between the Null deviance and the Residual deviance, 203.32-155.48= 47.84. Then, we find the difference in the degrees of freedom 163-159=4. Then, the p-value can be calculated based on a chi-square distribution with the degree of freedom 4. Because the p-value is smaller than 0.05, the overall model is significant.

The test can be conducted simply in another way. We first fit a model without any predictor and another model with all the predictors. Then, we can use anova() to get the difference in deviance and the chi-square test result.

Test a subset of predictors

We can also test the significance of a subset of predictors. For example, whether x3 and x4 are significant above and beyond x1 and x2. This can also be done using the chi-square test based on the difference. In this case, we can compare a model with all predictors and a model without x3 and x4 to see if the change in the deviance is significant. In this example, the p-value is 0.002, indicating the change is signficant. Therefore, x3 and x4 are statistically significant above and beyond x1 and x2

To cite the book, use: Zhang, Z. & Wang, L. (2017-2022). Advanced statistics using R . Granger, IN: ISDSA Press. https://doi.org/10.35566/advstats. ISBN: 978-1-946728-01-2. To take the full advantage of the book such as running analysis within your web browser, please subscribe .

- Data Science

- Data Analysis

- Data Visualization

- Machine Learning

- Deep Learning

- Computer Vision

- Artificial Intelligence

- AI ML DS Interview Series

- AI ML DS Projects series

- Data Engineering

- Web Scrapping

Logistic Regression in Machine Learning

Logistic regression is a supervised machine learning algorithm used for classification tasks where the goal is to predict the probability that an instance belongs to a given class or not. Logistic regression is a statistical algorithm which analyze the relationship between two data factors. The article explores the fundamentals of logistic regression, it’s types and implementations.

Table of Content

What is Logistic Regression?

Logistic function – sigmoid function, types of logistic regression, assumptions of logistic regression, how does logistic regression work, code implementation for logistic regression, precision-recall tradeoff in logistic regression threshold setting, how to evaluate logistic regression model, differences between linear and logistic regression.

Logistic regression is used for binary classification where we use sigmoid function , that takes input as independent variables and produces a probability value between 0 and 1.

For example, we have two classes Class 0 and Class 1 if the value of the logistic function for an input is greater than 0.5 (threshold value) then it belongs to Class 1 otherwise it belongs to Class 0. It’s referred to as regression because it is the extension of linear regression but is mainly used for classification problems.

Key Points:

- Logistic regression predicts the output of a categorical dependent variable. Therefore, the outcome must be a categorical or discrete value.

- It can be either Yes or No, 0 or 1, true or False, etc. but instead of giving the exact value as 0 and 1, it gives the probabilistic values which lie between 0 and 1.

- In Logistic regression, instead of fitting a regression line, we fit an “S” shaped logistic function, which predicts two maximum values (0 or 1).

- The sigmoid function is a mathematical function used to map the predicted values to probabilities.

- It maps any real value into another value within a range of 0 and 1. The value of the logistic regression must be between 0 and 1, which cannot go beyond this limit, so it forms a curve like the “S” form.

- The S-form curve is called the Sigmoid function or the logistic function.

- In logistic regression, we use the concept of the threshold value, which defines the probability of either 0 or 1. Such as values above the threshold value tends to 1, and a value below the threshold values tends to 0.

On the basis of the categories, Logistic Regression can be classified into three types:

- Binomial: In binomial Logistic regression, there can be only two possible types of the dependent variables, such as 0 or 1, Pass or Fail, etc.

- Multinomial: In multinomial Logistic regression, there can be 3 or more possible unordered types of the dependent variable, such as “cat”, “dogs”, or “sheep”

- Ordinal: In ordinal Logistic regression, there can be 3 or more possible ordered types of dependent variables, such as “low”, “Medium”, or “High”.

We will explore the assumptions of logistic regression as understanding these assumptions is important to ensure that we are using appropriate application of the model. The assumption include:

- Independent observations: Each observation is independent of the other. meaning there is no correlation between any input variables.

- Binary dependent variables: It takes the assumption that the dependent variable must be binary or dichotomous, meaning it can take only two values. For more than two categories SoftMax functions are used.

- Linearity relationship between independent variables and log odds: The relationship between the independent variables and the log odds of the dependent variable should be linear.

- No outliers: There should be no outliers in the dataset.

- Large sample size: The sample size is sufficiently large

Terminologies involved in Logistic Regression

Here are some common terms involved in logistic regression:

- Independent variables: The input characteristics or predictor factors applied to the dependent variable’s predictions.

- Dependent variable: The target variable in a logistic regression model, which we are trying to predict.

- Logistic function: The formula used to represent how the independent and dependent variables relate to one another. The logistic function transforms the input variables into a probability value between 0 and 1, which represents the likelihood of the dependent variable being 1 or 0.

- Odds: It is the ratio of something occurring to something not occurring. it is different from probability as the probability is the ratio of something occurring to everything that could possibly occur.

- Log-odds: The log-odds, also known as the logit function, is the natural logarithm of the odds. In logistic regression, the log odds of the dependent variable are modeled as a linear combination of the independent variables and the intercept.

- Coefficient: The logistic regression model’s estimated parameters, show how the independent and dependent variables relate to one another.

- Intercept: A constant term in the logistic regression model, which represents the log odds when all independent variables are equal to zero.

- Maximum likelihood estimation : The method used to estimate the coefficients of the logistic regression model, which maximizes the likelihood of observing the data given the model.

The logistic regression model transforms the linear regression function continuous value output into categorical value output using a sigmoid function, which maps any real-valued set of independent variables input into a value between 0 and 1. This function is known as the logistic function.

Let the independent input features be:

[Tex]X = \begin{bmatrix} x_{11} & … & x_{1m}\\ x_{21} & … & x_{2m} \\ \vdots & \ddots & \vdots \\ x_{n1} & … & x_{nm} \end{bmatrix}[/Tex]

and the dependent variable is Y having only binary value i.e. 0 or 1.

[Tex]Y = \begin{cases} 0 & \text{ if } Class\;1 \\ 1 & \text{ if } Class\;2 \end{cases} [/Tex]

then, apply the multi-linear function to the input variables X.

[Tex]z = \left(\sum_{i=1}^{n} w_{i}x_{i}\right) + b [/Tex]

Here [Tex]x_i [/Tex] is the ith observation of X, [Tex]w_i = [w_1, w_2, w_3, \cdots,w_m] [/Tex] is the weights or Coefficient, and b is the bias term also known as intercept. simply this can be represented as the dot product of weight and bias.

[Tex]z = w\cdot X +b [/Tex]

whatever we discussed above is the linear regression .

Sigmoid Function

Now we use the sigmoid function where the input will be z and we find the probability between 0 and 1. i.e. predicted y.

[Tex]\sigma(z) = \frac{1}{1+e^{-z}} [/Tex]

Sigmoid function

As shown above, the figure sigmoid function converts the continuous variable data into the probability i.e. between 0 and 1.

- [Tex]\sigma(z) [/Tex] tends towards 1 as [Tex]z\rightarrow\infty [/Tex]

- [Tex]\sigma(z) [/Tex] tends towards 0 as [Tex]z\rightarrow-\infty [/Tex]

- [Tex]\sigma(z) [/Tex] is always bounded between 0 and 1

where the probability of being a class can be measured as:

[Tex]P(y=1) = \sigma(z) \\ P(y=0) = 1-\sigma(z) [/Tex]

Logistic Regression Equation

The odd is the ratio of something occurring to something not occurring. it is different from probability as the probability is the ratio of something occurring to everything that could possibly occur. so odd will be:

[Tex]\frac{p(x)}{1-p(x)} = e^z[/Tex]

Applying natural log on odd. then log odd will be:

[Tex]\begin{aligned} \log \left[\frac{p(x)}{1-p(x)} \right] &= z \\ \log \left[\frac{p(x)}{1-p(x)} \right] &= w\cdot X +b \\ \frac{p(x)}{1-p(x)}&= e^{w\cdot X +b} \;\;\cdots\text{Exponentiate both sides} \\ p(x) &=e^{w\cdot X +b}\cdot (1-p(x)) \\p(x) &=e^{w\cdot X +b}-e^{w\cdot X +b}\cdot p(x)) \\p(x)+e^{w\cdot X +b}\cdot p(x))&=e^{w\cdot X +b} \\p(x)(1+e^{w\cdot X +b}) &=e^{w\cdot X +b} \\p(x)&= \frac{e^{w\cdot X +b}}{1+e^{w\cdot X +b}} \end{aligned}[/Tex]

then the final logistic regression equation will be:

[Tex]p(X;b,w) = \frac{e^{w\cdot X +b}}{1+e^{w\cdot X +b}} = \frac{1}{1+e^{-w\cdot X +b}}[/Tex]

Likelihood Function for Logistic Regression

The predicted probabilities will be:

- for y=1 The predicted probabilities will be: p(X;b,w) = p(x)

- for y = 0 The predicted probabilities will be: 1-p(X;b,w) = 1-p(x)

[Tex]L(b,w) = \prod_{i=1}^{n}p(x_i)^{y_i}(1-p(x_i))^{1-y_i}[/Tex]

Taking natural logs on both sides

[Tex]\begin{aligned}\log(L(b,w)) &= \sum_{i=1}^{n} y_i\log p(x_i)\;+\; (1-y_i)\log(1-p(x_i)) \\ &=\sum_{i=1}^{n} y_i\log p(x_i)+\log(1-p(x_i))-y_i\log(1-p(x_i)) \\ &=\sum_{i=1}^{n} \log(1-p(x_i)) +\sum_{i=1}^{n}y_i\log \frac{p(x_i)}{1-p(x_i} \\ &=\sum_{i=1}^{n} -\log1-e^{-(w\cdot x_i+b)} +\sum_{i=1}^{n}y_i (w\cdot x_i +b) \\ &=\sum_{i=1}^{n} -\log1+e^{w\cdot x_i+b} +\sum_{i=1}^{n}y_i (w\cdot x_i +b) \end{aligned}[/Tex]

Gradient of the log-likelihood function

To find the maximum likelihood estimates, we differentiate w.r.t w,

[Tex]\begin{aligned} \frac{\partial J(l(b,w)}{\partial w_j}&=-\sum_{i=n}^{n}\frac{1}{1+e^{w\cdot x_i+b}}e^{w\cdot x_i+b} x_{ij} +\sum_{i=1}^{n}y_{i}x_{ij} \\&=-\sum_{i=n}^{n}p(x_i;b,w)x_{ij}+\sum_{i=1}^{n}y_{i}x_{ij} \\&=\sum_{i=n}^{n}(y_i -p(x_i;b,w))x_{ij} \end{aligned} [/Tex]

Binomial Logistic regression:

Target variable can have only 2 possible types: “0” or “1” which may represent “win” vs “loss”, “pass” vs “fail”, “dead” vs “alive”, etc., in this case, sigmoid functions are used, which is already discussed above.

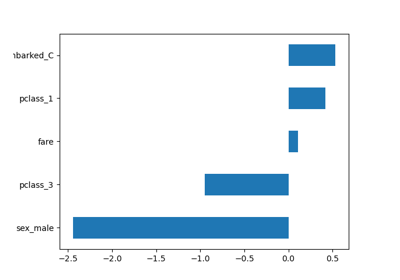

Importing necessary libraries based on the requirement of model. This Python code shows how to use the breast cancer dataset to implement a Logistic Regression model for classification.

# import the necessary libraries from sklearn.datasets import load_breast_cancer from sklearn.linear_model import LogisticRegression from sklearn.model_selection import train_test_split from sklearn.metrics import accuracy_score # load the breast cancer dataset X , y = load_breast_cancer ( return_X_y = True ) # split the train and test dataset X_train , X_test , \ y_train , y_test = train_test_split ( X , y , test_size = 0.20 , random_state = 23 ) # LogisticRegression clf = LogisticRegression ( random_state = 0 ) clf . fit ( X_train , y_train ) # Prediction y_pred = clf . predict ( X_test ) acc = accuracy_score ( y_test , y_pred ) print ( "Logistic Regression model accuracy (in %):" , acc * 100 )

Logistic Regression model accuracy (in %): 95.6140350877193

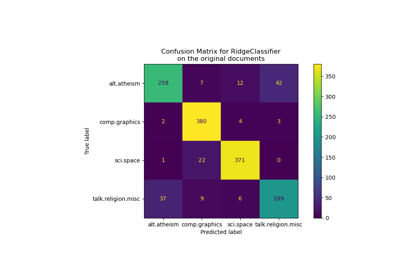

Multinomial Logistic Regression:

Target variable can have 3 or more possible types which are not ordered (i.e. types have no quantitative significance) like “disease A” vs “disease B” vs “disease C”.

In this case, the softmax function is used in place of the sigmoid function. Softmax function for K classes will be:

[Tex]\text{softmax}(z_i) =\frac{ e^{z_i}}{\sum_{j=1}^{K}e^{z_{j}}}[/Tex]

Here, K represents the number of elements in the vector z, and i, j iterates over all the elements in the vector.

Then the probability for class c will be:

[Tex]P(Y=c | \overrightarrow{X}=x) = \frac{e^{w_c \cdot x + b_c}}{\sum_{k=1}^{K}e^{w_k \cdot x + b_k}}[/Tex]

In Multinomial Logistic Regression, the output variable can have more than two possible discrete outputs . Consider the Digit Dataset.

from sklearn.model_selection import train_test_split from sklearn import datasets , linear_model , metrics # load the digit dataset digits = datasets . load_digits () # defining feature matrix(X) and response vector(y) X = digits . data y = digits . target # splitting X and y into training and testing sets X_train , X_test , \ y_train , y_test = train_test_split ( X , y , test_size = 0.4 , random_state = 1 ) # create logistic regression object reg = linear_model . LogisticRegression () # train the model using the training sets reg . fit ( X_train , y_train ) # making predictions on the testing set y_pred = reg . predict ( X_test ) # comparing actual response values (y_test) # with predicted response values (y_pred) print ( "Logistic Regression model accuracy(in %):" , metrics . accuracy_score ( y_test , y_pred ) * 100 )

Logistic Regression model accuracy(in %): 96.52294853963839

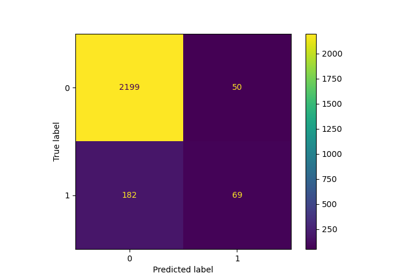

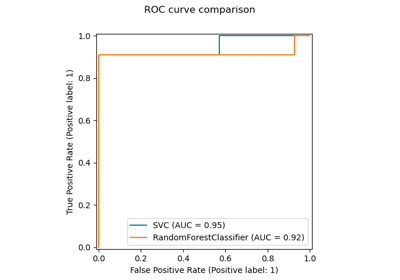

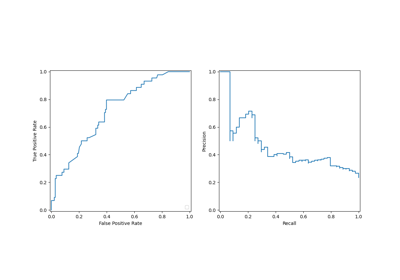

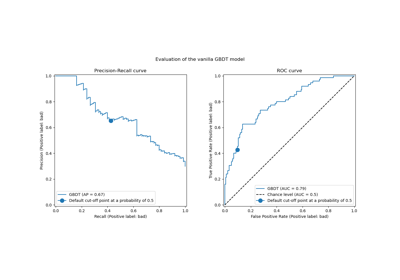

We can evaluate the logistic regression model using the following metrics:

- Accuracy: Accuracy provides the proportion of correctly classified instances. [Tex]Accuracy = \frac{True \, Positives + True \, Negatives}{Total} [/Tex]

- Precision: Precision focuses on the accuracy of positive predictions. [Tex]Precision = \frac{True \, Positives }{True\, Positives + False \, Positives} [/Tex]

- Recall (Sensitivity or True Positive Rate): Recall measures the proportion of correctly predicted positive instances among all actual positive instances. [Tex]Recall = \frac{ True \, Positives}{True\, Positives + False \, Negatives} [/Tex]

- F1 Score: F1 score is the harmonic mean of precision and recall. [Tex]F1 \, Score = 2 * \frac{Precision * Recall}{Precision + Recall} [/Tex]

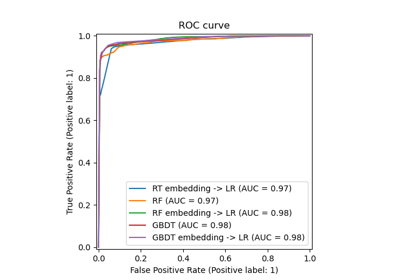

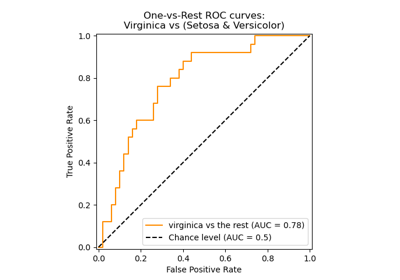

- Area Under the Receiver Operating Characteristic Curve (AUC-ROC): The ROC curve plots the true positive rate against the false positive rate at various thresholds. AUC-ROC measures the area under this curve, providing an aggregate measure of a model’s performance across different classification thresholds.

- Area Under the Precision-Recall Curve (AUC-PR): Similar to AUC-ROC, AUC-PR measures the area under the precision-recall curve, providing a summary of a model’s performance across different precision-recall trade-offs.

Logistic regression becomes a classification technique only when a decision threshold is brought into the picture. The setting of the threshold value is a very important aspect of Logistic regression and is dependent on the classification problem itself.

The decision for the value of the threshold value is majorly affected by the values of precision and recall. Ideally, we want both precision and recall being 1, but this seldom is the case.

In the case of a Precision-Recall tradeoff , we use the following arguments to decide upon the threshold:

- Low Precision/High Recall: In applications where we want to reduce the number of false negatives without necessarily reducing the number of false positives, we choose a decision value that has a low value of Precision or a high value of Recall. For example, in a cancer diagnosis application, we do not want any affected patient to be classified as not affected without giving much heed to if the patient is being wrongfully diagnosed with cancer. This is because the absence of cancer can be detected by further medical diseases, but the presence of the disease cannot be detected in an already rejected candidate.

- High Precision/Low Recall: In applications where we want to reduce the number of false positives without necessarily reducing the number of false negatives, we choose a decision value that has a high value of Precision or a low value of Recall. For example, if we are classifying customers whether they will react positively or negatively to a personalized advertisement, we want to be absolutely sure that the customer will react positively to the advertisement because otherwise, a negative reaction can cause a loss of potential sales from the customer.

The difference between linear regression and logistic regression is that linear regression output is the continuous value that can be anything while logistic regression predicts the probability that an instance belongs to a given class or not.

Linear Regression | Logistic Regression |

|---|---|

Linear regression is used to predict the continuous dependent variable using a given set of independent variables. | Logistic regression is used to predict the categorical dependent variable using a given set of independent variables. |

Linear regression is used for solving regression problem. | It is used for solving classification problems. |

In this we predict the value of continuous variables | In this we predict values of categorical variables |

In this we find best fit line. | In this we find S-Curve. |

Least square estimation method is used for estimation of accuracy. | Maximum likelihood estimation method is used for Estimation of accuracy. |

The output must be continuous value, such as price, age, etc. | Output must be categorical value such as 0 or 1, Yes or no, etc. |

It required linear relationship between dependent and independent variables. | It not required linear relationship. |

There may be collinearity between the independent variables. | There should be little to no collinearity between independent variables. |

Logistic Regression – Frequently Asked Questions (FAQs)

What is logistic regression in machine learning.

Logistic regression is a statistical method for developing machine learning models with binary dependent variables, i.e. binary. Logistic regression is a statistical technique used to describe data and the relationship between one dependent variable and one or more independent variables.

What are the three types of logistic regression?

Logistic regression is classified into three types: binary, multinomial, and ordinal. They differ in execution as well as theory. Binary regression is concerned with two possible outcomes: yes or no. Multinomial logistic regression is used when there are three or more values.

Why logistic regression is used for classification problem?

Logistic regression is easier to implement, interpret, and train. It classifies unknown records very quickly. When the dataset is linearly separable, it performs well. Model coefficients can be interpreted as indicators of feature importance.

What distinguishes Logistic Regression from Linear Regression?

While Linear Regression is used to predict continuous outcomes, Logistic Regression is used to predict the likelihood of an observation falling into a specific category. Logistic Regression employs an S-shaped logistic function to map predicted values between 0 and 1.

What role does the logistic function play in Logistic Regression?

Logistic Regression relies on the logistic function to convert the output into a probability score. This score represents the probability that an observation belongs to a particular class. The S-shaped curve assists in thresholding and categorising data into binary outcomes.

Please Login to comment...

Similar reads.

- How to Get a Free SSL Certificate

- Best SSL Certificates Provider in India

- Elon Musk's xAI releases Grok-2 AI assistant

- What is OpenAI SearchGPT? How it works and How to Get it?

- Full Stack Developer Roadmap [2024 Updated]

Improve your Coding Skills with Practice

What kind of Experience do you want to share?

Introduction to Statistics and Data Science

Chapter 18 logistic regression, 18.1 what is logistic regression used for.

Logistic regression is useful when we have a response variable which is categorical with only two categories. This might seem like it wouldn’t be especially useful, however with a little thought we can see that this is actually a very useful thing to know how to do. Here are some examples where we might use logistic regression .

- Predict whether a customer will visit your website again using browsing data

- Predict whether a voter will vote for the democratic candidate in an upcoming election using demographic and polling data

- Predict whether a patient given a surgery will survive for 5+ years after the surgery using health data

- Given the history of a stock, market trends predict if the closing price tomorrow will be higher or lower than today?

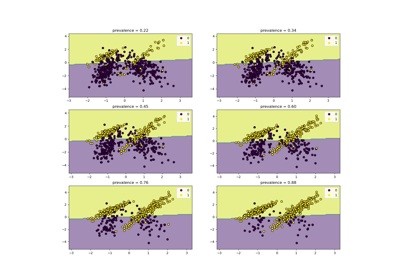

With many other possible examples. We can often phrase important questions as yes/no or (0-1) answers where we want to use some data to better predict the outcome. This is a simple case of what is called a classification problem in the machine learning/data science community. Given some information we want to use a computer to decide make a prediction which can be sorted into some finite number of outcomes.

18.2 GLM: Generalized Linear Models

Our linear regression techniques thus far have focused on cases where the response ( \(Y\) ) variable is continuous in nature. Recall, they take the form: \[ \begin{equation} Y_i=\alpha+ \sum_{j=1}^N \beta_j X_{ij} \end{equation} \] Where \(alpha\) is the intercept and \(\{\beta_1, \beta_2, ... \beta_N\}\) are the slope parameters for the explanatory variables ( \(\{X_1, X_2, ...X_N\}\) ). However, our outputs \(Y_i\) should give the probability that \(Y_i\) takes the value 1 given the \(X_j\) values. The right hand side of our model above will produce values in \(\mathbb{R}=(-\infty, \infty)\) while the left hand side should live in \([0,1]\) .

Therefore to use a model like this we need to transform our outputs from [0,1] to the whole real line \(\mathbb{R}\) .

\[y_i=g \left( \alpha+ \sum_{j=1}^N \beta_j X_{ij} \right)\]

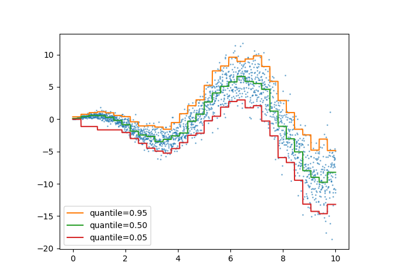

18.3 A Starting Example

Let’s consider the shot logs data set again. We will use the shot distance column SHOT_DIST and the FGM columns for a logistic regression. The FGM column is 1 if the shot was made and 0 otherwise (perfect candidate for the response variable in a logistic regression). We expect that the further the shot is from the basket (SHOT_DIST) the less likely it will be that the shot is made (FGM=1).

To build this model in R we will use the glm() command and specify the link function we are using a the logit function.

\[logit(p)=0.392-0.04 \times SD \implies p=logit^{-1}(0.392-0.04 \times SD)\] So we can find the probability of a shot going in 12 feet from the basket as:

Here is a plot of the probability of a shot going in as a function of the distance from the basket using our best fit coefficients.

18.3.1 Confidence Intervals for the Parameters

A major point of this book is that you should never be satisfied with a single number summary in statistics. Rather than just considering a single best fit for our coefficients we should really form some confidence intervals for their values.

As we saw for simple regression we can look at the confidence intervals for our intercepts and slopes using the confint command.

Note, these values are still in the logit transformed scale.

18.4 Equivalence of Logistic Regression and Proportion Tests

Suppose we want to use the categorical variable of the individual player in our analysis. In the interest of keeping our tables and graphs visible we will limit our players to just those who took more than 820 shots in the data set.

| Name | Number of Shots |

|---|---|

| blake griffin | 878 |

| chris paul | 851 |

| damian lillard | 925 |

| gordon hayward | 833 |

| james harden | 1006 |

| klay thompson | 953 |

| kyle lowry | 832 |

| kyrie irving | 919 |

| lamarcus aldridge | 1010 |

| lebron james | 947 |

| mnta ellis | 1004 |

| nikola vucevic | 889 |

| rudy gay | 861 |

| russell westbrook | 943 |

| stephen curry | 941 |

| tyreke evans | 875 |

Now we can get a reduced data set with just these players.

Lets form a logistic regression using just a categorical variable as the explanatory variable. \[ \begin{equation} logit(p)=\beta Player \end{equation} \]

If we take the inverse logit of the coefficients we get the field goal percentage of the players in our data set.

Now suppose we want to see if the players in our data set truly differ in their field goal percentages or whether the differences we observe could just be caused by random effects. To do this we want to compare a model without the players information included with one that includes this information. Let’s create a null model to compare against our player model.

This null model contains no explanatory variables and takes the form: \[logit(p_i)=\alpha\]

Thus, the shooting percentage is not allowed to vary between the players. We find based on this data an overall field goal percentage of:

Now we may compare logistic regression models using the anova command in R.

The second line contains a p value of 2.33e-5 telling us to reject the null hypothesis that the two models are equivalent. So we found that knowledge of the player does matter in calculating the probability of a shot being made.

Notice we could have performed this analysis as a proportion test using the null that all players shooting percentages are the same \(p_1=p_2=...p_{15}\)

Notice the p-value obtained matches the logistic regression ANOVA almost exactly. Thus, a proportion test can be viewed as a special case of a logistic regression.

18.5 Example: Building a More Accurate Model

Now we can form a model for the shooting percentages using the individual players data:

\[ logit(p_i)=\alpha+\beta_1 SF+\beta_2DD+\beta_3 \text{player_dummy} \]

18.6 Example: Measuring Team Defense Using Logistic Regression

\[ logit(p_i)=\alpha+\beta_1 SD+\beta_2 \text{Team}+\beta_3 (\text{Team}) (SD) \] Since the team defending is a categorical variable R will store it as a dummy variable when forming the regression. Thus the first level of this variable will not appear in our regression (or more precisely it will be included in the intercept \(\alpha\) and slope \(\beta_1\) ). Before we run the model we can see which team will be missing.

The below plot shows the expected shooting percentages at each distance for the teams in the data set.

#Better Approach

Kahneman, Daniel. 2011. Thinking, Fast and Slow . Macmillan.

Wickham, Hadley, and Garrett Grolemund. 2016. R for Data Science: Import, Tidy, Transform, Visualize, and Model Data . " O’Reilly Media, Inc.".

Xie, Yihui. 2019. Bookdown: Authoring Books and Technical Documents with R Markdown . https://CRAN.R-project.org/package=bookdown .

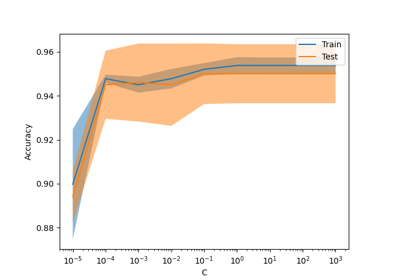

LogisticRegression #

Logistic Regression (aka logit, MaxEnt) classifier.

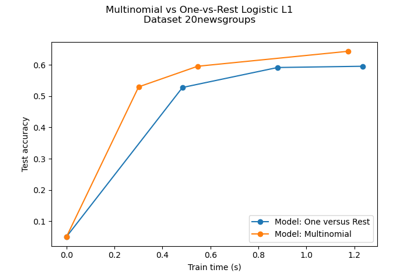

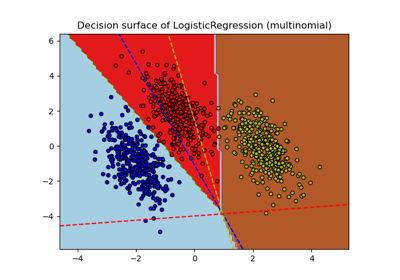

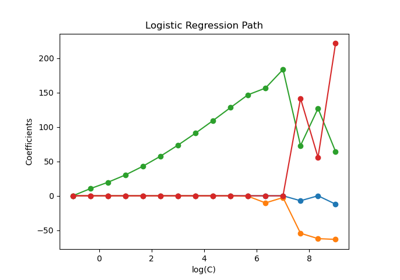

In the multiclass case, the training algorithm uses the one-vs-rest (OvR) scheme if the ‘multi_class’ option is set to ‘ovr’, and uses the cross-entropy loss if the ‘multi_class’ option is set to ‘multinomial’. (Currently the ‘multinomial’ option is supported only by the ‘lbfgs’, ‘sag’, ‘saga’ and ‘newton-cg’ solvers.)

This class implements regularized logistic regression using the ‘liblinear’ library, ‘newton-cg’, ‘sag’, ‘saga’ and ‘lbfgs’ solvers. Note that regularization is applied by default . It can handle both dense and sparse input. Use C-ordered arrays or CSR matrices containing 64-bit floats for optimal performance; any other input format will be converted (and copied).

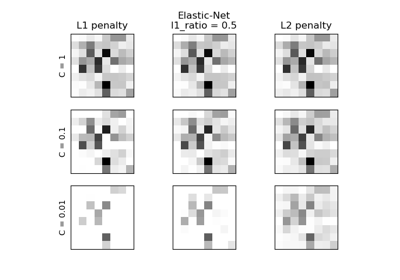

The ‘newton-cg’, ‘sag’, and ‘lbfgs’ solvers support only L2 regularization with primal formulation, or no regularization. The ‘liblinear’ solver supports both L1 and L2 regularization, with a dual formulation only for the L2 penalty. The Elastic-Net regularization is only supported by the ‘saga’ solver.

Read more in the User Guide .

Specify the norm of the penalty:

None : no penalty is added;

'l2' : add a L2 penalty term and it is the default choice;

'l1' : add a L1 penalty term;

'elasticnet' : both L1 and L2 penalty terms are added.

Some penalties may not work with some solvers. See the parameter solver below, to know the compatibility between the penalty and solver.

Added in version 0.19: l1 penalty with SAGA solver (allowing ‘multinomial’ + L1)

Dual (constrained) or primal (regularized, see also this equation ) formulation. Dual formulation is only implemented for l2 penalty with liblinear solver. Prefer dual=False when n_samples > n_features.

Tolerance for stopping criteria.

Inverse of regularization strength; must be a positive float. Like in support vector machines, smaller values specify stronger regularization.

Specifies if a constant (a.k.a. bias or intercept) should be added to the decision function.

Useful only when the solver ‘liblinear’ is used and self.fit_intercept is set to True. In this case, x becomes [x, self.intercept_scaling], i.e. a “synthetic” feature with constant value equal to intercept_scaling is appended to the instance vector. The intercept becomes intercept_scaling * synthetic_feature_weight .

Note! the synthetic feature weight is subject to l1/l2 regularization as all other features. To lessen the effect of regularization on synthetic feature weight (and therefore on the intercept) intercept_scaling has to be increased.

Weights associated with classes in the form {class_label: weight} . If not given, all classes are supposed to have weight one.

The “balanced” mode uses the values of y to automatically adjust weights inversely proportional to class frequencies in the input data as n_samples / (n_classes * np.bincount(y)) .

Note that these weights will be multiplied with sample_weight (passed through the fit method) if sample_weight is specified.

Added in version 0.17: class_weight=’balanced’

Used when solver == ‘sag’, ‘saga’ or ‘liblinear’ to shuffle the data. See Glossary for details.

Algorithm to use in the optimization problem. Default is ‘lbfgs’. To choose a solver, you might want to consider the following aspects:

For small datasets, ‘liblinear’ is a good choice, whereas ‘sag’ and ‘saga’ are faster for large ones;

For multiclass problems, only ‘newton-cg’, ‘sag’, ‘saga’ and ‘lbfgs’ handle multinomial loss;

‘liblinear’ and ‘newton-cholesky’ can only handle binary classification by default. To apply a one-versus-rest scheme for the multiclass setting one can wrapt it with the OneVsRestClassifier .

‘newton-cholesky’ is a good choice for n_samples >> n_features , especially with one-hot encoded categorical features with rare categories. Be aware that the memory usage of this solver has a quadratic dependency on n_features because it explicitly computes the Hessian matrix.

The choice of the algorithm depends on the penalty chosen and on (multinomial) multiclass support:

solver | penalty | multinomial multiclass |

|---|---|---|

‘lbfgs’ | ‘l2’, None | yes |

‘liblinear’ | ‘l1’, ‘l2’ | no |

‘newton-cg’ | ‘l2’, None | yes |

‘newton-cholesky’ | ‘l2’, None | no |

‘sag’ | ‘l2’, None | yes |

‘saga’ | ‘elasticnet’, ‘l1’, ‘l2’, None | yes |

‘sag’ and ‘saga’ fast convergence is only guaranteed on features with approximately the same scale. You can preprocess the data with a scaler from sklearn.preprocessing .

Refer to the User Guide for more information regarding LogisticRegression and more specifically the Table summarizing solver/penalty supports.

Added in version 0.17: Stochastic Average Gradient descent solver.

Added in version 0.19: SAGA solver.

Changed in version 0.22: The default solver changed from ‘liblinear’ to ‘lbfgs’ in 0.22.

Added in version 1.2: newton-cholesky solver.

Maximum number of iterations taken for the solvers to converge.

If the option chosen is ‘ovr’, then a binary problem is fit for each label. For ‘multinomial’ the loss minimised is the multinomial loss fit across the entire probability distribution, even when the data is binary . ‘multinomial’ is unavailable when solver=’liblinear’. ‘auto’ selects ‘ovr’ if the data is binary, or if solver=’liblinear’, and otherwise selects ‘multinomial’.

Added in version 0.18: Stochastic Average Gradient descent solver for ‘multinomial’ case.

Changed in version 0.22: Default changed from ‘ovr’ to ‘auto’ in 0.22.

Deprecated since version 1.5: multi_class was deprecated in version 1.5 and will be removed in 1.7. From then on, the recommended ‘multinomial’ will always be used for n_classes >= 3 . Solvers that do not support ‘multinomial’ will raise an error. Use sklearn.multiclass.OneVsRestClassifier(LogisticRegression()) if you still want to use OvR.

For the liblinear and lbfgs solvers set verbose to any positive number for verbosity.

When set to True, reuse the solution of the previous call to fit as initialization, otherwise, just erase the previous solution. Useless for liblinear solver. See the Glossary .

Added in version 0.17: warm_start to support lbfgs , newton-cg , sag , saga solvers.

Number of CPU cores used when parallelizing over classes if multi_class=’ovr’”. This parameter is ignored when the solver is set to ‘liblinear’ regardless of whether ‘multi_class’ is specified or not. None means 1 unless in a joblib.parallel_backend context. -1 means using all processors. See Glossary for more details.

The Elastic-Net mixing parameter, with 0 <= l1_ratio <= 1 . Only used if penalty='elasticnet' . Setting l1_ratio=0 is equivalent to using penalty='l2' , while setting l1_ratio=1 is equivalent to using penalty='l1' . For 0 < l1_ratio <1 , the penalty is a combination of L1 and L2.

A list of class labels known to the classifier.

Coefficient of the features in the decision function.

coef_ is of shape (1, n_features) when the given problem is binary. In particular, when multi_class='multinomial' , coef_ corresponds to outcome 1 (True) and -coef_ corresponds to outcome 0 (False).

Intercept (a.k.a. bias) added to the decision function.

If fit_intercept is set to False, the intercept is set to zero. intercept_ is of shape (1,) when the given problem is binary. In particular, when multi_class='multinomial' , intercept_ corresponds to outcome 1 (True) and -intercept_ corresponds to outcome 0 (False).

Number of features seen during fit .

Added in version 0.24.

Names of features seen during fit . Defined only when X has feature names that are all strings.

Added in version 1.0.

Actual number of iterations for all classes. If binary or multinomial, it returns only 1 element. For liblinear solver, only the maximum number of iteration across all classes is given.

Changed in version 0.20: In SciPy <= 1.0.0 the number of lbfgs iterations may exceed max_iter . n_iter_ will now report at most max_iter .

Incrementally trained logistic regression (when given the parameter loss="log_loss" ).

Logistic regression with built-in cross validation.

The underlying C implementation uses a random number generator to select features when fitting the model. It is thus not uncommon, to have slightly different results for the same input data. If that happens, try with a smaller tol parameter.

Predict output may not match that of standalone liblinear in certain cases. See differences from liblinear in the narrative documentation.

Ciyou Zhu, Richard Byrd, Jorge Nocedal and Jose Luis Morales. http://users.iems.northwestern.edu/~nocedal/lbfgsb.html

https://www.csie.ntu.edu.tw/~cjlin/liblinear/

Minimizing Finite Sums with the Stochastic Average Gradient https://hal.inria.fr/hal-00860051/document

“SAGA: A Fast Incremental Gradient Method With Support for Non-Strongly Convex Composite Objectives”

methods for logistic regression and maximum entropy models. Machine Learning 85(1-2):41-75. https://www.csie.ntu.edu.tw/~cjlin/papers/maxent_dual.pdf

Predict confidence scores for samples.

The confidence score for a sample is proportional to the signed distance of that sample to the hyperplane.

The data matrix for which we want to get the confidence scores.

Confidence scores per (n_samples, n_classes) combination. In the binary case, confidence score for self.classes_[1] where >0 means this class would be predicted.

Convert coefficient matrix to dense array format.

Converts the coef_ member (back) to a numpy.ndarray. This is the default format of coef_ and is required for fitting, so calling this method is only required on models that have previously been sparsified; otherwise, it is a no-op.

Fitted estimator.

Fit the model according to the given training data.

Training vector, where n_samples is the number of samples and n_features is the number of features.

Target vector relative to X.

Array of weights that are assigned to individual samples. If not provided, then each sample is given unit weight.

Added in version 0.17: sample_weight support to LogisticRegression.

The SAGA solver supports both float64 and float32 bit arrays.