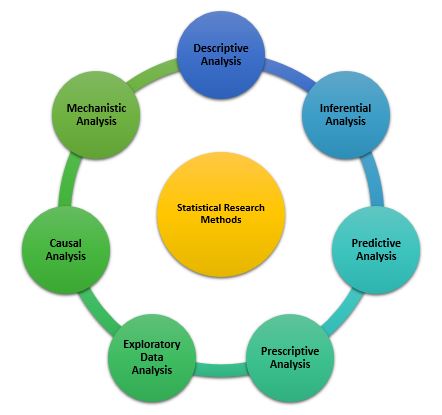

Statistical Methods for Data Analysis: A Comprehensive Guide

In today’s data-driven world, understanding statistical methods for data analysis is like having a superpower.

Whether you’re a student, a professional, or just a curious mind, diving into the realm of data can unlock insights and decisions that propel success.

Statistical methods for data analysis are the tools and techniques used to collect, analyze, interpret, and present data in a meaningful way.

From businesses optimizing operations to researchers uncovering new discoveries, these methods are foundational to making informed decisions based on data.

In this blog post, we’ll embark on a journey through the fascinating world of statistical analysis, exploring its key concepts, methodologies, and applications.

Introduction to Statistical Methods

At its core, statistical methods are the backbone of data analysis, helping us make sense of numbers and patterns in the world around us.

Whether you’re looking at sales figures, medical research, or even your fitness tracker’s data, statistical methods are what turn raw data into useful insights.

But before we dive into complex formulas and tests, let’s start with the basics.

Data comes in two main types: qualitative and quantitative.

Quantitative data is all about numbers and quantities (like your height or the number of steps you walked today), while qualitative data deals with categories and qualities (like your favorite color or the breed of your dog).

And when we talk about measuring these data points, we use different scales: nominal, ordinal, interval, and ratio.

These scales help us understand the nature of our data: whether we’re simply categorizing it (nominal), ranking it (ordinal), measuring it in equal steps but without a true zero (interval, like temperature in Celsius), or measuring it with a true zero point (ratio, like height).

In a nutshell, statistical methods start with understanding the type and scale of your data.

This foundational knowledge sets the stage for everything from summarizing your data to making complex predictions.

Descriptive Statistics: Simplifying Data

Imagine you’re at a party and you meet a bunch of new people.

When you go home, your roommate asks, “So, what were they like?” You could describe each person in detail, but instead, you give a summary: “Most were college students, around 20-25 years old, pretty fun crowd!”

That’s essentially what descriptive statistics does for data.

It summarizes and describes the main features of a collection of data in an easy-to-understand way. Let’s break this down further.

The Basics: Mean, Median, and Mode

- Mean is just a fancy term for the average. If you add up everyone’s age at the party and divide by the number of people, you’ve got your mean age.

- Median is the middle number in a sorted list. If you line up everyone from the youngest to the oldest and pick the person in the middle, their age is your median. This is super handy when someone’s age is way off the chart (like if your grandma crashed the party), because one extreme value barely moves the median, while it can drag the mean a long way.

- Mode is the most common age at the party. If you notice a lot of people are 22, then 22 is your mode. It’s like the age that wins the popularity contest.
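
To make the three measures concrete, here is a minimal sketch using Python’s built-in `statistics` module. The guest ages are made up for illustration, with one 78-year-old standing in for grandma.

```python
import statistics

# Hypothetical ages of party guests; 78 is grandma crashing the party.
ages = [21, 22, 22, 23, 24, 22, 25, 78]

mean_age = statistics.mean(ages)      # sum of ages divided by number of guests
median_age = statistics.median(ages)  # middle value of the sorted list
mode_age = statistics.mode(ages)      # most frequent age

print(f"mean={mean_age}, median={median_age}, mode={mode_age}")
# prints: mean=29.625, median=22.5, mode=22
```

Notice how grandma pulls the mean up to almost 30, while the median and mode stay close to where most guests actually are.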

Spreading the News: Range, Variance, and Standard Deviation

- Range gives you an idea of how spread out the ages are. It’s the difference between the oldest and the youngest. A small range means everyone’s around the same age, while a big range means a wider variety.

- Variance is a bit more complex. It measures how much the ages differ from the average age, calculated as the average of the squared differences from the mean. A higher variance means ages are more spread out.

- Standard Deviation is the square root of variance. It’s like variance but back in the original units (years rather than years squared). It tells you, roughly, how far a typical person’s age is from the mean age.
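
The three measures of spread can be sketched the same way. This assumes the same made-up guest ages as an example; `pvariance` and `pstdev` treat the list as the whole population, while `variance` divides by n - 1 and is the usual choice when the list is only a sample.

```python
import statistics

ages = [21, 22, 22, 23, 24, 22, 25, 78]  # hypothetical guest ages

age_range = max(ages) - min(ages)            # oldest minus youngest
variance = statistics.pvariance(ages)        # mean squared distance from the mean
std_dev = statistics.pstdev(ages)            # square root of the variance
sample_variance = statistics.variance(ages)  # divides by n - 1 instead of n

print(f"range={age_range}, variance={variance:.2f}, std dev={std_dev:.2f}")
```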

Picture Perfect: Graphical Representations

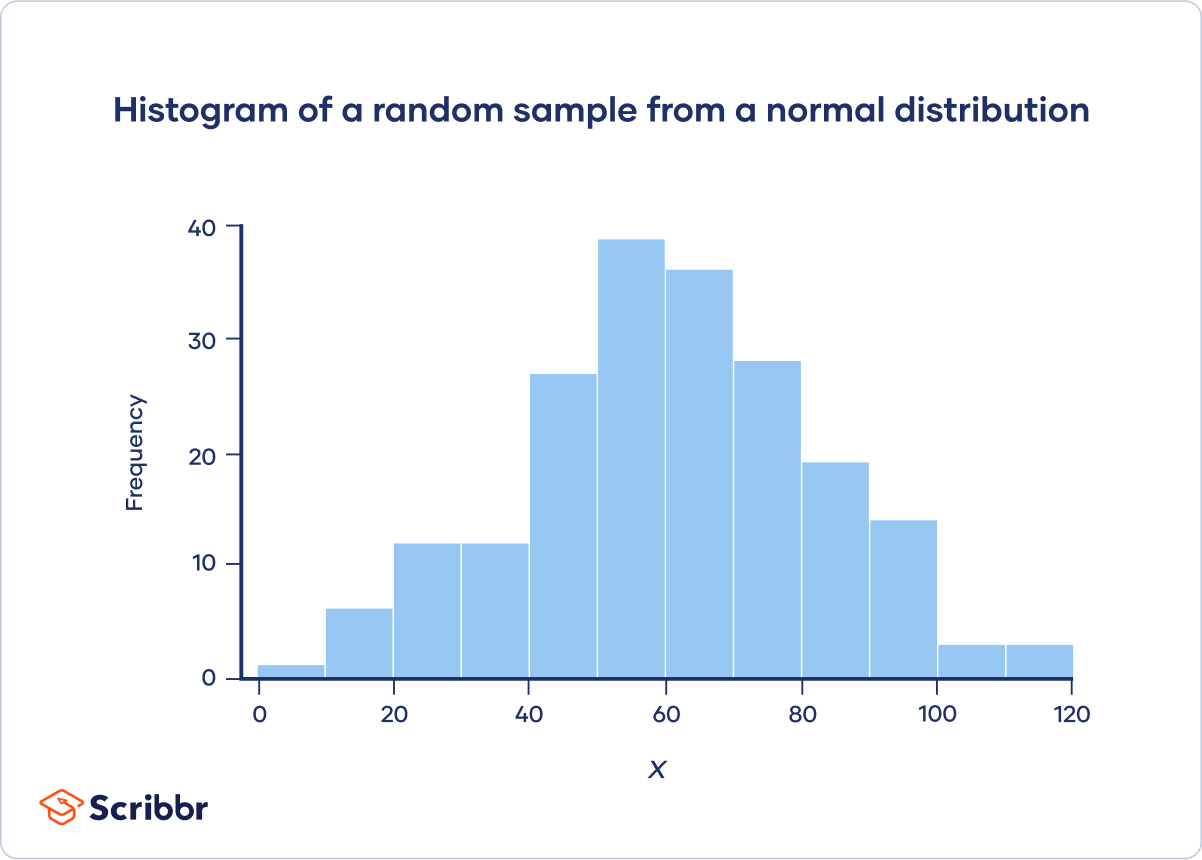

- Histograms are like bar charts showing how many people fall into different age groups. They give you a quick glance at how ages are distributed.

- Bar Charts are great for comparing different categories, like how many men vs. women were at the party.

- Box Plots (or box-and-whisker plots) show you the median, the quartiles (where the middle 50% of ages fall), the overall spread, and any outliers (like grandma).

- Scatter Plots are used when you want to see if there’s a relationship between two things, like if bringing more snacks means people stay longer at the party.

Why Descriptive Statistics Matter

Descriptive statistics are your first step in data analysis.

They help you understand your data at a glance and prepare you for deeper analysis.

Without them, you’re like someone trying to guess what a party was like without any context.

Whether you’re looking at survey responses, test scores, or party attendees, descriptive statistics give you the tools to summarize and describe your data in a way that’s easy to grasp.

This approach is crucial in educational settings, particularly for enhancing math learning outcomes. For those looking to deepen their understanding of math or seeking additional support, check out this link: https://www.mathnasium.com/math-tutors-near-me.

Remember, the goal of descriptive statistics is to simplify the complex.

Inferential Statistics: Beyond the Basics

Let’s keep the party analogy rolling, but this time, imagine you couldn’t attend the party yourself.

You’re curious if the party was as fun as everyone said it would be.

Instead of asking every single attendee, you decide to ask a few friends who went.

Based on their experiences, you try to infer what the entire party was like.

This is essentially what inferential statistics does with data.

It allows you to make predictions or draw conclusions about a larger group (the population) based on a smaller group (a sample). Let’s dive into how this works.

Probability

Inferential statistics is all about playing the odds.

When you make an inference, you’re saying, “Based on my sample, there’s a certain probability that my conclusion about the whole population is correct.”

It’s like betting on whether the party was fun, based on a few friends’ opinions.

The Central Limit Theorem (CLT)

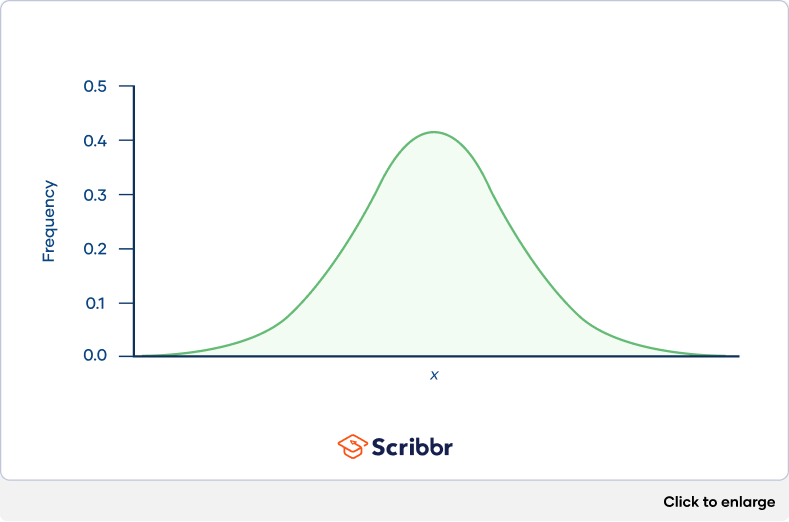

The Central Limit Theorem is the superhero of statistics.

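It tells us that if you repeatedly draw samples of a large enough size from a population (a common rule of thumb is 30 or more observations per sample), the sample means (averages) will be approximately normally distributed (a bell curve), almost regardless of what the population distribution looks like, as long as the population has a finite variance.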

This is crucial because it allows us to use sample data to make inferences about the population mean with a known level of uncertainty.
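
A quick simulation can make this tangible. The sketch below is an illustration under assumed settings (an exponential population, 50 observations per sample, 2,000 samples), not a proof: it draws from a heavily skewed population and checks that the sample means cluster around the population mean with a spread close to the theoretical value of sigma divided by the square root of the sample size.

```python
import random
import statistics

random.seed(42)  # fixed seed so the sketch is repeatable

SAMPLE_SIZE = 50    # observations per sample (assumed for illustration)
NUM_SAMPLES = 2000  # number of samples drawn (assumed for illustration)

# Population: exponential with rate 1, which is strongly right-skewed
# and has mean 1 and standard deviation 1.
sample_means = [
    statistics.fmean([random.expovariate(1.0) for _ in range(SAMPLE_SIZE)])
    for _ in range(NUM_SAMPLES)
]

mean_of_means = statistics.fmean(sample_means)
spread_of_means = statistics.stdev(sample_means)
expected_spread = 1.0 / SAMPLE_SIZE ** 0.5  # sigma / sqrt(n)

print(f"mean of sample means: {mean_of_means:.3f} (population mean is 1)")
print(f"spread of sample means: {spread_of_means:.3f} (theory: {expected_spread:.3f})")
```

Plotting `sample_means` as a histogram would show the familiar bell shape, even though the individual observations are far from bell-shaped.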

Confidence Intervals

Imagine you’re pretty sure the party was fun, but you want to know how fun.

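A confidence interval gives you a range of plausible values for the true mean fun level of the party, calculated from your sample.

It’s like saying, “I’m 95% confident the party’s fun rating was between 7 and 9 out of 10,” where the 95% describes how often intervals built this way would capture the true mean across many repeated samples.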
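
Here is a minimal sketch of how such an interval might be computed, assuming twelve made-up fun ratings and a t-based interval (a common choice for small samples). It uses `scipy.stats.t.ppf` to look up the critical value.

```python
import statistics
from scipy import stats

ratings = [7, 8, 9, 8, 7, 9, 8, 6, 9, 8, 7, 10]  # hypothetical fun ratings

n = len(ratings)
mean = statistics.mean(ratings)
sem = statistics.stdev(ratings) / n ** 0.5  # standard error of the mean
t_crit = stats.t.ppf(0.975, df=n - 1)       # two-sided 95% critical value

lower = mean - t_crit * sem
upper = mean + t_crit * sem
print(f"95% CI for the mean rating: ({lower:.2f}, {upper:.2f})")
```

A larger sample or less variable ratings would shrink the standard error and therefore narrow the interval.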

Hypothesis Testing

This is where you get to be a bit of a detective. You start with a hypothesis (a guess) about the population.

For example, your null hypothesis might be “the party was average fun.” Then you use your sample data to test this hypothesis.

If the data strongly suggests otherwise, you might reject the null hypothesis in favor of the alternative hypothesis, which could be “the party was super fun.”

The p-value tells you how likely it is that you would see data at least as extreme as yours if the null hypothesis were true.

A low p-value (typically less than 0.05) indicates that your findings are statistically significant, that is, they would be unlikely to arise from random sampling variation alone if the null hypothesis were true.

It’s like saying, “If the party really had been just average, it would be very unusual for this many of my friends to rave about it, so the party probably was fun.”
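
As a rough sketch of this workflow, the example below runs a one-sample t-test with `scipy.stats.ttest_1samp`. The ratings are invented, and treating 5 out of 10 as “average fun” is an assumption made purely for illustration.

```python
from scipy import stats

ratings = [7, 8, 9, 8, 7, 9, 8, 6, 9, 8, 7, 10]  # hypothetical fun ratings
AVERAGE_FUN = 5  # assumed null hypothesis: the true mean rating is 5
ALPHA = 0.05     # conventional significance threshold

result = stats.ttest_1samp(ratings, popmean=AVERAGE_FUN)
t_stat, p_value = result.statistic, result.pvalue
reject_null = p_value < ALPHA

print(f"t = {t_stat:.2f}, p = {p_value:.6f}, reject null: {reject_null}")
```

Rejecting the null here says the sample would be surprising if the party were merely average. It does not measure how fun the party was, which is what a confidence interval is for.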

Why Inferential Statistics Matter

Inferential statistics let us go beyond just describing our data.

They allow us to make educated guesses about a larger population based on a sample.

This is incredibly useful in almost every field—science, business, public health, and yes, even planning your next party.

By using probability, the Central Limit Theorem, confidence intervals, hypothesis testing, and p-values, we can make informed decisions without needing to ask every single person in the population.

It saves time, resources, and helps us understand the world more scientifically.

Remember, while inferential statistics gives us powerful tools for making predictions, those predictions come with a level of uncertainty.

Being a good data scientist means understanding and communicating that uncertainty clearly.

So next time you hear about a party you missed, use inferential statistics to figure out just how much FOMO (fear of missing out) you should really feel!

Common Statistical Tests: Choosing Your Data’s Best Friend

Alright, now that we’ve covered the basics of descriptive and inferential statistics, it’s time to talk about how we actually apply these concepts to make sense of data.

It’s like deciding on the best way to find out who was the life of the party.

You have several tools (tests) at your disposal, and choosing the right one depends on what you’re trying to find out and the type of data you have.

Let’s explore some of the most common statistical tests and when to use them.

T-Tests: Comparing Averages

Imagine you want to know if the average fun level was higher at this year’s party compared to last year’s.

A t-test helps you compare the means (averages) of two groups to see if they’re statistically different.

There are a couple of flavors:

- Independent t-test: Use this when comparing two different groups, like this year’s party vs. last year’s party.

- Paired t-test: Use this when comparing the same group at two different times or under two different conditions, like if you measured everyone’s fun level before and after the party.
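
Both flavors are available in SciPy. The sketch below uses invented ratings; `equal_var=False` selects Welch’s version of the independent test, which is an assumption on my part that avoids requiring equal variances in the two groups.

```python
from scipy import stats

# Independent t-test: two different groups of guests (hypothetical ratings).
last_year = [5, 6, 7, 5, 6, 6, 7, 5]
this_year = [7, 8, 9, 8, 7, 9, 8, 6]
independent = stats.ttest_ind(this_year, last_year, equal_var=False)

# Paired t-test: the same six guests rated before and after the party.
before = [4, 5, 6, 5, 4, 6]
after = [7, 8, 8, 7, 6, 9]
paired = stats.ttest_rel(after, before)

print(f"independent: t = {independent.statistic:.2f}, p = {independent.pvalue:.4f}")
print(f"paired:      t = {paired.statistic:.2f}, p = {paired.pvalue:.4f}")
```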

ANOVA: When Three’s Not a Crowd

But what if you had three or more parties to compare? That’s where ANOVA (Analysis of Variance) comes in handy.

It lets you compare the means across multiple groups at once to see if at least one of them is significantly different.

It’s like comparing the fun levels across several years’ parties to see if one year stood out.
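
A one-way ANOVA on three parties might look like the sketch below, using `scipy.stats.f_oneway` on made-up ratings. The year labels are hypothetical.

```python
from scipy import stats

# Hypothetical fun ratings from three different years' parties.
party_2021 = [5, 6, 5, 6, 7, 5]
party_2022 = [6, 7, 6, 7, 6, 7]
party_2023 = [8, 9, 9, 8, 9, 10]

f_stat, p_value = stats.f_oneway(party_2021, party_2022, party_2023)
print(f"F = {f_stat:.2f}, p = {p_value:.6f}")
```

A small p-value only says that at least one year differs. Finding out which one usually takes a follow-up (post hoc) comparison.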

Chi-Square Test: Categorically Speaking

Now, let’s say you’re interested in whether the type of music (pop, rock, electronic) affects party attendance.

Since you’re dealing with categories (types of music) and counts (number of attendees), you’ll use the Chi-Square test.

It’s great for seeing if there’s a relationship between two categorical variables.
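
As an illustration, suppose you sent 60 invitations for each music theme and counted who attended and who declined. The counts below are invented, and `scipy.stats.chi2_contingency` tests whether attendance is independent of music type.

```python
from scipy.stats import chi2_contingency

# Rows: music type; columns: [attended, declined] (hypothetical counts).
observed = [
    [45, 15],  # pop
    [30, 30],  # rock
    [20, 40],  # electronic
]

chi2, p_value, dof, expected = chi2_contingency(observed)

print(f"chi-square = {chi2:.2f}, dof = {dof}, p = {p_value:.6f}")
print("expected counts if music made no difference:")
print(expected)
```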

Correlation and Regression: Finding Relationships

What if you suspect that the amount of snacks available at the party affects how long guests stay? To explore this, you’d use:

- Correlation analysis to see if there’s a relationship between two continuous variables (like snacks and party duration). It tells you how closely related two things are.

- Regression analysis goes a step further by not only showing if there’s a relationship but also how one variable predicts the other. It’s like saying, “For every extra bag of chips, guests stay an average of 10 minutes longer.”
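
Both ideas can be sketched in a few lines with SciPy. The snack and duration figures are made up, chosen so that the fitted slope lands near the “10 minutes per bag” from the example above.

```python
from scipy import stats

# Hypothetical data: bags of snacks at each party and average minutes guests stayed.
snack_bags = [1, 2, 3, 4, 5, 6, 7, 8]
minutes_stayed = [65, 70, 85, 90, 105, 110, 118, 135]

# Correlation: how strongly the two variables move together (-1 to 1).
r, p_value = stats.pearsonr(snack_bags, minutes_stayed)

# Regression: the line that best predicts minutes from snack bags.
fit = stats.linregress(snack_bags, minutes_stayed)

print(f"correlation r = {r:.3f} (p = {p_value:.6f})")
print(f"minutes = {fit.intercept:.1f} + {fit.slope:.1f} * bags")
```

Keep in mind that a strong correlation does not show that snacks cause longer stays. Bigger parties might simply have both more snacks and more reasons to linger.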

Non-parametric Tests: When Assumptions Don’t Hold

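Many of the tests mentioned above (t-tests, ANOVA, and linear regression in particular) assume that your data, or the model’s residuals, are roughly normally distributed and meet other criteria, such as similar variances across groups.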

But what if your data doesn’t play by these rules?

Enter non-parametric tests, like the Mann-Whitney U test (for comparing two groups when you can’t use a t-test) or the Kruskal-Wallis test (like ANOVA but for non-normal distributions).

Picking the Right Test

Choosing the right statistical test is crucial and depends on:

- The type of data you have (categorical vs. continuous).

- Whether you’re comparing groups or looking for relationships.

- The distribution of your data (normal vs. non-normal).
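
The three questions above can be turned into a rule-of-thumb lookup. The helper below, `suggest_test`, is a hypothetical function written for this post, not part of any library, and it only covers the tests discussed here.

```python
def suggest_test(data_type, goal, groups=2, normal=True, paired=False):
    """Map the three questions above to one of the tests covered in this post.

    data_type: "categorical" or "continuous"
    goal: "compare" (groups) or "relationship" (between two variables)
    """
    if data_type == "categorical":
        return "chi-square test"
    if goal == "relationship":
        return "Pearson correlation / regression" if normal else "Spearman's rank correlation"
    if goal != "compare":
        raise ValueError(f"unknown goal: {goal!r}")
    if groups >= 3:
        return "ANOVA" if normal else "Kruskal-Wallis test"
    if paired:
        return "paired t-test" if normal else "Wilcoxon signed-rank test"
    return "independent t-test" if normal else "Mann-Whitney U test"


print(suggest_test("continuous", "compare", groups=3))
print(suggest_test("continuous", "compare", normal=False))
```

Real projects involve more judgment than a lookup table (sample size, independence of observations, and so on), so treat this as a starting point rather than a verdict.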

Why These Tests Matter

Just like you’d pick the right tool for a job, selecting the appropriate statistical test helps you make valid and reliable conclusions about your data.

Whether you’re trying to prove a point, make a decision, or just understand the world a bit better, these tests are your gateway to insights.

By mastering these tests, you become a detective in the world of data, ready to uncover the truth behind the numbers!

Regression Analysis: Predicting the Future

Ever wondered if you could predict how much fun you’re going to have at a party based on the number of friends going, or how the amount of snacks available might affect the overall party vibe?

That’s where regression analysis comes into play, acting like a crystal ball for your data.

What is Regression Analysis?

Regression analysis is a powerful statistical method that allows you to examine the relationship between two or more variables of interest.

Think of it as detective work, where you’re trying to figure out if, how, and to what extent certain factors (like snacks and music volume) predict an outcome (like the fun level at a party).

The Two Main Characters: Independent and Dependent Variables

- Independent Variable(s): These are the predictors or factors that you suspect might influence the outcome. For example, the quantity of snacks.

- Dependent Variable: This is the outcome you’re interested in predicting. In our case, it could be the fun level of the party.

Linear Regression: The Straight Line Relationship

The most basic form of regression analysis is linear regression.

It predicts the outcome based on a linear relationship between the independent and dependent variables.

If you plot this on a graph, you’d ideally see a straight line where, as the amount of snacks increases, so does the fun level (hopefully!).

- Simple Linear Regression involves just one independent variable. It’s like saying, “Let’s see if just the number of snacks can predict the fun level.”

- Multiple Linear Regression takes it up a notch by including more than one independent variable. Now, you’re looking at whether the quantity of snacks, type of music, and number of guests together can predict the fun level.

Logistic Regression: When Outcomes are Either/Or

Not all predictions are about numbers.

Sometimes, you just want to know if something will happen or not—will the party be a hit or a flop?

Logistic regression is used for these binary outcomes.

Instead of predicting a precise fun level, it predicts the probability of the party being a hit based on the same predictors (snacks, music, guests).
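
Under the hood, logistic regression adds up the predictors into a score (the log-odds) and squeezes that score into a probability between 0 and 1. The sketch below shows only this prediction step. The coefficients are invented for illustration; in practice they would be estimated from data by a statistics library.

```python
import math


def hit_probability(snack_bags, guests,
                    intercept=-4.0, b_snacks=0.5, b_guests=0.1):
    """Probability that a party is a hit, using made-up coefficients."""
    log_odds = intercept + b_snacks * snack_bags + b_guests * guests
    return 1.0 / (1.0 + math.exp(-log_odds))  # logistic (sigmoid) function


small_party = hit_probability(snack_bags=2, guests=5)
big_party = hit_probability(snack_bags=8, guests=30)
print(f"small party: {small_party:.2f}, big party: {big_party:.2f}")
```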

Making Sense of the Results

- Coefficients: In regression analysis, each predictor has a coefficient, telling you how much the dependent variable is expected to change when that predictor changes by one unit, all else being equal.

- R-squared: This value tells you how much of the variation in your dependent variable can be explained by the independent variables. A higher R-squared means the model fits your data more closely, though adding predictors never lowers it, so a high value on its own doesn’t prove the model is a good one.
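
To see where coefficients and R-squared come from, here is a minimal sketch of a multiple linear regression fitted by ordinary least squares with NumPy. The party data is invented, and a dedicated statistics package would also report standard errors and p-values.

```python
import numpy as np

# Hypothetical data from eight parties.
snacks = np.array([2, 4, 3, 6, 5, 8, 7, 9], dtype=float)
guests = np.array([10, 12, 15, 20, 18, 25, 22, 30], dtype=float)
fun = np.array([4.0, 5.5, 5.0, 7.0, 6.5, 8.5, 8.0, 9.5])

# Design matrix: a column of ones for the intercept, then one column per predictor.
X = np.column_stack([np.ones_like(snacks), snacks, guests])
coef, *_ = np.linalg.lstsq(X, fun, rcond=None)

predicted = X @ coef
ss_res = np.sum((fun - predicted) ** 2)   # variation the model leaves unexplained
ss_tot = np.sum((fun - fun.mean()) ** 2)  # total variation in the fun level
r_squared = 1 - ss_res / ss_tot

print(f"intercept={coef[0]:.2f}, snacks={coef[1]:.2f}, guests={coef[2]:.2f}")
print(f"R-squared = {r_squared:.3f}")
```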

Why Regression Analysis Rocks

Regression analysis is like having a superpower. It helps you understand which factors matter most, which can be ignored, and how different factors come together to influence the outcome.

This insight is invaluable whether you’re planning a party, running a business, or conducting scientific research.

Bringing It All Together

Imagine you’ve gathered data on several parties, including the number of guests, type of music, and amount of snacks, along with a fun level rating for each.

By running a regression analysis, you can start to predict future parties’ success, tailoring your planning to maximize fun.

It’s a practical tool for making informed decisions based on past data, helping you throw legendary parties, optimize business strategies, or understand complex relationships in your research.

In essence, regression analysis helps turn your data into actionable insights, guiding you towards smarter decisions and better predictions.

So next time you’re knee-deep in data, remember: regression analysis might just be the key to unlocking its secrets.

Non-parametric Methods: Playing By Different Rules

So far, we’ve talked a lot about statistical methods that rely on certain assumptions about your data, like it being normally distributed (forming that classic bell curve) or having a specific scale of measurement.

But what happens when your data doesn’t fit these molds?

Maybe the scores from your last party’s karaoke contest are all over the place, or you’re trying to compare the popularity of various party games but only have rankings, not scores.

This is where non-parametric methods come to the rescue.

Breaking Free from Assumptions

Non-parametric methods are the rebels of the statistical world.

They don’t assume your data follows a normal distribution or that it meets strict requirements regarding measurement scales.

These methods are perfect for dealing with ordinal data (like rankings), nominal data (like categories), or when your data is skewed or has outliers that would throw off other tests.

When to Use Non-parametric Methods?

- Your data is not normally distributed, and transformations don’t help.

- You have ordinal data (like survey responses that range from “Strongly Disagree” to “Strongly Agree”).

- You’re dealing with ranks or categories rather than precise measurements.

- Your sample size is small, making it hard to check whether the assumptions required for parametric tests actually hold.

Some Popular Non-parametric Tests

- Mann-Whitney U Test: Think of it as the non-parametric counterpart to the independent samples t-test. Use this when you want to compare the differences between two independent groups on a ranking or ordinal scale.

- Kruskal-Wallis Test: This is your go-to when you have three or more groups to compare, and it’s similar to an ANOVA but for ranked/ordinal data or when your data doesn’t meet ANOVA’s assumptions.

- Spearman’s Rank Correlation: When you want to see if there’s a relationship between two sets of rankings, Spearman’s got your back. It’s like Pearson’s correlation for continuous data but designed for ranks.

- Wilcoxon Signed-Rank Test: Use this for comparing two related samples when you can’t use the paired t-test, typically because the differences between pairs are not normally distributed.
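
All four tests are available in `scipy.stats`. The sketch below runs each one on small invented datasets (party game ratings, judges’ rankings, and before/after scores), so the numbers are only there to show the calls.

```python
from scipy import stats

# Hypothetical 1-5 ratings for three party games.
game_a = [3, 4, 2, 5, 4, 3, 4]
game_b = [1, 2, 2, 3, 1, 2, 3]
game_c = [5, 4, 5, 3, 5, 4, 5]

# Mann-Whitney U: two independent groups.
u_stat, u_p = stats.mannwhitneyu(game_a, game_b, alternative="two-sided")

# Kruskal-Wallis: three or more independent groups.
h_stat, h_p = stats.kruskal(game_a, game_b, game_c)

# Spearman: do two judges rank six karaoke singers similarly?
judge_1 = [1, 2, 3, 4, 5, 6]
judge_2 = [2, 1, 3, 5, 4, 6]
rho, rho_p = stats.spearmanr(judge_1, judge_2)

# Wilcoxon signed-rank: the same eight guests scored before and after.
before = [4.0, 5.5, 6.1, 5.2, 4.8, 6.5, 5.0, 4.4]
after = [6.1, 6.0, 7.9, 6.4, 7.5, 7.4, 6.6, 7.2]
w_stat, w_p = stats.wilcoxon(before, after)

print(f"Mann-Whitney p = {u_p:.4f}, Kruskal-Wallis p = {h_p:.4f}")
print(f"Spearman rho = {rho:.3f}, Wilcoxon p = {w_p:.4f}")
```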

The Beauty of Flexibility

The real charm of non-parametric methods is their flexibility.

They let you work with data that’s not textbook perfect, which is often the case in the real world.

Whether you’re analyzing customer satisfaction surveys, comparing the effectiveness of different marketing strategies, or just trying to figure out if people prefer pizza or tacos at parties, non-parametric tests provide a robust way to get meaningful insights.

Keeping It Real

It’s important to remember that while non-parametric methods are incredibly useful, they also come with their own limitations.

They often have less statistical power when the assumptions of a parametric test actually hold, meaning you might need a larger effect or a larger sample to detect a significant result compared to parametric tests.

Plus, because they often work with ranks rather than actual values, some information about your data might get lost in translation.

Non-parametric methods are your statistical toolbox’s Swiss Army knife, ready to tackle data that doesn’t fit into the neat categories required by more traditional tests.

They remind us that in the world of data analysis, there’s more than one way to uncover insights and make informed decisions.

So, the next time you’re faced with skewed distributions or rankings instead of scores, remember that non-parametric methods have got you covered, offering a way to navigate the complexities of real-world data.

Data Cleaning and Preparation: The Unsung Heroes of Data Analysis

Before any party can start, there’s always a bit of housecleaning to do—sweeping the floors, arranging the furniture, and maybe even hiding those laundry piles you’ve been ignoring all week.

Similarly, in the world of data analysis, before we can dive into the fun stuff like statistical tests and predictive modeling, we need to roll up our sleeves and get our data nice and tidy.

This process of data cleaning and preparation might not be the most glamorous part of data science, but it’s absolutely critical.

Let’s break down what this involves and why it’s so important.

Why Clean and Prepare Data?

Imagine trying to analyze party RSVPs when half the responses are “yes,” a quarter are “Y,” and the rest are a creative mix of “yup,” “sure,” and “why not?”

Without standardization, it’s hard to get a clear picture of how many guests to expect.

The same goes for any data set. Cleaning ensures that your data is consistent, accurate, and ready for analysis.

Preparation involves transforming this clean data into a format that’s useful for your specific analysis needs.

The Steps to Sparkling Clean Data

- Dealing with Missing Values: Sometimes, data is incomplete. Maybe a survey respondent skipped a question, or a sensor failed to record a reading. You’ll need to decide whether to fill in these gaps (imputation), ignore them, or drop the observations altogether.

- Identifying and Handling Outliers: Outliers are data points that are significantly different from the rest. They might be errors, or they might be valuable insights. The challenge is determining which is which and deciding how to handle them—remove, adjust, or analyze separately.

- Correcting Inconsistencies: This is like making sure all your RSVPs are in the same format. It could involve standardizing text entries, correcting typos, or converting all measurements to the same units.

- Formatting Data: Your analysis might require data in a specific format. This could mean transforming data types (e.g., converting dates into a uniform format) or restructuring data tables to make them easier to work with.

- Reducing Dimensionality: Sometimes, your data set might have more information than you actually need. Reducing dimensionality (through methods like Principal Component Analysis) can help simplify your data while retaining most of the variation in it.

- Creating New Variables: You might need to derive new variables from your existing ones to better capture the relationships in your data. For example, turning raw survey responses into a numerical satisfaction score.
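
A few of these steps can be sketched with pandas. The RSVP table below is invented, and the specific choices (median imputation, the 1.5 × IQR rule for flagging outliers) are common defaults assumed for illustration rather than the only reasonable options.

```python
import pandas as pd

# Hypothetical messy RSVP data.
raw = pd.DataFrame({
    "rsvp": ["yes", "Y", "yup", "no", " Sure ", None, "N"],
    "age": [22, 23, None, 21, 24, 22, 78],
})

YES_WORDS = {"yes", "y", "yup", "sure", "why not"}
NO_WORDS = {"no", "n"}


def standardize(answer):
    """Correct inconsistencies: map free-text RSVPs to yes / no / unknown."""
    if pd.isna(answer):
        return "unknown"
    text = str(answer).strip().lower()
    if text in YES_WORDS:
        return "yes"
    if text in NO_WORDS:
        return "no"
    return "unknown"


clean = raw.copy()
clean["rsvp"] = clean["rsvp"].map(standardize)

# Missing values: fill the missing age with the median of the known ages.
clean["age"] = clean["age"].fillna(clean["age"].median())

# Outliers: flag ages outside 1.5 * IQR of the quartiles instead of deleting them.
q1, q3 = clean["age"].quantile(0.25), clean["age"].quantile(0.75)
iqr = q3 - q1
clean["age_outlier"] = ~clean["age"].between(q1 - 1.5 * iqr, q3 + 1.5 * iqr)

print(clean)
```

Flagging rather than dropping the 78-year-old keeps the decision visible: grandma may be an error, or she may be a real guest.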

The Tools of the Trade

There are many tools available to help with data cleaning and preparation, ranging from spreadsheet software like Excel to programming languages like Python and R.

These tools offer functions and libraries specifically designed to make data cleaning as painless as possible.

Why It Matters

Skipping the data cleaning and preparation stage is like trying to cook without prepping your ingredients first.

Sure, you might end up with something edible, but it’s not going to be as good as it could have been.

Clean and well-prepared data leads to more accurate, reliable, and meaningful analysis results.

It’s the foundation upon which all good data analysis is built.

Data cleaning and preparation might not be the flashiest part of data science, but it’s where all successful data analysis projects begin.

By taking the time to thoroughly clean and prepare your data, you’re setting yourself up for clearer insights, better decisions, and, ultimately, more impactful outcomes.

Software Tools for Statistical Analysis: Your Digital Assistants

Diving into the world of data without the right tools can feel like trying to cook a gourmet meal without a kitchen.

Just as you need pots, pans, and a stove to create a culinary masterpiece, you need the right software tools to analyze data and uncover the insights hidden within.

These digital assistants range from user-friendly applications for beginners to powerful suites for the pros.

Let’s take a closer look at some of the most popular software tools for statistical analysis.

R and RStudio: The Dynamic Duo

- R is like the Swiss Army knife of statistical analysis. It’s a programming language designed specifically for data analysis, graphics, and statistical modeling. Think of R as the kitchen where you’ll be cooking up your data analysis.

- RStudio is an integrated development environment (IDE) for R. It’s like having the best kitchen setup with organized countertops (your coding space) and all your tools and ingredients within reach (packages and datasets).

Why They Rock:

R is incredibly powerful and can handle almost any data analysis task you throw at it, from the basics to the most advanced statistical models.

Plus, there’s a vast community of users, which means a wealth of tutorials, forums, and free packages to add on.

Python with pandas and scipy: The Versatile Virtuoso

- Python is a general-purpose programming language, and with the right libraries it becomes an excellent tool for data analysis. It’s like a kitchen that’s not only great for baking but also equipped for gourmet cooking.

- pandas is a library that provides easy-to-use data structures and data analysis tools for Python. Imagine it as your sous-chef, helping you to slice and dice data with ease.

- scipy is another library used for scientific and technical computing. It’s like having a set of precision knives for the more intricate tasks.

Why They Rock: Python is known for its readability and simplicity, making it accessible for beginners. When combined with pandas and scipy, it becomes a powerhouse for data manipulation, analysis, and visualization.

SPSS: The Point-and-Click Professional

SPSS (Statistical Package for the Social Sciences) is a software package used for interactive, or batched, statistical analysis. Long produced by SPSS Inc., it was acquired by IBM in 2009.

Why It Rocks: SPSS is particularly user-friendly with its point-and-click interface, making it a favorite among non-programmers and researchers in the social sciences. It’s like having a kitchen gadget that does the job with the push of a button—no manual setup required.

SAS: The Corporate Chef

SAS (Statistical Analysis System) is a software suite developed for advanced analytics, multivariate analysis, business intelligence, data management, and predictive analytics.

Why It Rocks: SAS is a powerhouse in the corporate world, known for its stability, deep analytical capabilities, and support for large data sets. It’s like the industrial kitchen used by professional chefs to serve hundreds of guests.

Excel: The Accessible Apprentice

Excel might not be a specialized statistical software, but it’s widely accessible and capable of handling basic statistical analyses. Think of Excel as the microwave in your kitchen—it might not be fancy, but it gets the job done for quick and simple tasks.

Why It Rocks: Almost everyone has access to Excel and knows the basics, making it a great starting point for those new to data analysis. Plus, with add-ons like the Analysis ToolPak, Excel’s capabilities can be extended further into statistical territory.

Choosing Your Tool

Selecting the right software tool for statistical analysis is like choosing the right kitchen for your cooking style—it depends on your needs, expertise, and the complexity of your recipes (data).

Whether you’re a coding chef ready to tackle R or Python, or someone who prefers the straightforwardness of SPSS or Excel, there’s a tool out there that’s perfect for your data analysis kitchen.

Ethical Considerations

Embarking on a data analysis journey is like setting sail on the vast ocean of information.

Just as a captain needs a compass to navigate the seas safely and responsibly, a data analyst requires a strong sense of ethics to guide their exploration of data.

Ethical considerations in data analysis are the moral compass that ensures we respect privacy, consent, and integrity while uncovering the truths hidden within data. Let’s delve into why ethics are so crucial and what principles you should keep in mind.

Respect for Privacy

Imagine you’ve found a diary filled with personal secrets.

Reading it without permission would be a breach of privacy.

Similarly, when you’re handling data, especially personal or sensitive information, it’s essential to ensure that privacy is protected.

This means not only securing data against unauthorized access but also anonymizing data to prevent individuals from being identified.
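
One small, practical step in that direction is replacing direct identifiers with tokens before analysis. The sketch below uses a keyed hash from Python’s standard library. The key, field names, and guest records are all hypothetical. Strictly speaking this is pseudonymization, not full anonymization, since people may still be identifiable from other columns.

```python
import hashlib
import hmac

# Hypothetical secret key; in practice, store it separately from the data.
SECRET_KEY = b"replace-with-a-real-secret"


def pseudonymize(identifier: str) -> str:
    """Replace a direct identifier with a keyed SHA-256 token."""
    normalized = identifier.strip().lower().encode("utf-8")
    return hmac.new(SECRET_KEY, normalized, hashlib.sha256).hexdigest()


guests = [
    {"email": "alex@example.com", "fun_rating": 8},
    {"email": "sam@example.com", "fun_rating": 6},
]

# Keep the analysis columns, drop the email, and add a stable token instead.
safe_guests = [
    {"guest_id": pseudonymize(g["email"]), "fun_rating": g["fun_rating"]}
    for g in guests
]

print(safe_guests)
```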

Informed Consent

Before you can set sail, you need the ship owner’s permission.

In the world of data, this translates to informed consent. Participants should be fully aware of what their data will be used for and voluntarily agree to participate.

This is particularly important in research or when collecting data directly from individuals. It’s like asking for permission before you start the journey.

Data Integrity

Maintaining data integrity is like keeping the ship’s log accurate and unaltered during your voyage.

It involves ensuring the data is not corrupted or modified inappropriately and that any data analysis is conducted accurately and reliably.

Tampering with data or cherry-picking results to fit a narrative is not just unethical—it’s like falsifying the ship’s log, leading to mistrust and potentially dangerous outcomes.

Avoiding Bias

The sea is vast, and your compass must be calibrated correctly to avoid going off course. Similarly, guarding against bias in data analysis helps keep your findings valid and trustworthy.

This means being aware of and actively addressing any personal, cultural, or statistical biases that might skew your analysis.

It’s about striving for objectivity and ensuring your journey is guided by truth, not preconceived notions.

Transparency and Accountability

A trustworthy captain is open about their navigational choices and ready to take responsibility for them.

In data analysis, this translates to transparency about your methods and accountability for your conclusions.

Sharing your methodologies, data sources, and any limitations of your analysis helps build trust and allows others to verify or challenge your findings.

Ethical Use of Findings

Finally, just as a captain must consider the impact of their journey on the wider world, you must consider how your data analysis will be used.

This means thinking about the potential consequences of your findings and striving to ensure they are used to benefit, not harm, society.

It’s about being mindful of the broader implications of your work and using data for good.

Navigating with a Moral Compass

In the realm of data analysis, ethical considerations form the moral compass that guides us through complex moral waters.

They ensure that our work respects individuals’ rights, contributes positively to society, and upholds the highest standards of integrity and professionalism.

Just as a captain navigates the seas with respect for the ocean and its dangers, a data analyst must navigate the world of data with a deep commitment to ethical principles.

This commitment ensures that the insights gained from data analysis serve to enlighten and improve, rather than exploit or harm.

Conclusion and Key Takeaways

And there you have it—a whirlwind tour through the fascinating landscape of statistical methods for data analysis.

From the grounding principles of descriptive and inferential statistics to the nuanced details of regression analysis and beyond, we’ve explored the tools and ethical considerations that guide us in turning raw data into meaningful insights.

The Takeaway

Think of data analysis as embarking on a grand adventure, one where numbers and facts are your map and compass.

Just as every explorer needs to understand the terrain, every aspiring data analyst must grasp these foundational concepts.

Whether it’s summarizing data sets with descriptive statistics, making predictions with inferential statistics, choosing the right statistical test, or navigating the ethical considerations that ensure our analyses benefit society, each aspect is a crucial step on your journey.

The Importance of Preparation

Remember, the key to a successful voyage is preparation.

Cleaning and preparing your data sets the stage for a smooth journey, while choosing the right software tools ensures you have the best equipment at your disposal.

And just as every responsible navigator respects the sea, every data analyst must navigate the ethical dimensions of their work with care and integrity.

Charting Your Course

As you embark on your own data analysis adventures, remember that the path you chart is unique to you.

Your questions will guide your journey, your curiosity will fuel your exploration, and the insights you gain will be your treasure.

The world of data is vast and full of mysteries waiting to be uncovered. With the tools and principles we’ve discussed, you’re well-equipped to start uncovering those mysteries, one data set at a time.

The Journey Ahead

The journey of statistical methods for data analysis is ongoing, and the landscape is ever-evolving.

As new methods emerge and our understanding deepens, there will always be new horizons to explore and new insights to discover.

But the fundamentals we’ve covered will remain your steadfast guide, helping you navigate the challenges and opportunities that lie ahead.

So set your sights on the questions that spark your curiosity, arm yourself with the tools of the trade, and embark on your data analysis journey with confidence.

About The Author

Silvia Valcheva

Silvia Valcheva is a digital marketer with over a decade of experience creating content for the tech industry. She has a strong passion for writing about emerging software and technologies such as big data, AI (Artificial Intelligence), IoT (Internet of Things), process automation, etc.


Indian J Anaesth. 2016 Sep; 60(9)

Basic statistical tools in research and data analysis

Zulfiqar Ali

Department of Anaesthesiology, Division of Neuroanaesthesiology, Sheri Kashmir Institute of Medical Sciences, Soura, Srinagar, Jammu and Kashmir, India

S Bala Bhaskar

1 Department of Anaesthesiology and Critical Care, Vijayanagar Institute of Medical Sciences, Bellary, Karnataka, India

Statistical methods involved in carrying out a study include planning, designing, collecting data, analysing, drawing meaningful interpretations and reporting the research findings. Statistical analysis gives meaning to otherwise meaningless numbers, thereby breathing life into lifeless data. The results and inferences are precise only if proper statistical tests are used. This article will try to acquaint the reader with the basic research tools that are utilised while conducting various studies. The article covers a brief outline of the variables, an understanding of quantitative and qualitative variables and the measures of central tendency. An idea of sample size estimation, power analysis and statistical errors is given. Finally, there is a summary of parametric and non-parametric tests used for data analysis.

INTRODUCTION

Statistics is a branch of science that deals with the collection, organisation, analysis of data and drawing of inferences from the samples to the whole population.[ 1 ] This requires a proper design of the study, an appropriate selection of the study sample and choice of a suitable statistical test. An adequate knowledge of statistics is necessary for proper designing of an epidemiological study or a clinical trial. Improper statistical methods may result in erroneous conclusions which may lead to unethical practice.[ 2 ]

A variable is a characteristic that varies from one individual member of a population to another.[ 3 ] Variables such as height and weight are measured by some type of scale, convey quantitative information and are called quantitative variables. Sex and eye colour give qualitative information and are called qualitative variables[ 3 ] [ Figure 1 ].

Classification of variables

Quantitative variables

Quantitative or numerical data are subdivided into discrete and continuous measurements. Discrete numerical data are recorded as a whole number such as 0, 1, 2, 3,… (integer), whereas continuous data can assume any value. Observations that can be counted constitute the discrete data and observations that can be measured constitute the continuous data. Examples of discrete data are number of episodes of respiratory arrests or the number of re-intubations in an intensive care unit. Similarly, examples of continuous data are the serial serum glucose levels, partial pressure of oxygen in arterial blood and the oesophageal temperature.

A hierarchical scale of increasing precision can be used for observing and recording the data which is based on categorical, ordinal, interval and ratio scales [ Figure 1 ].

Categorical or nominal variables are unordered. The data are merely classified into categories and cannot be arranged in any particular order. If only two categories exist (as in gender: male and female), the data are called dichotomous (or binary). The various causes of re-intubation in an intensive care unit (upper airway obstruction, impaired clearance of secretions, hypoxemia, hypercapnia, pulmonary oedema and neurological impairment) are examples of categorical variables.

Ordinal variables have a clear ordering between the variables. However, the ordered data may not have equal intervals. Examples are the American Society of Anesthesiologists status or Richmond agitation-sedation scale.

Interval variables are similar to an ordinal variable, except that the intervals between the values of the interval variable are equally spaced. A good example of an interval scale is the Fahrenheit degree scale used to measure temperature. With the Fahrenheit scale, the difference between 70° and 75° is equal to the difference between 80° and 85°: The units of measurement are equal throughout the full range of the scale.

Ratio scales are similar to interval scales, in that equal differences between scale values have equal quantitative meaning. However, ratio scales also have a true zero point, which gives them an additional property. For example, the system of centimetres is an example of a ratio scale. There is a true zero point and the value of 0 cm means a complete absence of length. The thyromental distance of 6 cm in an adult may be twice that of a child in whom it may be 3 cm.

STATISTICS: DESCRIPTIVE AND INFERENTIAL STATISTICS

Descriptive statistics[ 4 ] try to describe the relationship between variables in a sample or population. Descriptive statistics provide a summary of data in the form of mean, median and mode. Inferential statistics[ 4 ] use a random sample of data taken from a population to describe and make inferences about the whole population. They are valuable when it is not possible to examine each member of an entire population. Examples of descriptive and inferential statistics are illustrated in Table 1 .

Example of descriptive and inferential statistics

Descriptive statistics

The extent to which the observations cluster around a central location is described by the central tendency and the spread towards the extremes is described by the degree of dispersion.

Measures of central tendency

The measures of central tendency are mean, median and mode.[ 6 ] Mean (or the arithmetic average) is the sum of all the scores divided by the number of scores. The mean may be influenced profoundly by extreme values. For example, the average stay of organophosphorus poisoning patients in ICU may be influenced by a single patient who stays in ICU for around 5 months because of septicaemia. The extreme values are called outliers. The formula for the mean is

$$\bar{x} = \frac{\sum x}{n}$$

where x = each observation and n = number of observations. Median[ 6 ] is defined as the middle of a distribution in ranked data (with half of the values in the sample above and half below the median value), while mode is the most frequently occurring value in a distribution.

Range defines the spread, or variability, of a sample.[ 7 ] It is described by the minimum and maximum values of the variables. If we rank the data and, after ranking, group the observations into percentiles, we get better information about the pattern of spread of the variables. In percentiles, we divide the ranked observations into 100 equal parts. We can then describe the 25th, 50th, 75th or any other percentile. The median is the 50th percentile. The interquartile range covers the middle 50% of the observations about the median (25th to 75th percentile).

Variance[ 7 ] is a measure of how spread out the distribution is. It gives an indication of how closely individual observations cluster about the mean value. The variance of a population is defined by the following formula:

$$\sigma^2 = \frac{\sum_{i=1}^{N} (X_i - \bar{X})^2}{N}$$

where $\sigma^2$ is the population variance, $\bar{X}$ is the population mean, $X_i$ is the $i$th element from the population and $N$ is the number of elements in the population. The variance of a sample is defined by a slightly different formula:

$$s^2 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})^2}{n - 1}$$

where $s^2$ is the sample variance, $\bar{x}$ is the sample mean, $x_i$ is the $i$th element from the sample and $n$ is the number of elements in the sample. The formula for the variance of a population has $N$ as the denominator, whereas the sample formula uses $n - 1$. The expression $n - 1$ is known as the degrees of freedom and is one less than the number of observations in the sample. Each observation is free to vary, except the last one, which must take a defined value once the mean is fixed. The variance is measured in squared units. To make the interpretation of the data simple and to retain the basic unit of observation, the square root of variance is used. The square root of the variance is the standard deviation (SD).[ 8 ] The SD of a population is defined by the following formula:

$$\sigma = \sqrt{\frac{\sum_{i=1}^{N} (X_i - \bar{X})^2}{N}}$$

where $\sigma$ is the population SD, $\bar{X}$ is the population mean, $X_i$ is the $i$th element from the population and $N$ is the number of elements in the population. The SD of a sample is defined by a slightly different formula:

$$s = \sqrt{\frac{\sum_{i=1}^{n} (x_i - \bar{x})^2}{n - 1}}$$

where $s$ is the sample SD, $\bar{x}$ is the sample mean, $x_i$ is the $i$th element from the sample and $n$ is the number of elements in the sample. An example of the calculation of variance and SD is illustrated in Table 2 .
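The population and sample formulas above can be sketched in a few lines of Python. This is a minimal illustration, not part of the original article: the function names and the data values are hypothetical, and the only difference between the two cases is the denominator (N versus n − 1).

```python
import math

def mean(values):
    """Arithmetic mean: sum of the observations divided by their number."""
    return sum(values) / len(values)

def variance(values, sample=True):
    """Variance with denominator n - 1 (sample) or N (population)."""
    m = mean(values)
    sum_sq = sum((x - m) ** 2 for x in values)
    denominator = len(values) - 1 if sample else len(values)
    return sum_sq / denominator

def std_dev(values, sample=True):
    """Standard deviation: the square root of the variance."""
    return math.sqrt(variance(values, sample))

# Hypothetical observations, chosen only for illustration.
data = [2, 4, 4, 4, 5, 5, 7, 9]
print(mean(data), variance(data, sample=False), std_dev(data, sample=False))
print(variance(data), std_dev(data))
```

Treating the same numbers as a sample rather than a whole population gives a slightly larger variance, because the sum of squared deviations is divided by n − 1 instead of N.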

Example of mean, variance, standard deviation

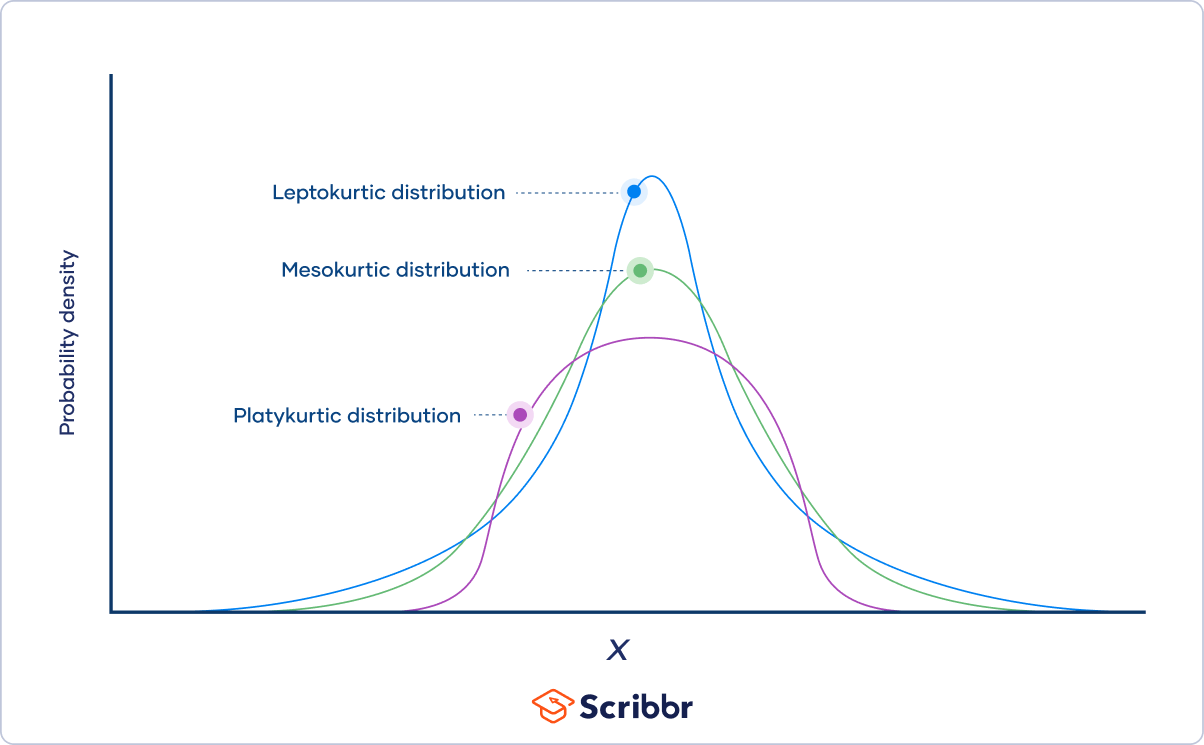

Normal distribution or Gaussian distribution

Most biological variables cluster around a central value, with symmetrical positive and negative deviations about this point.[ 1 ] The standard normal distribution curve is symmetrical and bell-shaped. In a normal distribution curve, about 68% of the scores are within 1 SD of the mean. Around 95% of the scores are within 2 SDs of the mean and about 99.7% within 3 SDs of the mean [ Figure 2 ].

Normal distribution curve
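The proportions quoted for 1, 2 and 3 SDs can be checked numerically. The sketch below uses `statistics.NormalDist` from the Python standard library; the helper name `coverage_within` is an assumption made for this illustration.

```python
from statistics import NormalDist

def coverage_within(k, dist=NormalDist()):
    """Probability that a normally distributed value lies within k SDs of the mean."""
    lower = dist.mean - k * dist.stdev
    upper = dist.mean + k * dist.stdev
    return dist.cdf(upper) - dist.cdf(lower)

for k in (1, 2, 3):
    print(f"within {k} SD: {coverage_within(k):.4f}")
```

Because the result depends only on the number of SDs, the same proportions hold for any mean and SD, not just the standard normal curve.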

Skewed distribution

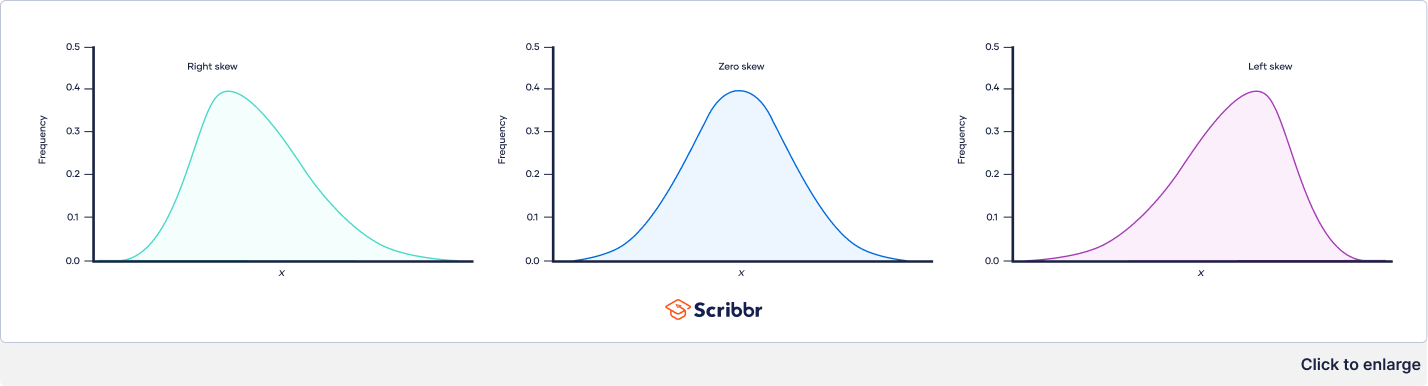

It is a distribution with an asymmetry of the variables about its mean. In a negatively skewed distribution [ Figure 3 ], the mass of the distribution is concentrated on the right of the figure, leading to a longer left tail. In a positively skewed distribution [ Figure 3 ], the mass of the distribution is concentrated on the left of the figure, leading to a longer right tail.

Curves showing negatively skewed and positively skewed distribution
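One common way to put a number on this asymmetry is the moment coefficient of skewness, which the article itself does not define; it is introduced here only as an illustration. The sketch assumes the population form (third central moment divided by the 1.5 power of the second), and the data are hypothetical.

```python
def skewness(values):
    """Moment coefficient of skewness: m3 / m2**1.5 (population form)."""
    n = len(values)
    m = sum(values) / n
    m2 = sum((x - m) ** 2 for x in values) / n  # second central moment
    m3 = sum((x - m) ** 3 for x in values) / n  # third central moment
    return m3 / m2 ** 1.5

# Hypothetical data: one large value stretches the right tail.
right_tailed = [1, 2, 2, 3, 3, 3, 4, 10]
left_tailed = [-x for x in right_tailed]  # mirror image, longer left tail

print(skewness(right_tailed))  # positive: positively skewed
print(skewness(left_tailed))   # negative: negatively skewed
```

A value near zero suggests a roughly symmetrical distribution; the sign indicates the side of the longer tail.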

Inferential statistics

In inferential statistics, data are analysed from a sample to make inferences in the larger collection of the population. The purpose is to answer or test the hypotheses. A hypothesis (plural hypotheses) is a proposed explanation for a phenomenon. Hypothesis tests are thus procedures for making rational decisions about the reality of observed effects.

Probability is the measure of the likelihood that an event will occur. Probability is quantified as a number between 0 and 1 (where 0 indicates impossibility and 1 indicates certainty).

In inferential statistics, the term ‘null hypothesis’ (H0, read ‘H-naught’ or ‘H-null’) denotes that there is no relationship (difference) between the population variables in question.[ 9 ]

The alternative hypothesis (H1 or Ha) denotes that a relationship between the variables is expected to be true.[ 9 ]

The P value (or the calculated probability) is the probability of obtaining a result at least as extreme as the one observed, by chance alone, if the null hypothesis is true. The P value is a number between 0 and 1 and is interpreted by researchers in deciding whether to reject or retain the null hypothesis [ Table 3 ].

P values with interpretation

If the P value is less than the arbitrarily chosen value (known as α or the significance level), the null hypothesis (H0) is rejected [ Table 4 ]. However, if the null hypothesis (H0) is incorrectly rejected, this is known as a Type I error.[ 11 ] Further details regarding alpha error, beta error and sample size calculation and factors influencing them are dealt with in another section of this issue by Das S et al .[ 12 ]

Illustration for null hypothesis
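The decision rule described above can be sketched as follows. The example assumes a test statistic that follows the standard normal distribution under H0 (a z statistic), which is a simplification chosen for illustration; the function names and the default α of 0.05 are assumptions, not part of the article.

```python
from statistics import NormalDist

def two_sided_p_from_z(z):
    """Two-sided P value for a z statistic, assuming a standard normal null distribution."""
    return 2 * (1 - NormalDist().cdf(abs(z)))

def decide(p_value, alpha=0.05):
    """Reject H0 when the P value falls below the chosen significance level."""
    return "reject H0" if p_value < alpha else "retain H0"

p = two_sided_p_from_z(2.5)  # hypothetical observed statistic
print(round(p, 4), decide(p))
```

Choosing α = 0.05 means accepting that, when H0 is actually true, about 5% of such tests will reject it anyway, which is the Type I error rate.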

PARAMETRIC AND NON-PARAMETRIC TESTS

Numerical data (quantitative variables) that are normally distributed are analysed with parametric tests.[ 13 ]

Two most basic prerequisites for parametric statistical analysis are:

- The assumption of normality which specifies that the means of the sample group are normally distributed

- The assumption of equal variance which specifies that the variances of the samples and of their corresponding population are equal.

However, if the distribution of the sample is skewed towards one side or the distribution is unknown due to the small sample size, non-parametric[ 14 ] statistical techniques are used. Non-parametric tests are used to analyse ordinal and categorical data.

Parametric tests

The parametric tests assume that the data are on a quantitative (numerical) scale, with a normal distribution of the underlying population. The samples have the same variance (homogeneity of variances). The samples are randomly drawn from the population, and the observations within a group are independent of each other. The commonly used parametric tests are the Student's t -test, analysis of variance (ANOVA) and repeated measures ANOVA.

Student's t -test

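Student's t-test is used to test the null hypothesis that there is no difference between the means of the two groups. It is used in three circumstances:

- To test if a sample mean (as an estimate of a population mean) differs significantly from a given population mean (the one-sample t-test).

The formula for the one-sample t-test is:

$$t = \frac{\bar{X} - \mu}{SE}$$

where $\bar{X}$ = sample mean, $\mu$ = population mean and SE = standard error of the mean.

- To test if the population means estimated by two independent samples differ significantly (the unpaired t-test).

The formula for the unpaired t-test is:

$$t = \frac{\bar{X}_1 - \bar{X}_2}{SE}$$

where $\bar{X}_1 - \bar{X}_2$ is the difference between the means of the two groups and SE denotes the standard error of the difference.

- To test if the population means estimated by two dependent samples differ significantly (the paired t-test). A usual setting for the paired t-test is when measurements are made on the same subjects before and after a treatment.

The formula for the paired t-test is:

$$t = \frac{\bar{d}}{SE}$$

where $\bar{d}$ is the mean difference and SE denotes the standard error of this difference.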
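The three forms of the t statistic can be sketched with the standard library alone. Two points are assumptions of this sketch rather than statements from the article: the unpaired version uses a pooled variance (in line with the equal-variance assumption of parametric tests), and only the statistic is computed, since turning it into a P value requires the t distribution, which the standard library does not provide. All data are hypothetical.

```python
import math
from statistics import mean, stdev

def one_sample_t(sample, mu):
    """t = (sample mean - population mean) / standard error of the mean."""
    se = stdev(sample) / math.sqrt(len(sample))
    return (mean(sample) - mu) / se

def unpaired_t(a, b):
    """t for two independent samples, using a pooled variance (assumed here)."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * stdev(a) ** 2 + (nb - 1) * stdev(b) ** 2) / (na + nb - 2)
    se = math.sqrt(pooled_var * (1 / na + 1 / nb))
    return (mean(a) - mean(b)) / se

def paired_t(before, after):
    """t = mean of the paired differences / standard error of those differences."""
    diffs = [y - x for x, y in zip(before, after)]
    se = stdev(diffs) / math.sqrt(len(diffs))
    return mean(diffs) / se

# Hypothetical measurements on the same five subjects before and after a treatment.
before = [10, 12, 9, 11, 13]
after = [12, 14, 10, 13, 16]
print(paired_t(before, after))
```

The statistic is then compared against the t distribution with the appropriate degrees of freedom (n − 1 for the one-sample and paired forms, n1 + n2 − 2 for the pooled unpaired form).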

The group variances can be compared using the F-test. The F-test is the ratio of variances (var 1/var 2). If F differs significantly from 1.0, then it is concluded that the group variances differ significantly.

Analysis of variance

The Student's t -test cannot be used for comparison of three or more groups. The purpose of ANOVA is to test if there is any significant difference between the means of two or more groups.

In ANOVA, we study two variances – (a) between-group variability and (b) within-group variability. The within-group variability (error variance) is the variation that cannot be accounted for in the study design. It is based on random differences present in our samples.

However, the between-group (or effect variance) is the result of our treatment. These two estimates of variances are compared using the F-test.

A simplified formula for the F statistic is:

$$F = \frac{MS_b}{MS_w}$$

where $MS_b$ is the mean squares between the groups and $MS_w$ is the mean squares within groups.
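A minimal sketch of the F statistic for a one-way ANOVA follows. The function name and the three small groups are hypothetical; the mean squares are obtained by dividing each sum of squares by its degrees of freedom (k − 1 between groups, N − k within groups).

```python
def one_way_anova_f(groups):
    """F = MS between groups / MS within groups for a one-way layout."""
    all_values = [x for g in groups for x in g]
    grand_mean = sum(all_values) / len(all_values)
    k = len(groups)            # number of groups
    n_total = len(all_values)  # total number of observations

    # Between-group sum of squares: group means around the grand mean.
    ss_between = sum(len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: observations around their own group mean.
    ss_within = sum((x - sum(g) / len(g)) ** 2 for g in groups for x in g)

    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n_total - k)
    return ms_between / ms_within

groups = [[1, 2, 3], [2, 3, 4], [3, 4, 5]]  # hypothetical data
print(one_way_anova_f(groups))
```

A large F indicates that the variation between group means is large relative to the random variation inside the groups.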

Repeated measures analysis of variance

As with ANOVA, repeated measures ANOVA analyses the equality of means of three or more groups. However, a repeated measure ANOVA is used when all variables of a sample are measured under different conditions or at different points in time.

As the variables are measured from a sample at different points of time, the measurement of the dependent variable is repeated. Using a standard ANOVA in this case is not appropriate because it fails to model the correlation between the repeated measures: The data violate the ANOVA assumption of independence. Hence, in the measurement of repeated dependent variables, repeated measures ANOVA should be used.

Non-parametric tests

When the assumptions of normality are not met, and the sample means are not normally distributed, parametric tests can lead to erroneous results. Non-parametric tests (distribution-free tests) are used in such situations as they do not require the normality assumption.[ 15 ] Non-parametric tests may fail to detect a significant difference when compared with a parametric test. That is, they usually have less power.

As is done for the parametric tests, the test statistic is compared with known values for the sampling distribution of that statistic and the null hypothesis is accepted or rejected. The types of non-parametric analysis techniques and the corresponding parametric analysis techniques are delineated in Table 5 .

Analogue of parametric and non-parametric tests

Median test for one sample: The sign test and Wilcoxon's signed rank test

The sign test and Wilcoxon's signed rank test are used for median tests of one sample. These tests examine whether one instance of sample data is greater or smaller than the median reference value.

This test examines the hypothesis about the median θ0 of a population. It tests the null hypothesis H0: θ = θ0. When the observed value (Xi) is greater than the reference value (θ0), it is marked with a + sign. If the observed value is smaller than the reference value, it is marked with a − sign. If the observed value is equal to the reference value (θ0), it is eliminated from the sample.

If the null hypothesis is true, there will be an approximately equal number of + signs and − signs.

The sign test ignores the actual values of the data and only uses + or − signs. Therefore, it is useful when it is difficult to measure the values.
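The procedure can be sketched as below. Under H0 each remaining observation is equally likely to be a + or a −, so the count of + signs follows a binomial distribution with probability 1/2; the exact two-sided P value used here is a standard choice but is an assumption of this sketch, as are the function name and the data.

```python
from math import comb

def sign_test(values, theta0):
    """Count + and - signs around theta0 and return an exact two-sided P value."""
    plus = sum(1 for x in values if x > theta0)
    minus = sum(1 for x in values if x < theta0)  # values equal to theta0 are dropped
    n = plus + minus
    k = min(plus, minus)
    # Probability of k or fewer of the rarer sign under Binomial(n, 1/2).
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return plus, minus, min(1.0, 2 * tail)

# Hypothetical observations tested against a reference median of 5.
print(sign_test([7, 8, 9, 10, 11, 12, 3, 5], theta0=5))
```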

Wilcoxon's signed rank test

There is a major limitation of sign test as we lose the quantitative information of the given data and merely use the + or – signs. Wilcoxon's signed rank test not only examines the observed values in comparison with θ0 but also takes into consideration the relative sizes, adding more statistical power to the test. As in the sign test, if there is an observed value that is equal to the reference value θ0, this observed value is eliminated from the sample.

Wilcoxon's rank sum test ranks all data points in order, calculates the rank sum of each sample and compares the difference in the rank sums.
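To show how the signed rank procedure uses the sizes of the differences and not just their signs, the sketch below ranks the absolute differences from θ0 and sums the ranks separately for positive and negative differences. Giving tied absolute differences the average of their ranks is an assumption of this sketch, and the function name and data are hypothetical.

```python
def signed_rank_sums(values, theta0):
    """Return (W+, W-): rank sums of positive and negative differences from theta0."""
    # Observations equal to the reference value are eliminated.
    diffs = sorted((x - theta0 for x in values if x != theta0), key=abs)
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(diffs):
        j = i
        # Extend j over a run of tied absolute differences.
        while j + 1 < len(diffs) and abs(diffs[j + 1]) == abs(diffs[i]):
            j += 1
        average_rank = (i + j) / 2 + 1
        for t in range(i, j + 1):
            ranks[t] = average_rank
        i = j + 1
    w_plus = sum(r for d, r in zip(diffs, ranks) if d > 0)
    w_minus = sum(r for d, r in zip(diffs, ranks) if d < 0)
    return w_plus, w_minus

print(signed_rank_sums([7, 3, 9, 5, 6], theta0=5))
```

If H0 is true, the two rank sums should be similar; a large imbalance is evidence against it.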

Mann-Whitney test

It is used to test the null hypothesis that two samples have the same median or, alternatively, whether observations in one sample tend to be larger than observations in the other.

Mann–Whitney test compares all data (xi) belonging to the X group and all data (yi) belonging to the Y group and calculates the probability of xi being greater than yi: P (xi > yi). The null hypothesis states that P (xi > yi) = P (xi < yi) =1/2 while the alternative hypothesis states that P (xi > yi) ≠1/2.
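The pairwise comparison described above can be written directly. In this sketch the U statistic counts the pairs in which xi exceeds yi; counting ties as one half is a common convention and an assumption here, and the function names and data are hypothetical.

```python
def mann_whitney_u(x, y):
    """U statistic: number of (xi, yi) pairs with xi > yi, counting ties as 0.5."""
    u = 0.0
    for xi in x:
        for yi in y:
            if xi > yi:
                u += 1.0
            elif xi == yi:
                u += 0.5
    return u

def prob_x_greater(x, y):
    """Estimate of P(xi > yi): U divided by the number of pairs."""
    return mann_whitney_u(x, y) / (len(x) * len(y))

x_group = [1, 2, 3]  # hypothetical data
y_group = [2, 3, 4]
print(mann_whitney_u(x_group, y_group), prob_x_greater(x_group, y_group))
```

Under the null hypothesis the estimated probability should be close to 1/2.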

Kolmogorov-Smirnov test

The two-sample Kolmogorov-Smirnov (KS) test was designed as a generic method to test whether two random samples are drawn from the same distribution. The null hypothesis of the KS test is that both distributions are identical. The statistic of the KS test is a distance between the two empirical distributions, computed as the maximum absolute difference between their cumulative curves.
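The KS statistic itself is straightforward to compute: evaluate both empirical cumulative distribution functions at every observed value and take the largest absolute gap. The following is a minimal sketch with a hypothetical function name and data; it returns only the statistic, not a P value.

```python
from bisect import bisect_right

def ks_statistic(a, b):
    """Maximum absolute difference between the two empirical CDFs."""
    a_sorted, b_sorted = sorted(a), sorted(b)

    def ecdf(sorted_values, t):
        # Fraction of observations less than or equal to t.
        return bisect_right(sorted_values, t) / len(sorted_values)

    # The largest gap must occur at one of the observed values.
    return max(abs(ecdf(a_sorted, t) - ecdf(b_sorted, t)) for t in a_sorted + b_sorted)

print(ks_statistic([1, 2, 3, 4], [3, 4, 5, 6]))
```

The statistic ranges from 0 (identical empirical distributions) to 1 (no overlap between the samples).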

Kruskal-Wallis test

The Kruskal–Wallis test is a non-parametric test to analyse the variance.[ 14 ] It analyses if there is any difference in the median values of three or more independent samples. The data values are ranked in an increasing order, and the rank sums calculated followed by calculation of the test statistic.
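The ranking and rank-sum steps can be sketched as follows. The test statistic used is the usual H = 12 / (N(N + 1)) · Σ(R_i² / n_i) − 3(N + 1), without a correction for ties; that formula is not given in the article, so treat it, the function names and the data as assumptions of this illustration.

```python
def average_ranks(values):
    """Rank values from 1 to N, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for t in range(i, j + 1):
            ranks[order[t]] = (i + j) / 2 + 1
        i = j + 1
    return ranks

def kruskal_wallis_h(groups):
    """Kruskal-Wallis H statistic (no tie correction) from pooled ranks."""
    pooled = [x for g in groups for x in g]
    ranks = average_ranks(pooled)
    n_total = len(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])  # rank sum of this group
        total += rank_sum ** 2 / len(g)
        start += len(g)
    return 12 / (n_total * (n_total + 1)) * total - 3 * (n_total + 1)

print(kruskal_wallis_h([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))  # hypothetical data
```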

Jonckheere test

In contrast to the Kruskal–Wallis test, the Jonckheere test assumes an a priori ordering of the groups, which gives it more statistical power than the Kruskal–Wallis test.[ 14 ]

Friedman test

The Friedman test is a non-parametric test for testing the difference between several related samples. The Friedman test is an alternative for repeated measures ANOVAs which is used when the same parameter has been measured under different conditions on the same subjects.[ 13 ]

Tests to analyse the categorical data

Chi-square test, Fisher's exact test and McNemar's test are used to analyse the categorical or nominal variables. The Chi-square test compares the frequencies and tests whether the observed data differ significantly from that of the expected data if there were no differences between groups (i.e., the null hypothesis). It is calculated by the sum of the squared difference between observed ( O ) and the expected ( E ) data (or the deviation, d ) divided by the expected data by the following formula:

$$\chi^2 = \sum \frac{(O - E)^2}{E}$$

A Yates correction factor is used when the sample size is small. Fisher's exact test is used to determine if there are non-random associations between two categorical variables. It does not assume random sampling, and instead of referring a calculated statistic to a sampling distribution, it calculates an exact probability. McNemar's test is used for paired nominal data. It is applied to a 2 × 2 table with paired-dependent samples. It is used to determine whether the row and column frequencies are equal (that is, whether there is ‘marginal homogeneity’). The null hypothesis is that the paired proportions are equal. The Mantel-Haenszel Chi-square test is a multivariate test as it analyses multiple grouping variables. It stratifies according to the nominated confounding variables and identifies any that affect the primary outcome variable. If the outcome variable is dichotomous, then logistic regression is used.
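As an illustration of the Chi-square calculation, the sketch below derives the expected count for each cell of a contingency table from its row and column totals and then sums (O − E)² / E over all cells. The function name and the 2 × 2 table are hypothetical, and no Yates correction is applied.

```python
def chi_square(table):
    """Chi-square statistic for a contingency table given as a list of rows."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if rows and columns were independent (the null hypothesis).
            expected = row_totals[i] * col_totals[j] / total
            statistic += (observed - expected) ** 2 / expected
    return statistic

# Hypothetical 2 x 2 table: rows are groups, columns are outcome present/absent.
print(chi_square([[10, 20], [20, 10]]))
```

The statistic is then referred to the Chi-square distribution with (rows − 1) × (columns − 1) degrees of freedom.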

SOFTWARES AVAILABLE FOR STATISTICS, SAMPLE SIZE CALCULATION AND POWER ANALYSIS

Numerous statistical software systems are available currently. The commonly used software systems are Statistical Package for the Social Sciences (SPSS – manufactured by IBM corporation), Statistical Analysis System (SAS – developed by SAS Institute, North Carolina, United States of America), R (designed by Ross Ihaka and Robert Gentleman from R core team), Minitab (developed by Minitab Inc), Stata (developed by StataCorp) and MS Excel (developed by Microsoft).

There are a number of web resources which are related to statistical power analyses. A few are:

- StatPages.net – provides links to a number of online power calculators

- G-Power – provides a downloadable power analysis program that runs under DOS

- Power analysis for ANOVA designs – an interactive site that calculates power or sample size needed to attain a given power for one effect in a factorial ANOVA design

- SPSS makes a program called SamplePower. It gives an output of a complete report on the computer screen which can be cut and pasted into another document.

It is important that a researcher knows the concepts of the basic statistical methods used for conduct of a research study. This will help to conduct an appropriately well-designed study leading to valid and reliable results. Inappropriate use of statistical techniques may lead to faulty conclusions, inducing errors and undermining the significance of the article. Bad statistics may lead to bad research, and bad research may lead to unethical practice. Hence, an adequate knowledge of statistics and the appropriate use of statistical tests are important. An appropriate knowledge about the basic statistical methods will go a long way in improving the research designs and producing quality medical research which can be utilised for formulating the evidence-based guidelines.

Financial support and sponsorship

Conflicts of interest.

There are no conflicts of interest.


Introduction to Statistical Analysis: A Beginner’s Guide.

Statistical analysis is a crucial component of research work across various disciplines, helping researchers derive meaningful insights from data. Whether you’re conducting scientific studies, social research, or data-driven investigations, having a solid understanding of statistical analysis is essential. In this beginner’s guide, we will explore the fundamental concepts and techniques of statistical analysis specifically tailored for research work, providing you with a strong foundation to enhance the quality and credibility of your research findings.

1. Importance of Statistical Analysis in Research:

Research aims to uncover knowledge and make informed conclusions. Statistical analysis plays a pivotal role in achieving this by providing tools and methods to analyze and interpret data accurately. It helps researchers identify patterns, test hypotheses, draw inferences, and quantify the strength of relationships between variables. Understanding the significance of statistical analysis empowers researchers to make evidence-based decisions.

2. Data Collection and Organization:

Before diving into statistical analysis, researchers must collect and organize their data effectively. We will discuss the importance of proper sampling techniques, data quality assurance, and data preprocessing. Additionally, we will explore methods to handle missing data and outliers, ensuring that your dataset is reliable and suitable for analysis.

3. Exploratory Data Analysis (EDA):

Exploratory Data Analysis is a preliminary step that involves visually exploring and summarizing the main characteristics of the data. We will cover techniques such as data visualization, descriptive statistics, and data transformations to gain insights into the distribution, central tendencies, and variability of the variables in your dataset. EDA helps researchers understand the underlying structure of the data and identify potential relationships for further investigation.

4. Statistical Inference and Hypothesis Testing:

Statistical inference allows researchers to make generalizations about a population based on a sample. We will delve into hypothesis testing, covering concepts such as null and alternative hypotheses, p-values, and significance levels. By understanding these concepts, you will be able to test your research hypotheses and determine if the observed results are statistically significant.

5. Parametric and Non-parametric Tests:

Parametric and non-parametric tests are statistical techniques used to analyze data based on different assumptions about the underlying population distribution. We will explore commonly used parametric tests, such as t-tests and analysis of variance (ANOVA), as well as non-parametric tests like the Mann-Whitney U test and Kruskal-Wallis test. Understanding when to use each type of test is crucial for selecting the appropriate analysis method for your research questions.

6. Correlation and Regression Analysis:

Correlation and regression analysis allow researchers to explore relationships between variables and make predictions. We will cover Pearson correlation coefficients, multiple regression analysis, and logistic regression. These techniques enable researchers to quantify the strength and direction of associations and identify predictive factors in their research.
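As a small illustration of the first of these techniques, the Pearson correlation coefficient can be computed from the deviations of each variable about its mean. The function name and the data below are hypothetical; this is a sketch of the standard formula rather than a full correlation analysis.

```python
import math

def pearson_r(x, y):
    """Pearson correlation: covariance scaled by the spread of each variable."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    s_xx = sum((a - mean_x) ** 2 for a in x)
    s_yy = sum((b - mean_y) ** 2 for b in y)
    return s_xy / math.sqrt(s_xx * s_yy)

hours = [1, 2, 3, 4, 5]        # hypothetical predictor
scores = [52, 55, 61, 64, 70]  # hypothetical outcome
print(pearson_r(hours, scores))
```

The coefficient runs from −1 (perfect negative linear association) through 0 (no linear association) to +1 (perfect positive linear association).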

7. Sample Size Determination and Power Analysis:

Sample size determination is a critical aspect of research design, as it affects the validity and reliability of your findings. We will discuss methods for estimating sample size based on statistical power analysis, ensuring that your study has sufficient statistical power to detect meaningful effects. Understanding sample size determination is essential for planning robust research studies.
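To make this concrete, here is a minimal sketch of one widely used approximation for comparing the means of two equal-sized groups: n per group = 2 · ((z for α/2 + z for power) · σ / δ)². The formula choice, the function name and the default values (α = 0.05, power = 0.80) are assumptions for illustration; dedicated power-analysis software handles many more designs.

```python
import math
from statistics import NormalDist

def n_per_group(delta, sigma, alpha=0.05, power=0.80):
    """Approximate sample size per group to detect a mean difference `delta`.

    Uses the normal approximation for a two-sided, two-group comparison
    with a common standard deviation `sigma`.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the test
    z_power = NormalDist().inv_cdf(power)          # quantile for the desired power
    return math.ceil(2 * ((z_alpha + z_power) * sigma / delta) ** 2)

# Hypothetical planning values: detect a difference of half a standard deviation.
print(n_per_group(delta=0.5, sigma=1.0))
```

Halving the difference you want to detect roughly quadruples the required sample size, which is why the expected effect size matters so much at the planning stage.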

Conclusion:

Statistical analysis is an indispensable tool for conducting high-quality research. This beginner’s guide has provided an overview of key concepts and techniques specifically tailored for research work, enabling you to enhance the credibility and reliability of your findings. By understanding the importance of statistical analysis, collecting and organizing data effectively, performing exploratory data analysis, conducting hypothesis testing, utilizing parametric and non-parametric tests, and considering sample size determination, you will be well-equipped to carry out rigorous research and contribute valuable insights to your field. Remember, continuous learning, practice, and seeking guidance from statistical experts will further enhance your skills in statistical analysis for research.


Quantitative Data Analysis 101

The lingo, methods and techniques, explained simply.

By: Derek Jansen (MBA) and Kerryn Warren (PhD) | December 2020

Quantitative data analysis is one of those things that often strikes fear in students. It’s totally understandable – quantitative analysis is a complex topic, full of daunting lingo, like medians, modes, correlation and regression. Suddenly we’re all wishing we’d paid a little more attention in math class…

The good news is that while quantitative data analysis is a mammoth topic, gaining a working understanding of the basics isn’t that hard, even for those of us who avoid numbers and math. In this post, we’ll break quantitative analysis down into simple, bite-sized chunks so you can approach your research with confidence.

Overview: Quantitative Data Analysis 101

- What (exactly) is quantitative data analysis?

- When to use quantitative analysis

- How quantitative analysis works

- The two “branches” of quantitative analysis

- Descriptive statistics 101

- Inferential statistics 101

- How to choose the right quantitative methods

- Recap & summary

What is quantitative data analysis?

Despite being a mouthful, quantitative data analysis simply means analysing data that is numbers-based – or data that can be easily “converted” into numbers without losing any meaning.

For example, category-based variables like gender, ethnicity, or native language could all be “converted” into numbers without losing meaning – for example, English could equal 1, French 2, etc.
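Here’s a quick sketch of what that “conversion” might look like in Python. The function name and the list of responses are made up for illustration, and codes are simply handed out in order of first appearance.

```python
def encode_categories(values):
    """Map each distinct category to an integer code, in order of first appearance."""
    codes = {}
    for value in values:
        if value not in codes:
            codes[value] = len(codes) + 1
    return [codes[value] for value in values], codes

# Hypothetical survey responses for "native language".
responses = ["English", "French", "English", "Zulu"]
encoded, codebook = encode_categories(responses)
print(encoded)   # numeric version of the responses
print(codebook)  # which number stands for which language
```

Keep in mind that these numbers are just labels: French being 2 doesn’t make it “twice” English, so averages of such codes are meaningless.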

This contrasts with qualitative data analysis, where the focus is on words, phrases and expressions that can’t be reduced to numbers. If you’re interested in learning about qualitative analysis, check out our post and video here.

What is quantitative analysis used for?

Quantitative analysis is generally used for three purposes.

- Firstly, it’s used to measure differences between groups. For example, the popularity of different clothing colours or brands.

- Secondly, it’s used to assess relationships between variables. For example, the relationship between weather temperature and voter turnout.

- And third, it’s used to test hypotheses in a scientifically rigorous way. For example, a hypothesis about the impact of a certain vaccine.

Again, this contrasts with qualitative analysis, which can be used to analyse people’s perceptions and feelings about an event or situation. In other words, things that can’t be reduced to numbers.

How does quantitative analysis work?

Well, since quantitative data analysis is all about analysing numbers, it’s no surprise that it involves statistics. Statistical analysis methods form the engine that powers quantitative analysis, and these methods can vary from pretty basic calculations (for example, averages and medians) to more sophisticated analyses (for example, correlations and regressions).

Sounds like gibberish? Don’t worry. We’ll explain all of that in this post. Importantly, you don’t need to be a statistician or math wiz to pull off a good quantitative analysis. We’ll break down all the technical mumbo jumbo in this post.

As I mentioned, quantitative analysis is powered by statistical analysis methods. There are two main “branches” of statistical methods that are used – descriptive statistics and inferential statistics. In your research, you might only use descriptive statistics, or you might use a mix of both, depending on what you’re trying to figure out. In other words, depending on your research questions, aims and objectives. I’ll explain how to choose your methods later.

So, what are descriptive and inferential statistics?

Well, before I can explain that, we need to take a quick detour to explain some lingo. To understand the difference between these two branches of statistics, you need to understand two important words. These words are population and sample.

First up, population. In statistics, the population is the entire group of people (or animals or organisations or whatever) that you’re interested in researching. For example, if you were interested in researching Tesla owners in the US, then the population would be all Tesla owners in the US.

However, it’s extremely unlikely that you’re going to be able to interview or survey every single Tesla owner in the US. Realistically, you’ll likely only get access to a few hundred, or maybe a few thousand owners using an online survey. This smaller group of accessible people whose data you actually collect is called your sample.

So, to recap – the population is the entire group of people you’re interested in, and the sample is the subset of the population that you can actually get access to. In other words, the population is the full chocolate cake, whereas the sample is a slice of that cake.
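To make the cake-and-slice idea concrete, here’s a minimal sketch that draws a simple random sample from a population. The population of 10,000 numbered owners and the sample size of 500 are invented for illustration; real surveys rarely get a perfectly random draw like this.

```python
import random

# Hypothetical population: 10,000 owner IDs standing in for "all Tesla owners".
population = list(range(10_000))

rng = random.Random(42)               # fixed seed so the draw is repeatable
sample = rng.sample(population, 500)  # simple random sample, without replacement

print(len(population), len(sample))   # the full cake versus the slice
```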

So, why is this sample-population thing important?

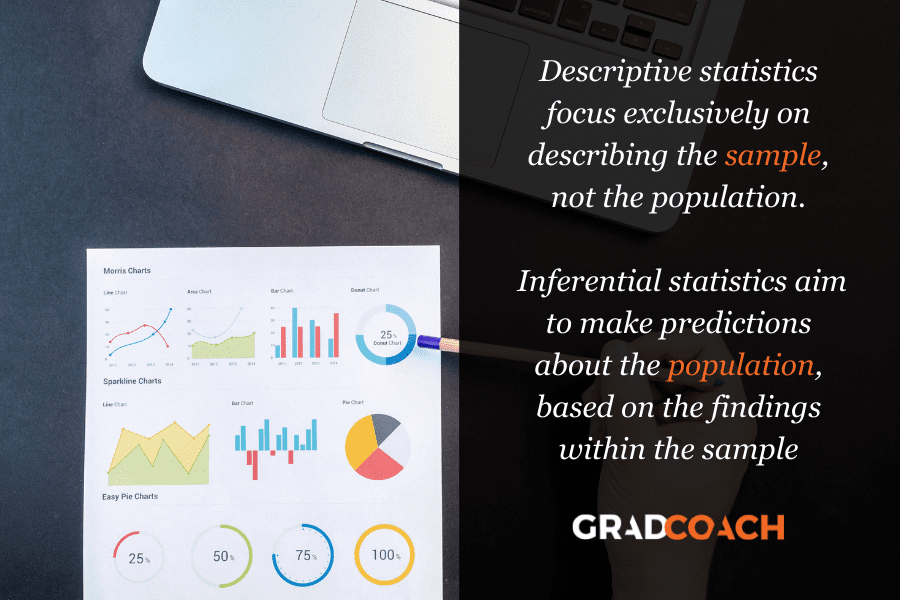

Well, descriptive statistics focus on describing the sample, while inferential statistics aim to make predictions about the population, based on the findings within the sample. In other words, we use one group of statistical methods – descriptive statistics – to investigate the slice of cake, and another group of methods – inferential statistics – to draw conclusions about the entire cake. There I go with the cake analogy again…

With that out the way, let’s take a closer look at each of these branches in more detail.

Branch 1: Descriptive Statistics

Descriptive statistics serve a simple but critically important role in your research – to describe your data set – hence the name. In other words, they help you understand the details of your sample. Unlike inferential statistics (which we’ll get to soon), descriptive statistics don’t aim to make inferences or predictions about the entire population – they’re purely interested in the details of your specific sample.

When you’re writing up your analysis, descriptive statistics are the first set of stats you’ll cover, before moving on to inferential statistics. But, that said, depending on your research objectives and research questions, they may be the only type of statistics you use. We’ll explore that a little later.

So, what kind of statistics are usually covered in this section?

Some common descriptive statistics include the following:

- Mean – this is simply the mathematical average of a range of numbers.

- Median – this is the midpoint in a range of numbers when the numbers are arranged in numerical order. If the data set makes up an odd number, then the median is the number right in the middle of the set. If the data set makes up an even number, then the median is the midpoint between the two middle numbers.

- Mode – this is simply the most commonly occurring number in the data set.

- Standard deviation – this metric indicates how dispersed a range of numbers is. In other words, how close all the numbers are to the mean (the average). In cases where most of the numbers are quite close to the average, the standard deviation will be relatively low. Conversely, in cases where the numbers are scattered all over the place, the standard deviation will be relatively high.

- Skewness – as the name suggests, skewness indicates how symmetrical a range of numbers is. In other words, do they tend to cluster into a smooth bell curve shape in the middle of the graph, or do they skew to the left or right?
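If you’d like to see how these statistics are calculated, here’s a minimal sketch using Python’s built-in `statistics` module. The ten weights are made up for illustration and are not taken from any figure in this post. The skewness function uses the adjusted Fisher-Pearson formula, which is one common choice (other tools may use a slightly different formula and give slightly different values).

```python
import statistics
from collections import Counter


def modes(values):
    """Return the most common value(s), or [] when no value repeats."""
    counts = Counter(values)
    top = max(counts.values())
    return [v for v, c in counts.items() if c == top] if top > 1 else []


def skewness(values):
    """Adjusted Fisher-Pearson sample skewness (an assumed choice of formula)."""
    n = len(values)
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample standard deviation (n - 1)
    return n / ((n - 1) * (n - 2)) * sum(((x - mean) / sd) ** 3 for x in values)


# Hypothetical bodyweights in kilograms.
weights_kg = [55, 62, 65, 68, 70, 73, 75, 78, 82, 88]

summary = {
    "mean": statistics.mean(weights_kg),
    "median": statistics.median(weights_kg),
    "mode": modes(weights_kg),
    "stdev": statistics.stdev(weights_kg),
    "skewness": skewness(weights_kg),
}

for name, value in summary.items():
    print(name, value)
```

Note that `modes` returns an empty list when every value appears only once, which mirrors the idea that such a data set has no "most common number".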

Feeling a bit confused? Let’s look at a practical example using a small data set.

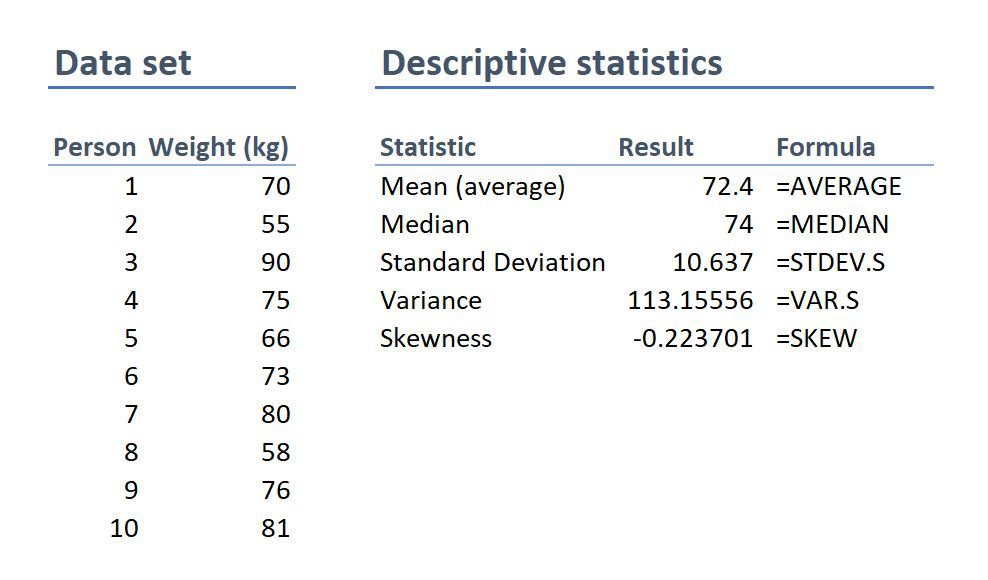

On the left-hand side is the data set. This details the bodyweight of a sample of 10 people. On the right-hand side, we have the descriptive statistics. Let’s take a look at each of them.

First, we can see that the mean weight is 72.4 kilograms. In other words, the average weight across the sample is 72.4 kilograms. Straightforward.

Next, we can see that the median is very similar to the mean (the average). This suggests that this data set has a reasonably symmetrical distribution (in other words, a relatively smooth, centred distribution of weights, clustered towards the centre).

In terms of the mode, there is no mode in this data set. This is because each number is present only once and so there cannot be a “most common number”. If there were two people who were both 65 kilograms, for example, then the mode would be 65.

Next up is the standard deviation. A value of 10.6 indicates that there’s quite a wide spread of numbers. We can see this quite easily by looking at the numbers themselves, which range from 55 to 90, which is quite a stretch from the mean of 72.4.

And lastly, the skewness of -0.2 tells us that the data is very slightly negatively skewed. This makes sense since the mean and the median are slightly different.

As you can see, these descriptive statistics give us some useful insight into the data set. Of course, this is a very small data set (only 10 records), so we can’t read into these statistics too much. Also, keep in mind that this is not a list of all possible descriptive statistics – just the most common ones.

But why do all of these numbers matter?

While these descriptive statistics are all fairly basic, they’re important for a few reasons:

- Firstly, they help you get both a macro and micro-level view of your data. In other words, they help you understand both the big picture and the finer details.

- Secondly, they help you spot potential errors in the data – for example, if an average is way higher than you’d expect, or responses to a question are highly varied, this can act as a warning sign that you need to double-check the data.

- And lastly, these descriptive statistics help inform which inferential statistical techniques you can use, as those techniques depend on the skewness (in other words, the symmetry and normality) of the data.

Simply put, descriptive statistics are really important, even though the statistical techniques used are fairly basic. All too often at Grad Coach, we see students skimming over the descriptives in their eagerness to get to the more exciting inferential methods, and then ending up with some very flawed results.

Don’t be a sucker – give your descriptive statistics the love and attention they deserve!

Branch 2: Inferential Statistics

As I mentioned, while descriptive statistics are all about the details of your specific data set – your sample – inferential statistics aim to make inferences about the population. In other words, you’ll use inferential statistics to make predictions about what you’d expect to find in the full population.

What kind of predictions, you ask? Well, there are two common types of predictions that researchers try to make using inferential stats:

- Firstly, predictions about differences between groups – for example, height differences between children grouped by their favourite meal or gender.

- And secondly, relationships between variables – for example, the relationship between body weight and the number of hours a week a person does yoga.

In other words, inferential statistics (when done correctly) allow you to connect the dots and make predictions about what you expect to see in the real-world population, based on what you observe in your sample data. For this reason, inferential statistics are used for hypothesis testing – in other words, to test hypotheses that predict changes or differences.

Of course, when you’re working with inferential statistics, the composition of your sample is really important. In other words, if your sample doesn’t accurately represent the population you’re researching, then your findings won’t necessarily be very useful.

For example, if your population of interest is a mix of 50% male and 50% female, but your sample is 80% male, you can’t reliably make inferences about the population based on your sample, since it’s not representative. This area of statistics is called sampling, but we won’t go down that rabbit hole here (it’s a deep one!) – we’ll save that for another post.
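As a quick sanity check along these lines, the sketch below compares the make-up of a sample against known population shares. The 50/50 and 80/20 figures follow the example above, while the function names and the idea of reporting the "largest gap" are assumptions made for illustration (this is not a formal sampling test).

```python
from collections import Counter


def proportions(labels):
    """Share of each category within a list of labels."""
    counts = Counter(labels)
    total = len(labels)
    return {label: count / total for label, count in counts.items()}


def largest_gap(sample_labels, population_shares):
    """Biggest absolute difference between sample and population shares."""
    sample_shares = proportions(sample_labels)
    return max(
        abs(sample_shares.get(label, 0.0) - share)
        for label, share in population_shares.items()
    )


population_shares = {"male": 0.5, "female": 0.5}  # known make-up of the population
sample_labels = ["male"] * 80 + ["female"] * 20   # a sample that is 80% male

gap = largest_gap(sample_labels, population_shares)
print(round(gap, 2))  # 0.3, i.e. the sample is off by 30 percentage points
```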

What statistics are usually used in this branch?

There are many, many different statistical analysis methods within the inferential branch and it’d be impossible for us to discuss them all here. So we’ll just take a look at some of the most common inferential statistical methods so that you have a solid starting point.

First up are t-tests. T-tests compare the means (the averages) of two groups of data to assess whether they’re statistically significantly different. In other words, is the gap between the two means larger than you’d expect from chance alone, given how spread out the data is within each group?

This type of testing is very useful for understanding just how similar or different two groups of data are. For example, you might want to compare the mean blood pressure between two groups of people – one that has taken a new medication and one that hasn’t – to assess whether they are significantly different.
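To show what sits underneath a t-test, here’s a minimal sketch that calculates the t-statistic and degrees of freedom by hand. It uses Welch’s version of the test (which doesn’t assume the two groups have equal variances), and that choice is an assumption for this example. The blood pressure readings are made up. Turning the t-statistic into a p-value needs the t-distribution, which a library such as SciPy provides via `scipy.stats.ttest_ind(a, b, equal_var=False)`.

```python
import math
import statistics


def welch_t(group_a, group_b):
    """Return (t statistic, degrees of freedom) for Welch's two-sample t-test."""
    mean_a, mean_b = statistics.mean(group_a), statistics.mean(group_b)
    # Squared standard error of each group's mean: sample variance / n.
    se_a = statistics.variance(group_a) / len(group_a)
    se_b = statistics.variance(group_b) / len(group_b)
    t = (mean_a - mean_b) / math.sqrt(se_a + se_b)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (se_a + se_b) ** 2 / (
        se_a ** 2 / (len(group_a) - 1) + se_b ** 2 / (len(group_b) - 1)
    )
    return t, df


# Hypothetical systolic blood pressure readings (mmHg).
medicated = [118, 122, 125, 120, 119, 124]
control = [130, 128, 135, 132, 127, 131]

t_stat, dof = welch_t(medicated, control)
print(t_stat, dof)  # a negative t means the medicated group's mean is lower
```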

Kicking things up a level, we have ANOVA, which stands for “analysis of variance”. This test is similar to a t-test in that it compares the means of various groups, but ANOVA allows you to analyse multiple groups, not just two. So it’s basically a t-test on steroids…
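For the curious, the core of a one-way ANOVA is the F-statistic, which compares how much the group means differ from each other against how much the values vary within each group. Here’s a minimal sketch of that calculation. The function name and the three small groups are made up for illustration, and a library such as SciPy (`scipy.stats.f_oneway`) would also give you the p-value.

```python
import statistics


def one_way_anova_f(*groups):
    """Return (F statistic, df between groups, df within groups)."""
    all_values = [x for group in groups for x in group]
    grand_mean = statistics.mean(all_values)
    k, n = len(groups), len(all_values)

    # Variation of the group means around the grand mean.
    ss_between = sum(
        len(group) * (statistics.mean(group) - grand_mean) ** 2 for group in groups
    )
    # Variation of individual values around their own group mean.
    ss_within = sum(
        (x - statistics.mean(group)) ** 2 for group in groups for x in group
    )

    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Three hypothetical groups of measurements.
f_stat, df_b, df_w = one_way_anova_f([1, 2, 3], [2, 3, 4], [3, 4, 5])
print(f_stat, df_b, df_w)  # 3.0 2 6
```

The larger the F-statistic, the more the group means differ relative to the noise within the groups.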

Next, we have correlation analysis. This type of analysis assesses the relationship between two variables. In other words, if one variable increases, does the other variable also increase, decrease or stay the same? For example, if the average temperature goes up, do average ice cream sales increase too? We’d expect some sort of relationship between these two variables intuitively, but correlation analysis allows us to measure that relationship scientifically.
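One widely used measure here is Pearson’s correlation coefficient, which runs from -1 (a perfect negative relationship) through 0 (no linear relationship) to +1 (a perfect positive relationship). The sketch below calculates it by hand. The temperature and sales figures are invented for illustration.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    # How the two variables move together...
    covariation = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # ...scaled by how much each one varies on its own.
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    return covariation / math.sqrt(spread_x * spread_y)


# Hypothetical daily temperatures (°C) and ice cream sales (units).
temperature = [18, 21, 24, 27, 30, 33]
sales = [120, 135, 160, 158, 190, 210]

r = pearson_r(temperature, sales)
print(round(r, 2))  # close to +1 for this made-up data
```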

Lastly, we have regression analysis – this is quite similar to correlation in that it assesses the relationship between variables, but it goes a step further by modelling how much one variable is expected to change when another changes, which lets you make predictions. Regression is often used to investigate cause and effect, but on its own it can’t prove it. In other words, does the one variable actually cause the other one to move, or do they just happen to move together thanks to another force? Answering that also depends on your research design. Just because two variables correlate doesn’t necessarily mean that one causes the other.
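The simplest form of regression fits a straight line through the data using least squares. Here’s a minimal sketch of that idea. The height and weight values are made up, and the fitted line only describes how the two variables move together in this data (it says nothing about what causes what).

```python
def fit_line(xs, ys):
    """Least-squares slope and intercept for y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical heights (cm) and weights (kg).
heights = [155, 160, 165, 170, 175, 180, 185]
weights = [52, 58, 61, 67, 70, 77, 81]

slope, intercept = fit_line(heights, weights)
predicted = slope * 172 + intercept  # predicted weight for someone 172 cm tall
print(slope, intercept, predicted)
```

The slope tells you how many kilograms of weight the line adds for each extra centimetre of height, which is the "step further" beyond a correlation coefficient.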

Stats overload…

I hear you. To make this all a little more tangible, let’s take a look at an example of a correlation in action.

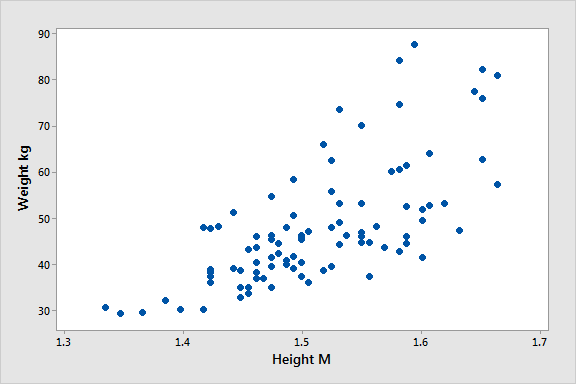

Here’s a scatter plot demonstrating the correlation (relationship) between weight and height. Intuitively, we’d expect there to be some relationship between these two variables, which is what we see in this scatter plot. In other words, the results tend to cluster together in a diagonal line from bottom left to top right.

As I mentioned, these are just a handful of inferential techniques – there are many, many more. Importantly, each statistical method has its own assumptions and limitations.

For example, some methods (known as parametric methods) assume that the data is normally distributed, while other (non-parametric) methods are designed for data that doesn’t meet that assumption. And that’s exactly why descriptive statistics are so important – they’re the first step to knowing which inferential techniques you can and can’t use.

How to choose the right analysis method

To choose the right statistical methods, you need to think about two important factors:

- The type of quantitative data you have (specifically, level of measurement and the shape of the data).

- Your research questions and hypotheses.

Let’s take a closer look at each of these.

Factor 1 – Data type

The first thing you need to consider is the type of data you’ve collected (or the type of data you will collect). By data types, I’m referring to the four levels of measurement – namely, nominal, ordinal, interval and ratio. In short, nominal data is simply categorised, ordinal data is ranked, interval data is measured on an evenly spaced scale, and ratio data is measured on a scale with a true zero point.

Why does this matter?

Well, because different statistical methods and techniques require different types of data. This is one of the “assumptions” I mentioned earlier – every method has its assumptions regarding the type of data.

For example, some techniques work with categorical data (for example, yes/no type questions, or gender or ethnicity), while others work with continuous numerical data (for example, age, weight or income) – and, of course, some work with multiple data types.

If you try to use a statistical method that doesn’t support the data type you have, your results will be largely meaningless. So, make sure that you have a clear understanding of what types of data you’ve collected (or will collect). Once you have this, you can then check which statistical methods support your data types.
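As a simple illustration of matching the method to the data type, the sketch below picks a measure of "the typical value" based on the level of measurement. The mapping (mode for nominal, median for ordinal, mean for interval and ratio) is a common rule of thumb rather than a hard rule, and the function name and example data are made up.

```python
import statistics


def central_tendency(values, level):
    """Pick a measure of the typical value that suits the level of measurement."""
    if level == "nominal":
        return statistics.mode(values)        # categories: most common label
    if level == "ordinal":
        return statistics.median_low(values)  # ranks: middle value that occurs in the data
    if level in ("interval", "ratio"):
        return statistics.mean(values)        # true numbers: arithmetic average
    raise ValueError(f"Unknown level of measurement: {level}")


print(central_tendency(["yes", "no", "yes"], "nominal"))  # yes
print(central_tendency([1, 2, 2, 3, 5], "ordinal"))       # 2
print(central_tendency([20, 30, 40], "ratio"))            # 30
```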