The Space Shuttle Challenger Disaster

A case study looking at the explosion of the Challenger Space Shuttle.

On January 28, 1986, seven astronauts were killed when the space shuttle they were piloting, the Challenger, exploded just over a minute into the flight. The failure of the solid rocket booster O-rings to seat properly allowed hot combustion gases to leak from the side of the booster and burn through the external fuel tank. The failure of the O-ring was attributed to several factors, including faulty design of the solid rocket boosters, insufficient low-temperature testing of the O-ring material and the joints that the O-ring sealed, and lack of proper communication between different levels of NASA management.

Instructor's Guide

Introduction to the case.

On January 28, 1986, seven astronauts were killed when the space shuttle they were piloting, the Challenger, exploded just over a minute into the flight. The failure of the solid rocket booster O-rings to seat properly allowed hot combustion gases to leak from the side of the booster and burn through the external fuel tank. The failure of the O-ring was attributed to several factors, including faulty design of the solid rocket boosters, insufficient low-temperature testing of the O-ring material and the joints that the O-ring sealed, and lack of proper communication between different levels of NASA management.

Instructor Guidelines

Prior to class discussion, ask the students to read the student handout outside of class. In class, the details of the case can be reviewed with the aid of the overheads. Reserve about half of the class period for an open discussion of the issues. The issues covered in the student handout include the importance of an engineer's responsibility to public welfare, the need for this responsibility to take precedence over any other responsibilities the engineer might have, and the responsibilities of a manager/engineer. A final point is that no matter how far removed from the public an engineer may think she is, all of her actions have potential impact.

Essay #6, "Loyalty and Professional Rights," appended at the end of the case listings in this report, will be found relevant for instructors preparing to lead class discussion on this case. In addition, essays #1 through #4, appended at the end of the cases in this report, will have relevant background information for the instructor preparing to lead classroom discussion. Their titles are, respectively: "Ethics and Professionalism in Engineering: Why the Interest in Engineering Ethics?"; "Basic Concepts and Methods in Ethics"; "Moral Concepts and Theories"; and "Engineering Design: Literature on Social Responsibility Versus Legal Liability."

Questions for Class Discussion

1. What could NASA management have done differently?

2. What, if anything, could their subordinates have done differently?

3. What should Roger Boisjoly have done differently (if anything)? In answering this question, keep in mind that at his age, the prospect of finding a new job if he was fired was slim. He also had a family to support.

4. What do you (the students) see as your future engineering professional responsibilities in relation to both being loyal to management and protecting the public welfare?

HOW DOES THE IMPLIED SOCIAL CONTRACT OF PROFESSIONALS APPLY TO THIS CASE?

WHAT PROFESSIONAL RESPONSIBILITIES WERE NEGLECTED, IF ANY?

SHOULD NASA HAVE DONE ANYTHING DIFFERENTLY IN THEIR LAUNCH DECISION PROCEDURE?

Student Handout - Synopsis

On January 28, 1986, seven astronauts were killed when the space shuttle they were piloting, the Challenger, exploded just over a minute into flight. The failure of the solid rocket booster O-rings to seat properly allowed hot combustion gases to leak from the side of the booster and burn through the external fuel tank. The failure of the O-ring was attributed to several factors, including faulty design of the solid rocket boosters, insufficient low temperature testing of the O-ring material and the joints that the O-ring sealed, and lack of communication between different levels of NASA management.

Organization and People Involved

Marshall Space Flight Center - In charge of booster rocket development

Larry Mulloy - Challenged the engineers' decision not to launch

Morton Thiokol - Contracted by NASA to build the Solid Rocket Booster

Alan McDonald - Director of the Solid Rocket Motors Project

Bob Lund - Engineering Vice President

Robert Ebeling - Engineer who worked under McDonald

Roger Boisjoly - Engineer who worked under McDonald

Joe Kilminster - Engineer in a management position

Jerald Mason - Senior Executive who encouraged Lund to reassess his decision not to launch.

1974 - Morton-Thiokol awarded contract to build solid rocket boosters.

1976 - NASA accepts Morton-Thiokol's booster design.

1977 - Morton-Thiokol discovers joint rotation problem.

November 1981 - O-ring erosion discovered after second shuttle flight.

January 24, 1985 - shuttle flight that exhibited the worst O-ring blow-by.

July 1985 - Thiokol orders new steel billets for new field joint design.

August 19, 1985 - NASA Level I management briefed on booster problem.

January 27, 1986 - night teleconference to discuss effects of cold temperature on booster performance.

January 28, 1986 - Challenger explodes 73 seconds after liftoff.

NASA managers were anxious to launch the Challenger for several reasons, including economic considerations, political pressures, and scheduling backlogs. Unforeseen competition from the European Space Agency put NASA in a position where it would have to fly the shuttle dependably on a very ambitious schedule in order to prove the Space Transportation System's cost effectiveness and potential for commercialization. This prompted NASA to schedule a record number of missions in 1986 to make a case for its budget requests. The shuttle mission just prior to the Challenger had been delayed a record number of times due to inclement weather and mechanical factors.

NASA wanted to launch the Challenger without any delays so the launch pad could be refurbished in time for the next mission, which would be carrying a probe that would examine Halley's Comet. If launched on time, this probe would have collected data a few days before a similar Russian probe would be launched. There was probably also pressure to launch Challenger so it could be in space when President Reagan gave his State of the Union address. Reagan's main topic was to be education, and he was expected to mention the shuttle and the first teacher in space, Christa McAuliffe.

The shuttle solid rocket boosters (or SRBs) are key elements in the operation of the shuttle. Without the boosters, the shuttle cannot produce enough thrust to overcome the earth's gravitational pull and achieve orbit.

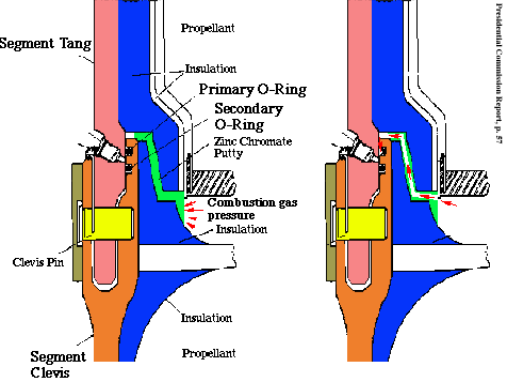

There is an SRB attached to each side of the external fuel tank. Each booster is 149 feet long and 12 feet in diameter. Before ignition, each booster weighs 2 million pounds. Solid rockets in general produce much more thrust per pound than their liquid fuel counterparts. The drawback is that once the solid rocket fuel has been ignited, it cannot be turned off or even controlled. So it was extremely important that the shuttle SRBs were properly designed. Morton Thiokol was awarded the contract to design and build the SRBs in 1974. Thiokol's design is a scaled-up version of a Titan missile which had been used successfully for years. NASA accepted the design in 1976. The booster is comprised of seven hollow metal cylinders. The solid rocket fuel is cast into the cylinders at the Thiokol plant in Utah, and the cylinders are assembled into pairs for transport to Kennedy Space Center in Florida. At KSC, the four booster segments are assembled into a completed booster rocket. The joints where the segments are joined together at KSC are known as field joints (See Figure 1).

These field joints consist of a tang and clevis joint. The tang and clevis are held together by 177 clevis pins. Each joint is sealed by two O-rings, the bottom ring known as the primary O-ring, and the top known as the secondary O-ring. (The Titan booster had only one O-ring. The second ring was added as a measure of redundancy since the boosters would be lifting humans into orbit. Except for the increased scale of the rocket's diameter, this was the only major difference between the shuttle booster and the Titan booster.) The purpose of the O-rings is to prevent hot combustion gases from escaping from the inside of the motor. To provide a barrier between the rubber O-rings and the combustion gases, a heat-resistant putty is applied to the inner section of the joint prior to assembly. The gap between the tang and the clevis determines the amount of compression on the O-ring. To minimize the gap and increase the squeeze on the O-ring, shims are inserted between the tang and the outside leg of the clevis.

Launch Delays

The first delay of the Challenger mission was because of a weather front expected to move into the area, bringing rain and cold temperatures. Usually a mission wasn't postponed until inclement weather actually entered the area, but the Vice President was expected to be present for the launch and NASA officials wanted to avoid the necessity of the Vice President's having to make an unnecessary trip to Florida; so they postponed the launch early. The Vice President was a key spokesperson for the President on the space program, and NASA coveted his good will.

The weather front stalled, and the launch window had perfect weather conditions; but the launch had already been postponed to keep the Vice President from unnecessarily traveling to the launch site. The second launch delay was caused by a defective micro switch in the hatch locking mechanism and by problems in removing the hatch handle. By the time these problems had been sorted out, winds had become too high. The weather front had started moving again, and appeared to be bringing record-setting low temperatures to the Florida area.

NASA wanted to check with all of its contractors to determine if there would be any problems with launching in the cold temperatures. Alan McDonald, director of the Solid Rocket Motor Project at Morton Thiokol, was convinced that there were cold weather problems with the solid rocket motors and contacted two of the engineers working on the project, Robert Ebeling and Roger Boisjoly. Thiokol knew there was a problem with the boosters as early as 1977, and had initiated a redesign effort in 1985. NASA Level I management had been briefed on the problem on August 19, 1985. Almost half of the shuttle flights had experienced O-ring erosion in the booster field joints. Ebeling and Boisjoly had complained to Thiokol that management was not supporting the redesign task force.

Engineering Design

The size of the gap is controlled by several factors, including the dimensional tolerances of the metal cylinders and their corresponding tang or clevis, the ambient temperature, the diameter of the O-ring, the thickness of the shims, the loads on the segment, and quality control during assembly. When the booster is ignited, the putty is displaced, compressing the air between the putty and the primary O-ring.

The air pressure forces the O-ring into the gap between the tang and clevis. Pressure loads are also applied to the walls of the cylinder, causing the cylinder to balloon slightly. This ballooning of the cylinder walls causes the gap between the tang and clevis to open. This effect has come to be known as joint rotation. Morton-Thiokol discovered this joint rotation as part of its testing program in 1977. Thiokol discussed the problem with NASA and started analyzing and testing to determine how to increase the O-ring compression, thereby decreasing the effect of joint rotation. Three design changes were implemented:

1. Dimensional tolerances of the metal joint were tightened.

2. The O-ring diameter was increased, and its dimensional tolerances were tightened.

3. The use of the shims mentioned above was introduced.

Further testing by Thiokol revealed that the second seal, in some cases, might not seal at all. Additional changes in the shim thickness and O-ring diameter were made to correct the problem.

A new problem was discovered during November 1981, after the flight of the second shuttle mission. Examination of the booster field joints revealed that the O-rings were eroding during flight. The joints were still sealing effectively, but the O-ring material was being eaten away by hot gases that escaped past the putty. Thiokol studied different types of putty and methods of applying it to determine their effect on O-ring erosion. The shuttle flight 51-C of January 24, 1985, was launched during some of the coldest weather in Florida history. Upon examination of the booster joints, engineers at Thiokol noticed black soot and grease on the outside of the booster casing, caused by actual gas blow-by. This prompted Thiokol to study the effects of O-ring resiliency at low temperatures. They conducted laboratory tests of O-ring compression and resiliency between 50°F and 100°F. In July 1985, Morton Thiokol ordered new steel billets which would be used for a redesigned case field joint. At the time of the accident, these new billets were not ready for Thiokol, because they take many months to manufacture.

The Night Before the Launch

Temperatures for the next launch date were predicted to be in the low 20s (°F). This prompted Alan McDonald to ask his engineers at Thiokol to prepare a presentation on the effects of cold temperature on booster performance. A teleconference was scheduled the evening before the re-scheduled launch in order to discuss the low temperature performance of the boosters. This teleconference was held between engineers and management from Kennedy Space Center, Marshall Space Flight Center in Alabama, and Morton-Thiokol in Utah. Boisjoly and another engineer, Arnie Thompson, knew this would be another opportunity to express their concerns about the boosters, but they had only a short time to prepare their data for the presentation.1

Thiokol's engineers gave an hour-long presentation, making a convincing argument that the cold weather would exacerbate the problems of joint rotation and delayed O-ring seating. The lowest temperature experienced by the O-rings in any previous mission was 53°F, on the January 24, 1985, flight. With a predicted ambient temperature of 26°F at launch, the O-rings were estimated to be at 29°F. After the technical presentation, Thiokol's Engineering Vice President Bob Lund presented the conclusions and recommendations.

His main conclusion was that 53°F was the only low temperature data Thiokol had for the effects of cold on the operational boosters. The boosters had experienced O-ring erosion at this temperature. Since his engineers had no low temperature data below 53°F, they could not prove that it was unsafe to launch at lower temperatures. He read his recommendations and commented that the predicted temperatures for the morning's launch were outside the database and that NASA should delay the launch, so the ambient temperature could rise until the O-ring temperature was at least 53°F. This confused NASA managers because the booster design specifications called for booster operation as low as 31°F. (It later came out in the investigation that Thiokol understood that the 31°F limit temperature was for storage of the booster, and that the launch temperature limit was 40°F. Because of this, dynamic tests of the boosters had never been performed below 40°F.)
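Lund's argument amounts to checking the predicted conditions against the range actually experienced in flight. A minimal Python sketch of that check follows. It uses only the temperatures quoted in this case study; the function and variable names are hypothetical and are not drawn from any Thiokol or NASA procedure.

```python
# Illustrative sketch only. The temperatures come from the case study text;
# all names below are hypothetical.

LOWEST_FLIGHT_ORING_TEMP_F = 53.0  # coldest O-ring temperature on any prior flight
PREDICTED_ORING_TEMP_F = 29.0      # estimated O-ring temperature at the planned launch


def check_against_experience(predicted_f: float, lowest_experienced_f: float) -> dict:
    """Report whether a predicted temperature lies inside the flight experience base."""
    shortfall = max(0.0, lowest_experienced_f - predicted_f)
    return {
        "inside_experience": predicted_f >= lowest_experienced_f,
        "degrees_below_experience": shortfall,
    }


result = check_against_experience(PREDICTED_ORING_TEMP_F, LOWEST_FLIGHT_ORING_TEMP_F)
print(result)
```

With the quoted figures, the predicted O-ring temperature falls 24°F below anything in the flight record. The check says nothing about whether a launch would fail; it only shows that the decision required extrapolating beyond the available data, which is the point Lund was making.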

Marshall's Solid Rocket Booster Project Manager, Larry Mulloy, commented that the data was inconclusive and challenged the engineers' logic. A heated debate went on for several minutes before Mulloy bypassed Lund and asked Joe Kilminster for his opinion. Kilminster was in management, although he had an extensive engineering background. By bypassing the engineers, Mulloy was calling for a middle-management decision, but Kilminster stood by his engineers. Several other managers at Marshall expressed their doubts about the recommendations, and finally Kilminster asked for a meeting off of the net, so Thiokol could review its data.

Boisjoly and Thompson tried to convince their senior managers to stay with their original decision not to launch. A senior executive at Thiokol, Jerald Mason, commented that a management decision was required. The managers seemed to believe the O-rings could be eroded up to one third of their diameter and still seat properly, regardless of the temperature. The data presented to them showed no correlation between temperature and the blow-by gasses which eroded the O-rings in previous missions. According to testimony by Kilminster and Boisjoly, Mason finally turned to Bob Lund and said, "Take off your engineering hat and put on your management hat." Joe Kilminster wrote out the new recommendation and went back on line with the teleconference.

The new recommendation stated that the cold was still a safety concern, but their people had found that the original data was indeed inconclusive and their "engineering assessment" was that launch was recommended, even though the engineers had no part in writing the new recommendation and refused to sign it. Alan McDonald, who was present with NASA management in Florida, was surprised to see the recommendation to launch and appealed to NASA management not to launch. NASA managers decided to approve the boosters for launch despite the fact that the predicted launch temperature was outside of their operational specifications.

During the night, temperatures dropped to as low as 8°F, much lower than had been anticipated. In order to keep the water pipes in the launch platform from freezing, safety showers and fire hoses had been turned on. Some of this water had accumulated, and ice had formed all over the platform. There was some concern that the ice would fall off of the platform during launch and might damage the heat resistant tiles on the shuttle. The ice inspection team thought the situation was of great concern, but the launch director decided to go ahead with the countdown.

Note that safety limitations on low temperature launching had to be waived and authorized by key personnel several times during the final countdown. These key personnel were not aware of the teleconference about the solid rocket boosters that had taken place the night before. At launch, the impact of ignition broke loose a shower of ice from the launch platform. Some of the ice struck the left-hand booster, and some ice was actually sucked into the booster nozzle itself by an aspiration effect. Although there was no evidence of any ice damage to the Orbiter itself, NASA analysis of the ice problem was wrong. The booster ignition transient started six hundredths of a second after the igniter fired. The aft field joint on the right-hand booster was the coldest spot on the booster: about 28°F. The booster's segmented steel casing ballooned and the joint rotated, expanding inward as it had on all other shuttle flights.

The primary O-ring was too cold to seat properly, the cold-stiffened heat resistant putty that protected the rubber O-rings from the fuel collapsed, and gases at over 5000°F burned past both O-rings across seventy degrees of arc. Eight hundredths of a second after ignition, the shuttle lifted off. Engineering cameras focused on the right-hand booster showed about nine smoke puffs coming from the booster aft field joint. Before the shuttle cleared the tower, oxides from the burnt propellant temporarily sealed the field joint before flames could escape. Fifty-nine seconds into the flight, Challenger experienced the most violent wind shear ever encountered on a shuttle mission. The glassy oxides that sealed the field joint were shattered by the stresses of the wind shear, and within seconds flames from the field joint burned through the external fuel tank. Hundreds of tons of propellant ignited, tearing apart the shuttle. One hundred seconds into the flight, the last bit of telemetry data was transmitted from the Challenger.

Issues For Discussion

The Challenger disaster raises several issues which are relevant to engineers. These issues raise many questions which may not have any definite answers, but can serve to heighten the awareness of engineers when faced with a similar situation. One of the most important issues deals with engineers who are placed in management positions. It is important that these managers not ignore their own engineering experience, or the expertise of their subordinate engineers. Often a manager, even if she has engineering experience, is not as up to date on current engineering practices as are the actual practicing engineers. She should keep this in mind when making any sort of decision that involves an understanding of technical matters. Another issue is that managers encouraged launching even though the low temperature data was insufficient.

Since there was not enough data available to make an informed decision, this was not, in their opinion, grounds for stopping a launch. This was a reversal in the thinking that went on in the early years of the space program, which discouraged launching until all the facts were known about a particular problem. This same reasoning can be traced back to an earlier phase in the shuttle program, when upper-level NASA management was alerted to problems in the booster design, yet did not halt the program until the problem was solved. To better understand the responsibility of the engineer, some key elements of the professional responsibilities of an engineer should be examined. This will be done from two perspectives: the implicit social contract between engineers and society, and the guidance of the codes of ethics of professional societies.

As engineers test designs for ever-increasing speeds, loads, capacities and the like, they must always be aware of their obligation to society to protect the public welfare. After all, the public has provided engineers, through the tax base, with the means for obtaining an education and, through legislation, the means to license and regulate themselves. In return, engineers have a responsibility to protect the safety and well-being of the public in all of their professional efforts. This is part of the implicit social contract all engineers have agreed to when they accepted admission to an engineering college.

The first canon in the ASME Code of Ethics urges engineers to "hold paramount the safety, health and welfare of the public in the performance of their professional duties." Every major engineering code of ethics reminds engineers of the importance of their responsibility to keep the safety and well-being of the public at the top of their list of priorities. Although company loyalty is important, it must not be allowed to override the engineer's obligation to the public. Marcia Baron, in an excellent monograph on loyalty, states: "It is a sad fact about loyalty that it invites...single mindedness. Single-minded pursuit of a goal is sometimes delightfully romantic, even a real inspiration. But it is hardly something to advocate to engineers, whose impact on the safety of the public is so very significant. Irresponsibility, whether caused by selfishness or by magnificently unselfish loyalty, can have most unfortunate consequences."

Annotated Bibliography and Suggested References

Feynman, Richard Phillips, What Do You Care What Other People Think?: Further Adventures of a Curious Character, Bantam Doubleday Dell Pub, ISBN 0553347845, Dec 1992.

Lewis, Richard S., Challenger: the final voyage, Columbia University Press, New York, 1988.

McConnell, Malcolm, Challenger: a major malfunction, Doubleday, Garden City, N.Y., 1987.

Trento, Joseph J., Prescription for disaster, Crown, New York, c1987.

United States. Congress. House. Committee on Science and Technology, Investigation of the Challenger accident: hearings before the Committee on Science and Technology, U.S. House of Representatives, Ninety-ninth Congress, second session .... U.S. G.P.O., Washington, 1986.

United States. Congress. House. Committee on Science and Technology, Investigation of the Challenger accident: report of the Committee on Science and Technology, House of Representatives, Ninety-ninth Congress, second session. U.S. G.P.O., Washington, 1986.

United States. Congress. House. Committee on Science, Space, and Technology, NASA's response to the committee's investigation of the "Challenger" accident: hearing before the Committee on Science, Space, and Technology, U.S. House of Representatives, One hundredth Congress, first session, February 26, 1987. U.S. G.P.O., Washington, 1987.

United States. Congress. Senate. Committee on Commerce, Science, and Transportation. Subcommittee on Science, Technology, and Space, Space shuttle accident: hearings before the Subcommittee on Science, Technology, and Space of the Committee on Commerce, Science, and Transportation, United States Senate, Ninety-ninth Congress, second session, on space shuttle accident and the Rogers Commission report, February 18, June 10, and 17, 1986. U.S. G.P.O., Washington, 1986.

1 "Challenger: A Major Malfunction." (See above) p. 194.

2 Baron, Marcia. The Moral Status of Loyalty. Illinois Institute of Technology: Center for the Study of Ethics in the Professions, 1984, p. 9. One of a series of monographs on applied ethics that deals specifically with the engineering profession. Provides arguments both for and against loyalty. 28 pages with notes and an annotated bibliography.

Department of Philosophy and Department of Mechanical Engineering, Texas A&M University. NSF Grant Number: DIR-9012252

This material is based upon work supported by the National Science Foundation under Award No. 2055332. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.

Engineering Ethics Case Study: The Challenger Disaster

Course Introduction:

This course provides instruction in engineering ethics through a case study of the Space Shuttle Challenger disaster. The minimum technical details needed to understand the physical cause of the Shuttle failure are given. The disaster itself is chronicled through NASA photographs. Next the decision-making process—especially the discussions occurring during the teleconference held on the evening before the launch—is described. Direct quotations from engineers interviewed after the disaster are used to illustrate the ambiguities of the data and the pressures that the decision-makers faced in the months and hours preceding the launch. The course culminates in an extended treatment of six ethical issues raised by Challenger.

Learning Objectives:

This course teaches the following specific knowledge and skills:

- Common errors to avoid in studying the history of an engineering failure: the retrospective fallacy and the myth of perfect engineering practice

- Shuttle hardware involved in the disaster

- Decisions made in the period preceding the launch

- Ethical issue: NASA giving first priority to public safety over other concerns

- Ethical issue: the contractor giving first priority to public safety over other concerns

- Ethical issue: whistle blowing

- Ethical issue: informed consent

- Ethical issue: ownership of company records

- Ethical issue: how the public perceives that an engineering decision involves an ethical violation

This course includes a true-false/multiple-choice quiz at the end, which is designed to highlight the general concepts of the course material.

This course is intended for all types of engineers.

The course materials are based on the pdf file, “Engineering Ethics Case Study: The Challenger Disaster.”

Credits: 4 PDH

E – 1142 – Engineering Ethics Case Study: The Challenger Disaster

- Course No E – 1142

- PDH Units 3.00

Intended Audience: all types of engineers

Learning Objectives:

- Common errors to avoid in studying the history of an engineering failure

- Retrospective fallacy and the myth of perfect engineering practice

- Shuttle hardware involved in the disaster

- Decisions made in the period preceding the launch

- Ethical issues related to: NASA and contractors giving first priority to public safety over other concerns; whistle blowing; informed consent; ownership of company records; and when and why the public can perceive an engineering decision as involving an ethical violation (and what to do about it).

Course Reviews

Great course. I especially liked the reminders that engineering does not happen in a vacuum and that things that are clear after the fact may not seem out of the ordinary during the course of day to day work.

The Challenger Disaster: A Case of Subjective Engineering

From the archives: NASA’s resistance to probabilistic risk analysis contributed to the Challenger disaster

Editor’s Note: Today is the 30th anniversary of the loss of the space shuttle Challenger, which was destroyed 73 seconds into its flight, killing all on board. To mark the anniversary, IEEE Spectrum is republishing this seminal article, which first appeared in June 1989 as part of a special report on risk. The article has been widely cited in both histories of the space program and in analyses of engineering risk management.

“Statistics don’t count for anything,” declared Will Willoughby, the National Aeronautics and Space Administration’s former head of reliability and safety during the Apollo moon landing program. “They have no place in engineering anywhere.” Now director of reliability management and quality assurance for the U.S. Navy, Washington, D.C., he still holds that risk is minimized not by statistical test programs, but by “attention taken in design, where it belongs.” His design-oriented view prevailed in NASA in the 1970s, when the space shuttle was designed and built by many of the engineers who had worked on the Apollo program.

“The real value of probabilistic risk analysis is in understanding the system and its vulnerabilities,” said Benjamin Buchbinder, manager of NASA’s two-year-old risk management program. He maintains that probabilistic risk analysis can go beyond design-oriented qualitative techniques in looking at the interactions of subsystems, ascertaining the effects of human activity and environmental conditions, and detecting common-cause failures.

NASA started experimenting with this program in response to the Jan. 28, 1986, Challenger accident that killed seven astronauts. The program’s goals are to establish a policy on risk management and to conduct risk assessments independent of normal engineering analyses. But success is slow because of past official policy that favored “engineering judgment” over “probability numbers,” resulting in NASA’s failure to collect the type of statistical test and flight data useful for quantitative risk assessment.

This Catch-22 (the agency lacks appropriate statistical data because it did not believe in the technique requiring the data, so it did not gather the relevant data) is one example of how an organization’s underlying culture and explicit policy can affect the overall reliability of the projects it undertakes.

External forces such as politics further shape an organization’s response. Whereas the Apollo program was widely supported by the President and the U.S. Congress and had all the money it needed, the shuttle program was strongly criticized and underbudgeted from the beginning. Political pressures, coupled with the lack of hard numerical data, led to differences of more than three orders of magnitude in the few quantitative estimates of a shuttle launch failure that NASA was required by law to conduct.

Some observers still worry that, despite NASA’s late adoption of quantitative risk assessment, its internal culture and its fear of political opposition may be pushing it to repeat dangerous errors of the shuttle program in the new space station program.

Basic Facts

System: National Space Transportation System (NSTS)—the space shuttle

Risk assessments conducted during design and operation: preliminary hazards analysis; failure modes and effects analysis with critical items list; various safety assessments, all qualitative at the system level, but with quantitative analyses conducted for specific subsystems.

Worst failure: In the January 1986 Challenger accident, primary and secondary O-rings in the field joint of the right solid-fuel rocket booster were burnt through by hot gases.

Consequences: loss of $3 billion vehicle and crew.

Predictability: long history of erosion in O-rings, not envisaged in the original design.

Causes: inadequate original design (booster joint rotated farther open than intended); faulty judgment (managers decided to launch despite record low temperatures and ice on launch pad); possible unanticipated external events (severe wind shear may have been a contributing factor).

Lessons learned: in design, to use probabilistic risk assessment more in evaluating and assigning priorities to risks; in operation, to establish certain launch commit criteria that cannot be waived by anyone.

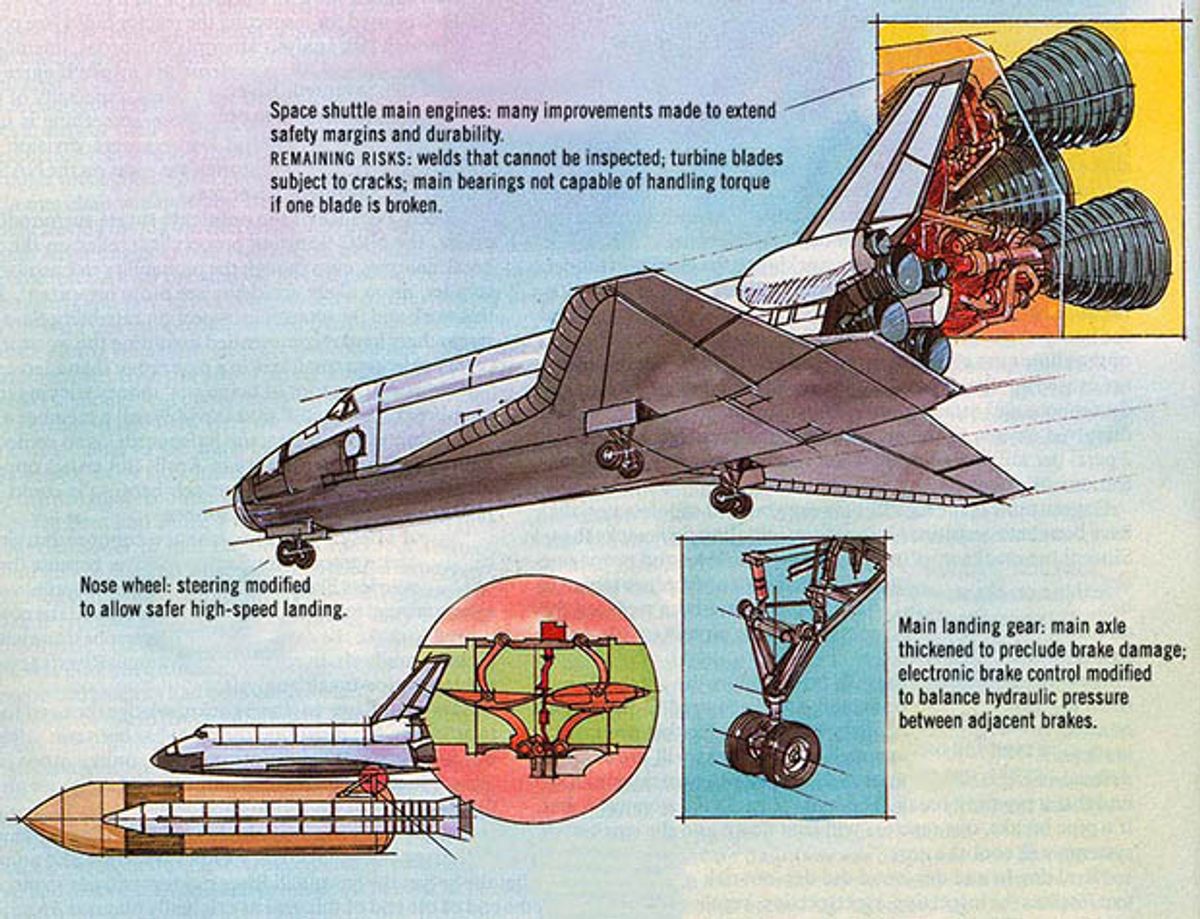

Other outcomes: redesign of booster joint and other shuttle subsystems that also had a high level of risk or unanticipated failures; reassessment of critical items.

NASA’s preference for a design approach to reliability to the exclusion of quantitative risk analysis was strengthened by a negative early brush with the field. According to Haggai Cohen, who during the Apollo days was NASA’s deputy chief engineer, NASA contracted with General Electric Co. in Daytona Beach, Fla., to do a “full numerical PRA [probabilistic risk assessment]” to assess the likelihood of success in landing a man on the moon and returning him safely to earth. The GE study indicated the chance of success was “less than 5 percent.” When the NASA Administrator was presented with the results, he felt that if made public, “the numbers could do irreparable harm, and he disbanded the effort,” Cohen said. “We studiously stayed away from [numerical risk assessment] as a result.”

“That’s when we threw all that garbage out and got down to work,” Willoughby agreed. The study’s proponents, he said, contended “‘you build up confidence by statistical test programs.’ We said, ‘No, go fly a kite, we’ll build up confidence by design.’ Testing gives you only a snapshot under particular conditions. Reality may not give you the same set of circumstances, and you can be lulled into a false sense of security or insecurity.”

As a result, NASA adopted qualitative failure modes and effects analysis (FMEA) as its principal means of identifying design features whose worst-case failure could lead to a catastrophe. The worst cases were ranked as Criticality 1 if they threatened the life of the crew members or the existence of the vehicle; Criticality 2 if they threatened the mission; and Criticality 3 for anything less. An R designated a redundant system [see “How NASA Determined Shuttle Risk”]. Quantitative techniques were limited to calculating the probability of the occurrence of an individual failure mode “if we had to present a rationale on how to live with a single failure point,” Cohen explained.
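The ranking rule described above can be sketched as a small lookup. This is only an illustration, not NASA’s actual procedure: the `FailureMode` record, its field names, the `criticality` function, and the choice to show redundancy as an `R` suffix on the level are all assumptions made for the example, and the sample failure modes are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailureMode:
    """Hypothetical record for the worst-case effect of one failure mode."""
    name: str
    threatens_crew_or_vehicle: bool
    threatens_mission: bool
    redundant: bool = False


def criticality(mode: FailureMode) -> str:
    """Rank a failure mode using the three levels described in the text."""
    if mode.threatens_crew_or_vehicle:
        level = 1
    elif mode.threatens_mission:
        level = 2
    else:
        level = 3
    # Assumption: redundancy is written as an "R" suffix on the level.
    return f"{level}R" if mode.redundant else str(level)


# Hypothetical entries, invented for illustration only.
examples = [
    FailureMode("hypothetical seal failure", True, True),
    FailureMode("hypothetical payload fault", False, True, redundant=True),
    FailureMode("hypothetical cabin light fault", False, False),
]

for mode in examples:
    print(f"{mode.name}: Criticality {criticality(mode)}")
```

Note that a ranking of this kind records only how bad the worst case is; it carries no estimate of how likely that case is, which is the gap the rest of the article discusses.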

The politics of risk

By the late 1960s and early 1970s the space shuttle was being portrayed as a reusable airliner capable of carrying 15-ton payloads into orbit and 5-ton payloads back to earth. Shuttle astronauts would wear shirtsleeves during takeoff and landing instead of the bulky spacesuits of the Gemini and Apollo days. And eventually the shuttle would carry just plain folks: non-astronaut scientists, politicians, schoolteachers, and journalists.

NASA documents show that the airline vision also applied to risk. For example, in the 1969 NASA Space Shuttle Task Group Report, the authors wrote: “It is desirable that the vehicle configuration provide for crew/passenger safety in a manner and to the degree as provided in present day commercial jet aircraft.”

Statistically an airliner is the least risky form of transportation, which implies high reliability. And in the early 1970s, when President Richard M. Nixon, Congress, and the Office of Management and Budget (OMB) were all skeptical of the shuttle, proving high reliability was crucial to the program’s continued funding.

OMB even directed NASA to hire an outside contractor to do an economic analysis of how the shuttle compared with other launch systems for cost-effectiveness, observed John M. Logsdon, director of the graduate program in science, technology, and public policy at George Washington University in Washington, D.C. “No previous space programme had been subject to independent professional economic evaluation,” Logsdon wrote in the journal Space Policy in May 1986. “It forced NASA into a belief that it had to propose a Shuttle that could launch all foreseeable payloads ... [and] would be less expensive than alternative launch systems” and that, indeed, would supplant all expendable rockets. It also was politically necessary to show that the shuttle would be cheap and routine, rather than large and risky, with respect to both technology and cost, Logsdon pointed out.

Amid such political unpopularity, which threatened the program’s very existence, “some NASA people began to confuse desire with reality,” said Adelbert Tischler, retired NASA director of launch vehicles and propulsion. “One result was to assess risk in terms of what was thought acceptable without regard for verifying the assessment.” He added: “Note that under such circumstances real risk management is shut out.”

‘Disregarding data’

By the early 1980s many figures were being quoted for the overall risk to the shuttle, with estimates of a catastrophic failure ranging from less than 1 chance in 100 to 1 chance in 100,000. “The higher figures [1 in 100] come from working engineers, and the very low figures [1 in 100,000] from management,” wrote physicist Richard P. Feynman in his appendix “Personal Observations on Reliability of Shuttle” to the 1986 Report of the Presidential Commission on the Space Shuttle Challenger Accident.

The probabilities originated in a series of quantitative risk assessments NASA was required to conduct by the Interagency Nuclear Safety Review Panel (INSRP), in anticipation of the launch of the Galileo spacecraft on its voyage to Jupiter, originally scheduled for the early 1980s. Galileo was powered by a plutonium-fueled radioisotope thermoelectric generator, and Presidential Directive/NSC-25 ruled that either the U.S. President or the director of the office of science and technology policy must examine the safety of any launch of nuclear material before approving it. The INSRP (which consisted of representatives of NASA as the launching agency, the Department of Energy, which manages nuclear devices, and the Department of Defense, whose Air Force manages range safety at launch) was charged with ascertaining the quantitative risks of a catastrophic launch dispersing the radioactive poison into the atmosphere. There were a number of studies because the upper stage for boosting Galileo into interplanetary space was reconfigured several times.

The first study was conducted by the J. H. Wiggins Co. of Redondo Beach, Calif., and published in three volumes between 1979 and 1982. It put the overall risk of losing a shuttle with its spacecraft payload during launch at between 1 chance in 1000 and 1 in 10,000. The greatest risk was posed by the solid-fuel rocket boosters (SRBs). The Wiggins author noted that the history of other solid-fuel rockets showed them as undergoing catastrophic launches somewhere between 1 time in 59 and 1 time in 34, but that the study’s contract overseers, the Space Shuttle Range Safety Ad Hoc Committee, made an “engineering judgment” and “decided that a reduction in the failure probability estimate was warranted for the Space Shuttle SRBs” because “the historical data includes motors developed 10 to 20 years ago.” The Ad Hoc Committee therefore “decided to assume a failure probability of 1 × 10⁻³ for each SRB.” In addition, the Wiggins author pointed out, “it was decided by the Ad-Hoc Committee that a second probability should be considered… which is one order of magnitude less” or 1 in 10,000, “justified due to unique improvements made in the design and manufacturing process used for these motors to achieve man rating.”

In 1983 a second study was conducted by Teledyne Energy Systems Inc., Timonium, Md., for the Air Force Weapons Laboratory at Kirtland Air Force Base, N.M. It described the Wiggins analysis as consisting of “an interesting presentation of launch data from several Navy, Air Force, and NASA missile programs and the disregarding of that data and arbitrary assignment of risk levels apparently per sponsor direction” with “no quantitative justification at all.” After reanalyzing the data, the Teledyne authors concluded that the boosters’ track record “suggest[s] a failure rate of around one-in-a-hundred.”

When risk analysis isn’t

NASA conducted its own internal safety analysis for Galileo, which was published in 1985 by the Johnson Space Center. The Johnson authors went through failure mode worksheets assigning probability levels. A fracture in the solid-rocket motor case or case joints, similar to the accident that destroyed Challenger, was assigned a probability level of 2, which a separate table defined as corresponding to a chance of 1 in 100,000 and described as “remote,” or “so unlikely, it can be assumed that this hazard will not be experienced.”

The Johnson authors’ value of 1 in 100,000 implied, as Feynman spelled out, that “one could put a Shuttle up each day for 300 years expecting to lose only one.” Yet even after the Challenger accident, NASA’s chief engineer Milton Silveira, in a hearing on the Galileo thermoelectric generator held March 4, 1986, before the U.S. House of Representatives Committee on Science and Technology, said: “We think that using a number like 10 to the minus 3, as suggested, is probably a little pessimistic.” In his view, the actual risk “would be 10 to the minus 5, and that is our design objective.” When asked how the number was deduced, Silveira replied, “We came to those probabilities based on engineering judgment in review of the design rather than taking a statistical data base, because we didn’t feel we had that.”
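Feynman’s paraphrase is a simple expected-value calculation, and it can be checked in a few lines. The sketch below assumes one launch per day, independent launches, and a constant per-launch loss probability; the function name and the 365.25-day year are choices made for this example, and the three probabilities are the figures quoted in the text.

```python
DAYS_PER_YEAR = 365.25  # assumption: average calendar year, one launch per day


def expected_losses(p_loss_per_launch: float, launches: float) -> float:
    """Expected number of vehicles lost over a given number of launches."""
    return p_loss_per_launch * launches


launches = 300 * DAYS_PER_YEAR  # one launch a day for 300 years

# Per-launch loss probabilities quoted in the article.
estimates = {
    "Johnson Space Center, 1 in 100,000": 1e-5,
    "Ad Hoc Committee, 1 in 1,000": 1e-3,
    "Teledyne reanalysis, 1 in 100": 1e-2,
}

for label, p in estimates.items():
    print(f"{label}: about {expected_losses(p, launches):.1f} expected losses")
```

Under these assumptions the 1-in-100,000 figure gives roughly one expected loss over the 300 years, which is the point of Feynman’s remark; the other two estimates scale the same count up by two and three orders of magnitude.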

After the Challenger accident, the 1986 presidential commission learned the O-rings in the field joints of the shuttle’s solid-fuel rocket boosters had a history of damage correlated with low air temperature at launch. So the commission repeatedly asked the witnesses it called to hearings why systematic temperature-correlation data had been unavailable before launch.

NASA’s “management methodology” for collection of data and determination of risk was laid out in NASA’s 1985 safety analysis for Galileo. The Johnson Space Center authors explained: “Early in the program it was decided not to use reliability (or probability) numbers in the design of the Shuttle” because the magnitude of testing required to statistically verify the numerical predictions “is not considered practical.” Furthermore, they noted, “experience has shown that with the safety, reliability, and quality assurance requirements imposed on manned spaceflight contractors, standard failure rate data are pessimistic.”

“In lieu of using probability numbers, the NSTS [National Space Transportation System] relies on engineering judgment using rigid and well-documented design, configuration, safety, reliability, and quality assurance controls,” the Johnson authors continued. This outlook determined the data NASA managers required engineers to collect. For example, no “lapsed-time indicators” were kept on shuttle components, subsystems, and systems, although “a fairly accurate estimate of time and/or cycles could be derived,” the Johnson authors added.

One reason was economic. According to George Rodney, NASA’s associate administrator of safety, reliability, maintainability and quality assurance, it is not hard to get time and cycle data, “but it’s expensive and a big bookkeeping problem.”

Another reason was NASA’s “normal program development: you don’t continue to take data; you certify the components and get on with it,” said Rodney’s deputy, James Ehl. “People think that since we’ve flown 28 times, then we have 28 times as much data, but we don’t. We have maybe three or four tests from the first development flights.”

In addition, Rodney noted, “For everyone in NASA that’s a big PRA [probabilistic risk assessment] seller, I can find you 10 that are equally convinced that PRA is oversold… [They] are so dubious of its importance that they won’t convince themselves that the end product is worthwhile.”

Risk and the organizational culture

One reason NASA has so strongly resisted probabilistic risk analysis may be the fact that “PRA runs against all traditions of engineering, where you handle reliability by safety factors,” said Elisabeth Paté-Cornell, associate professor in the department of industrial engineering and engineering management at Stanford University in California, who is now studying organizational factors and risk assessment in NASA. In addition, with NASA’s strong pride in design, PRA may be “perceived as an insult to their capabilities, that the system they’ve designed is not 100 percent perfect and absolutely safe,” she added. Thus, the character of an organization influences the reliability and failure of the systems it builds because its structure, policy, and culture determine the priorities, incentives, and communication paths for the engineers and managers doing the work, she said.

“Part of the problem is getting the engineers to understand that they are using subjective methods for determining risk, because they don’t like to admit that,” said Ray A. Williamson, senior associate at the U.S. Congress Office of Technology Assessment in Washington, D.C. “Yet they talk in terms of sounding objective and fool themselves into thinking they are being objective.”

“It’s not that simple,” Buchbinder said. “A probabilistic way of thinking is not something that most people are attuned to. We don’t know what will happen precisely each time. We can only say what is likely to happen a certain percentage of the time.” Unless engineers and managers become familiar with probability theory, they don’t know what to make of “large uncertainties that represent the state of current knowledge,” he said. “And that is no comfort to the poor decision-maker who wants a simple answer to the question, ‘Is this system safe enough?’”

As an example of how the “mindset” in the agency is now changing in favor of “a willingness to explore other things,” Buchbinder cited the new risk management program, the workshops it has been holding to train engineers and others in quantitative risk assessment techniques, and a new management instruction policy that requires NASA to “provide disciplined and documented management of risks throughout program life cycles.”

Hidden risks to the space station

NASA is now at work on its big project for the 1990s: a space station, projected to cost $30 billion and to be assembled in orbit, 220 nautical miles above the earth, from modules carried aloft in some two dozen shuttle launches. A National Research Council committee evaluated the space station program and concluded in a study in September 1987: “If the probability of damaging an Orbiter beyond repair on any single Shuttle flight is 1 percent (the demonstrated rate is now one loss in 25 launches, or 4 percent), the probability of losing an Orbiter before [the space station’s first phase] is complete is about 60 percent.”
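The committee’s statement rests on how a small per-flight risk compounds over many flights. A minimal sketch of that arithmetic follows, assuming each flight is independent and carries the same loss probability p, so that the chance of at least one loss in n flights is 1 − (1 − p)^n. The quoted passage does not say how many flights the committee counted, so the flight counts used here are illustrative, and both function names are invented for this example.

```python
import math


def prob_at_least_one_loss(p_per_flight: float, flights: int) -> float:
    """Chance of one or more losses in `flights` independent flights."""
    return 1.0 - (1.0 - p_per_flight) ** flights


def flights_to_reach(p_per_flight: float, target: float) -> int:
    """Smallest number of flights whose cumulative loss chance reaches `target`."""
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - p_per_flight))


# Illustrative case: the "some two dozen" assembly launches mentioned in the text.
for p in (0.01, 0.04):
    risk = prob_at_least_one_loss(p, 24)
    print(f"p = {p:.0%} per flight, 24 flights: {risk:.1%} chance of a loss")

# How many flights at 1 percent per flight correspond to a 60 percent chance?
print("flights needed at 1% to reach 60%:", flights_to_reach(0.01, 0.60))
```

Under the independence assumption, a 1 percent per-flight risk takes on the order of 90 flights to accumulate to 60 percent, which suggests the committee was counting more than the assembly launches alone, though the passage quoted here does not say so.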

The probability is within the right order of magnitude, to judge by the latest INSRP-mandated study completed in December for Buchbinder’s group in NASA by Planning Research Corp., McLean, Va. The study, which reevaluates the risk of the long-delayed launch of the Galileo spacecraft on its voyage to Jupiter, now scheduled for later this year, estimates the chance of losing a shuttle from launch through payload deployment at 1 in 78, or between 1 and 2 percent, with an uncertainty of a factor of 2.
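To see what the quoted uncertainty implies, the estimate can be turned into a range. The sketch below assumes that “a factor of 2” means the true value could lie anywhere from half to twice the central estimate; the text does not spell out that interpretation, and the function name is made up for the example.

```python
def factor_bounds(central: float, factor: float) -> tuple[float, float]:
    """Lower and upper bounds when an estimate is uncertain by a given factor."""
    return central / factor, central * factor


central = 1 / 78  # chance of losing a shuttle, launch through payload deployment
low, high = factor_bounds(central, 2.0)

print(f"central estimate: {central:.2%} (1 in 78)")
print(f"lower bound:      {low:.2%} (1 in {round(1 / low)})")
print(f"upper bound:      {high:.2%} (1 in {round(1 / high)})")
```

Read this way, the study’s range runs from about 1 in 156 to about 1 in 39 per launch, which brackets the 1 percent figure the NRC committee used.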

Those figures frighten some observers because of the dire consequences of losing part of the space station. “The space station has no redundancy, no backup parts,” said Jerry Grey, director of science and technology policy for the American Institute of Aeronautics and Astronautics in Washington, D.C.

The worst case would be loss of the shuttle carrying the logistics module, which is needed for reboost, Grey pointed out. The space station’s orbit will subject it to atmospheric drag such that, if not periodically boosted higher, it will drift downward and within eight months plunge back to earth and be destroyed, as was the Skylab space station in July 1979. “If you lost the shuttle with the logistics module, you don’t have a spare, and you can’t build one in eight months,” Grey said, “so you may lose not only that one payload, but also whatever was put up there earlier.”

Why are there no backup parts? “Politically the space station is under fire [from the U.S. Congress] all the time because NASA hasn’t done an adequate job of justifying it,” said Grey. “NASA is apprehensive that Congress might cancel the entire program,” he said, and so it is trying to trim costs as much as possible.

Grey estimated that spares of the crucial modules might add another 10 percent to the space station’s cost. “But NASA is not willing to go to bat for that extra because they’re unwilling to take the political risk,” he said, in what he fears is a replay of NASA’s response to the political negativism over the shuttle in the 1970s.

The NRC space station committee warned: “It is dangerous and misleading to assume there will be no losses and thus fail to plan for such events.”

“Let’s face it, space is a risky business,” commented former Apollo safety officer Cohen. “I always considered every launch a barely controlled explosion.”

“The real problem is: whatever the numbers are, acceptance of that risk and planning for it is what needs to be done,” Grey said. He fears that “NASA doesn’t do that yet.”

In addition to the sources named in the text, the authors would like to acknowledge the information and insights afforded by the following: E. William Colglazier (director of the Energy, Environment and Resources Center at the University of Tennessee, Knoxville) and Robert K. Weatherwax (president of Sierra Energy & Risk Assessment Inc., Roseville, Calif.), the two authors of the 1983 Teledyne/Air Force Weapons Laboratory study; Larry Crawford, director of reliability and trends analysis at NASA headquarters in Washington, D.C.; Joseph R. Fragola, vice president, Science Applications International Corp., New York City; Byron Peter Leonard, president, L Systems Inc., El Segundo, Calif.; George E. Mueller, former NASA associate administrator for manned spaceflight; and Marcia Smith, specialist in aerospace policy, Congressional Research Service, Washington, D.C.

This article first appeared in print in June 1989 as part of the special report “Managing Risk In Large Complex Systems” under the title “The space shuttle: a case of subjective engineering.”

How NASA Determined Shuttle Risk

At the start of the space shuttle’s design, the National Aeronautics and Space Administration defined risk as “the chance (qualitative) of loss of personnel capability, loss of system, or damage to or loss of equipment or property.” NASA accordingly relied on several techniques for determining reliability and potential design problems, concluded the U.S. National Research Council’s Committee on Shuttle Criticality Review and Hazard Analysis Audit in its January 1988 report Post-Challenger Evaluation of Space Shuttle Risk Assessment and Management. But, the report noted, the analyses did “not address the relative probabilities of a particular hazardous condition arising from failure modes, human errors, or external situations,” so did not measure risk.

A failure modes and effects analysis (FMEA) was the heart of NASA’s effort to ensure reliability, the NRC report noted. An FMEA, carried out by the contractor building each shuttle element or subsystem, was performed on all flight hardware and on ground support equipment that interfaced with flight hardware. Its chief purpose was to identify hardware critical to the performance and safety of the mission.

Items that did not meet certain design, reliability and safety requirements specified by NASA’s top management and whose failure could threaten the loss of crew, vehicle, or mission, made up a critical items list (CIL).

Although the FMEA/CIL was first viewed as a design tool, NASA now uses it during operations and management as well, to analyze problems, assess whether corrective actions are effective, identify where and when inspection and maintenance are needed, and reveal trends in failures.

Second, NASA conducted hazards analyses, performed jointly by shuttle engineers and by NASA’s safety and operations organizations. They made use of the FMEA/CIL, various design reviews, safety analyses, and other studies. They considered not only the failure modes identified in the FMEA, but also other threats posed by the mission activities, crew-machine interfaces, and the environment. After hazards and their causes were identified, NASA engineers and managers had to make one of three decisions: to eliminate the cause of each hazard, to control the cause if it could not be eliminated, or to accept the hazards that could not be controlled.

NASA also conducted an element interface functional analysis (EIFA) to look at the shuttle more nearly as a complete system. Both the FMEA and the hazards analyses concentrated only on individual elements of the shuttle: the space shuttle’s main engines in the orbiter, the rest of the orbiter, the external tank, and the solid-fuel rocket boosters. The EIFA assessed hazards at the mating of the elements.

Also to examine the shuttle as a system, NASA conducted a one-time critical functions assessment in 1978, which searched for multiple and cascading failures. The information from all these studies fed one way into an overall mission safety assessment.

The NRC committee had several criticisms. In practice, the FMEA was the sole basis for some engineering change decisions and all engineering waivers and rationales for retaining certain high-risk design features. However, the NRC report noted, hazard analyses for some important, high-risk subsystems “were not updated for years at a time even though design changes had occurred or dangerous failures were experienced.” On one procedural flow chart, the report noted, “the ‘Hazard Analysis As Required’ is a dead-end box with inputs but no output with respect to waiver approval decisions.”

The NRC committee concluded that “the isolation of the hazard analysis within NASA’s risk assessment and management process to date can be seen as reflecting the past weakness of the entire safety organization.” (T.E.B. and K.E.)

Engineering Ethics Case Study: The Challenger Disaster

This online engineering PDH course provides instruction in engineering ethics through a case study of the Space Shuttle Challenger disaster. The minimum technical details needed to understand the physical cause of the Shuttle failure are presented. The disaster itself is chronicled through NASA photographs. Next the decision-making process, especially the discussions occurring during the teleconference held on the evening before the launch, is described. Direct quotations from engineers interviewed after the disaster are used to illustrate the ambiguities of the data and the pressures that the decision-makers faced in the months and hours preceding the launch. The course culminates in an extended treatment of six ethical issues raised by Challenger.

This 3 PDH online course is intended for all engineers who are interested in gaining a better understanding of the ethical issues that led to the Challenger disaster and how they could have been avoided.

This PE continuing education course is intended to provide you with the following specific knowledge and skills:

- Common errors to avoid in studying the history of an engineering failure: the retrospective fallacy and the myth of perfect engineering practice

- Shuttle hardware involved in the disaster

- Decisions made in the period preceding the launch

- Ethical issue: NASA giving first priority to public safety over other concerns

- Ethical issue: the contractor giving first priority to public safety over other concerns

- Ethical issue: whistle blowing

- Ethical issue: informed consent

- Ethical issue: ownership of company records

- Ethical issue: how the public perceives that an engineering decision involves an ethical violation

In this professional engineering CEU course, you need to review the course document titled, "Engineering Ethics Case Study: The Challenger Disaster".

Upon successful completion of the quiz, print your Certificate of Completion instantly. (Note: if you are paying by check or money order, you will be able to print it after we receive your payment.) For your convenience, we will also email it to you. Please note that you can log in to your account at any time to access and print your Certificate of Completion.


Engineering Ethics Case Study: The Challenger Disaster Course No: LE3-001 Credit: 3 PDH

Related Papers

tee shi feng

Junichi Murata

One of the most important tasks of engineering ethics is to give engineers the tools required to act ethically and so prevent disastrous accidents that could result from their decisions and actions. The space shuttle Challenger disaster is cited as a typical case in almost every textbook. It is seen as a case from which engineers can learn important lessons, because it shows strikingly how engineers should act as professionals to prevent accidents. The Columbia disaster came seventeen years later, in 2003. According to the report of the Columbia Accident Investigation Board, the main cause of the accident was not individual actions that violated certain safety rules but was instead to be found in the history and culture of NASA. That culture is seen as one that desensitized managers and engineers to potential hazards as they dealt with problems of uncertainty. This view of the disaster is based on Diane Vaughan's analysis of the Challenger disaster, where in...

12th AIAA Aviation Technology, Integration, and Operations (ATIO) Conference and 14th AIAA/ISSMO Multidisciplinary Analysis and Optimization Conference

Taiki Matsumura

MidHath Nigar

Human beings have long been curious about their surroundings and have sought to use that knowledge to shape the environment to their benefit. This curiosity, inherent in human nature, is too strong to be satisfied by understanding the immediate environment alone. There has always been an urge to look up at the sky, to discover the mysteries the universe holds, to learn what lies beyond what our eyes can see, and to ask whether we can reach the stars. Driven by the exponential growth of science and technology, humanity undertook the so-called “Space Race” in the 20th century. It pushed the imagination and knowledge of engineers and scientists around the world to a new level in pursuit of one of the boldest objectives humanity has set for itself: sending people into space. The National Aeronautics and Space Administration (NASA) would claim victory in that race when, in 1969, it landed the first man on the Moon in the Apollo 11 mission.

Space Shuttle Challenger leaps from the launch pad. Photo Credit: NASA [1].

Space agencies were not expected to stop after this achievement. Instead, it served as motivation, pointing toward what could come if the work continued. In this context, NASA announced an ambitious project called “Space Shuttle” in 1976. The project presented the idea of a reusable crewed spacecraft able to make several return trips into space. The dream was becoming a reality: the conquest of space was under way. The first Space Shuttle flights, although carried out amid uncertainties and details still to be improved, were promising, and with that enthusiasm NASA dared to fly missions in quick succession. However, tragedy struck on the tenth flight of the Challenger orbiter, an event that marked and changed the history of space exploration forever.

The catastrophe took place on January 28th, 1986, when the Space Shuttle Challenger was destroyed only 73 seconds after launch, before the eyes of the entire organization and a large part of the American population. The mission, designated STS-51-L [2], was meant to place in orbit the second Tracking and Data Relay Satellite for American communication services. It was also to deploy SPARTAN-Halley, an astronomical platform that would observe Comet Halley, which was close to the Earth at the time. The accident claimed the lives of the seven members of the crew, including a schoolteacher who was designated to teach children about space upon returning from the mission [3]. Its impact on the public and on the enthusiasm of the scientific community was so significant that many media outlets called it “the largest accident in the conquest of space” [4].

The crew of the Space Shuttle Challenger. Photo Credit: History.com

In this essay, we study the technical and administrative factors that contributed to the failure of the Shuttle Challenger project. We examine the planning stage, the implementation of the project, and the consequences and subsequent investigations, in order to identify the lessons to be learned in both areas. By completing this task, we expect to identify the critical factors that deserve particular attention from us as students who aim to develop projects successfully.

Ramon Llull Journal of Applied Ethics

Robert E. Allinson

For the purpose of this analysis, risk assessment becomes the primary term and risk management the secondary term. The concept of risk management as a primary term is based upon a false ontology. Risk management implies that risk is already there, not created by the decision, but inherent in the situation that the decision sets into motion. The risk that already exists in the objective situation simply needs to be “managed”. By considering risk assessment as the primary term, the ethics of responsibility for risking the lives of others, the environment and future generations in the first place comes to the forefront. The issue of risk heeding is especially important, as it highlights the need to pay attention to warnings of danger and to take action to redress problems before disasters occur. In this paper, the decision making that led to the choice of technology and to its implementation in the case of the space shuttle Challenger disaster is used as a model to illustrate the need to take ethical factors into account when making decisions about the safety of technological systems and the heeding of danger warnings. While twenty-five years separate the decision to launch the Challenger and the Fukushima Daiichi nuclear plant disaster, the lessons of the Challenger disaster are still to be learned.

Eric Aluoch

abdelrahman abdelraouf

7th IET International Conference on System Safety, incorporating the Cyber Security Conference 2012

Sanjeev Appicharla

This paper presents the results of a desktop study to model and analyse the Space Shuttle Challenger Accident using the Management Oversight and Risk Tree as part of the SIRI Methodology. The study uses the NASA Summary Report of the Presidential Commission Investigations on the Space Shuttle Challenger Accident as an input document [8]. The aim of the case study is to identify all the causal factors that produced the accident. It is assumed that popular explanations of the accident are in error in blaming either the launch decision or the behaviour of managers during the pre-launch decision-making activity. The utility of the case study lies in learning all the causal factors of the given effect, the Space Shuttle Challenger Accident, which occurred on 28th January 1986, using the doctrine of causation (cause-effect reasoning) as the guiding principle [8],[12],[14],[17]. Note: The paper title contains a spelling mistake.

Musavvir Mahmud

Engineering is the science and technology used to meet the demands of society. But the resources available to engineers are limited, and knowledge of science and technology is not perfect, so catastrophic incidents sometimes take place. This report discusses the Columbia Shuttle, Tay Bridge and Japan Airlines Flight 123 disasters. Each of the three incidents is presented with a brief background followed by a brief description of the disaster. All the possible reasons behind the disasters are described, and the aftermath of each incident is discussed at the end.

