
Measurement in Nursing Research

Curtis, Alexa Colgrove PhD, MPH, FNP, PMHNP; Keeler, Courtney PhD

Alexa Colgrove Curtis is assistant dean and professor of graduate nursing and director of the MPH–DNP dual degree program and Courtney Keeler is an associate professor, both at the University of San Francisco School of Nursing and Health Professions. Contact author: Alexa Colgrove Curtis, [email protected] . Nursing Research, Step by Step is coordinated by Bernadette Capili, PhD, NP-C: [email protected] . The authors have disclosed no potential conflicts of interest, financial or otherwise. A podcast with the authors is available at www.ajnonline.com .

Editor's note: This is the fourth article in a series on clinical research by nurses. The series is designed to give nurses the knowledge and skills they need to participate in research, step by step. Each column will present the concepts that underpin evidence-based practice—from research design to data interpretation. The articles will be accompanied by a podcast offering more insight and context from the authors. To see all the articles in the series, go to https://links.lww.com/AJN/A204 .

Quantitative research examines associations between research variables as measured through numerical analysis, where study effects (outcomes) are analyzed using statistical techniques. Such techniques include descriptive statistics (for example, sample mean and standard deviation) and inferential statistics, which use the laws of probability to evaluate for statistically significant differences between sample groups (for example, t test, ANOVA, and regression analysis). Qualitative research explores research questions through an analysis of nonnumerical data sources (for example, text sources collected directly or indirectly by the researcher) and reports outcomes as themes or concepts that describe a phenomenon or experience.

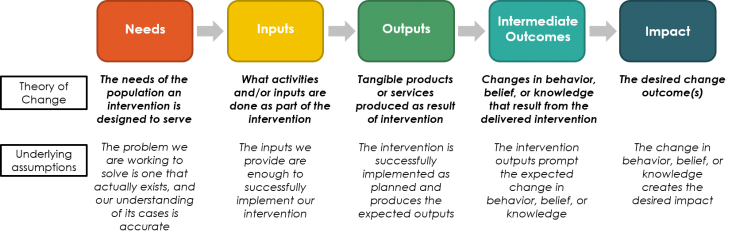

As described in the first installment of this series, “a common goal of clinical research is to understand health and illness and to discover novel methods to detect, diagnose, treat, and prevent disease”; with this in mind, research questions must “focus on clear approaches to measuring or quantifying change or outcome,” the research outcome being the “planned measure to determine the effect of an intervention on the population under study.” 1

In this article, we explore measurement in quantitative research. We will also consider the concepts of validity and reliability as they relate to quantitative research measurement. Qualitative analysis will be considered separately in a future article in this series, as this methodology does not typically use a prescribed mechanism for measurement of research variables.

DEFINING THE VARIABLE OF INTEREST

Measurement in research begins with defining the variables of interest. Often, researchers are interested in exploring how variation in one factor or phenomenon influences variation in another. The dependent variables (outcome variables) in a study reflect the primary phenomenon of interest and the independent variables (or explanatory variables) reflect the factors that are hypothesized to have an impact on the primary phenomenon of interest (the dependent variable). 2 For example, a researcher might rightly hypothesize that body mass index (BMI) influences blood pressure, further hypothesizing that increases in BMI are associated with increases in blood pressure. In a study testing this hypothesis, blood pressure is the dependent variable and BMI is an independent variable.

In identifying the variables of interest in a study, researchers are likely to have ideas of concepts they would like to explore. In the example above, one concept the researcher is interested in is weight. A conceptual definition of a research variable provides a general theoretical understanding of that variable; regarding weight, a person might be considered “thin” or “overweight.” Nevertheless, in moving from theory to practice, the researcher must consider how to operationalize this theoretical definition; that is, the researcher needs to select specific mechanisms for measuring the prescribed variables conceptualized in the study. Thus, an operational definition provides a measurable definition of a variable. Continuing with the above example, BMI would be a means of operationalizing the weight variable, where a person with a BMI of 25 or above is categorized by the Centers for Disease Control and Prevention as overweight. 3 In operationalizing variables, first look in existing evidence-based literature, practices, and professional guidelines. For instance, the researcher might consider measuring depression using the validated and widely utilized Patient Health Questionnaire-9 (PHQ-9) depression assessment scale or assessing longitudinal hyperglycemic risk by using the accepted measurement of glycated hemoglobin (HbA1c) level.
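The step from a conceptual definition ("overweight") to an operational one (a BMI cut point) can be sketched in a few lines of Python. This is an illustrative sketch only: the function names are hypothetical, the cut points assume the CDC adult weight-status categories (which split the "25 or above" range into overweight and obesity), and the participant values are made up.

```python
def bmi(weight_kg: float, height_m: float) -> float:
    """Body mass index: weight in kilograms divided by height in metres squared."""
    if weight_kg <= 0 or height_m <= 0:
        raise ValueError("weight and height must be positive")
    return weight_kg / height_m ** 2


def weight_category(bmi_value: float) -> str:
    """Map a BMI value onto adult weight-status categories (assumed CDC cut points)."""
    if bmi_value < 18.5:
        return "underweight"
    if bmi_value < 25:
        return "healthy weight"
    if bmi_value < 30:
        return "overweight"
    return "obesity"


# Hypothetical participant: 80 kg, 1.75 m tall.
participant_bmi = bmi(weight_kg=80.0, height_m=1.75)
print(round(participant_bmi, 1), weight_category(participant_bmi))
```

Writing the rule down this explicitly makes the operational definition reproducible: another researcher applying the same function to the same data would classify every participant the same way.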

MEASUREMENT TOOLS

Researchers rely on measurement tools and instruments to create quantitative assessments of the variables studied. In some cases, direct measurements can be made using biometric measurement instruments to collect physiologic data such as weight, blood pressure, oxygen saturation level, and serum laboratory values. These biometric assessments are considered direct measures. 4 To quantify more abstract concepts, such as mood states, attitudes, and theoretical concepts like “caring,” researchers must consider less obvious proxy measures. Proxy measures constructed to quantify more abstract concepts are considered indirect measures. 4 For instance, Hughes developed an instrument to assess peer group caring during informal peer interactions among undergraduate nursing students. 5 While unable to directly measure the theoretical concept of caring, Hughes was able to construct an indirect proxy assessment using a survey tool.

Indirect, and even direct, measures can be operationalized in several ways. For instance, a researcher may consider operationalizing the concept of depression using the PHQ-9 depression assessment scale, using the Center for Epidemiological Studies Depression scale, or by applying Diagnostic and Statistical Manual of Mental Disorders, Fifth Edition, diagnostic criteria. The study findings may be affected by how a variable is operationalized and which measurement tools are utilized; therefore, researchers should give serious thought to study objectives, sample/target populations, and other important considerations when operationalizing a variable. More specifics on measurement formats and methods of administration will be explored in the next installment of this series.

LEVELS OF MEASUREMENT

Levels of measurement describe the structure of a variable (see Table 1).

| Variable Type | Definition | Examples |
|---|---|---|
| Nominal | Data are grouped into distinct and exclusive categories that cannot be rank ordered. | Gender identity, race/ethnicity, occupation, geographic location, clinical diagnosis |
| Ordinal | Data are categorized into distinct and exclusive groups that can be placed in rank order. | Likert-type scale responses, 0-to-10-point pain scale |
| Interval | Data reflect a chronological sequence with equal distances between data points across a continuum but do not contain a true zero value (a zero value does not make sense). | Ambient temperature in degrees Fahrenheit |
| Ratio | Data are measured continuously with equal spacing between intervals and include a true zero value. | Height, weight, heart rate, serum laboratory values |

Nominal level. The lowest form of measurement, the nominal level groups data into distinct and exclusive categories that cannot be rank ordered. 2 Gender identity, race/ethnicity, occupation, geographic location, and clinical diagnoses are all examples of categories that contain nominal level data. This type of variable may also be referred to as a categorical variable. 6

Ordinal level data can also be categorized into distinct and exclusive groups; however, unlike nominal data, ordinal data can be ordered by rank. Likert-type scale variables reflect a classic example of ordinal level data, where responses can be rank ordered by “strongly disagree,” “disagree,” “neutral,” “agree,” and “strongly agree.” The 0-to-10-point pain scale is another example of the ordinal level of measurement. Using this scale, a patient provides a subjective determination of the experience of pain, where 0 reflects no pain and 10 reflects the worst possible pain. As with all ordinal data, the precise quantitative distance between the descriptor data points is impossible to assess: the difference between a pain determination of 3 and one of 4 cannot be precisely calculated, nor can the difference between a determination of 7 and one of 8. Further, the distance between each pain level (for example, jumping from a pain level of 3 to 4 or from a pain level of 7 to 8) is not assumed to be incrementally or objectively equal. 2 Despite these drawbacks, ordinal level data are frequently translated into a numerical expression so they can be analyzed as interval or ratio data. For example, a Likert scale can be translated into a scale ranging from “strongly disagree = 1” to “strongly agree = 5,” allowing for the calculation of a numerical mean satisfaction score.
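The translation of Likert responses into numbers can be sketched as follows. The coding dictionary mirrors the 1-to-5 scheme described above; the helper name `code_responses` and the five sample responses are made up for illustration. The median is printed alongside the mean because it relies only on rank order and so does not assume equal spacing between response options.

```python
from statistics import mean, median

# Numeric codes for a five-point Likert-type item (1 = strongly disagree).
LIKERT_CODES = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}


def code_responses(responses):
    """Translate text responses into their numeric codes."""
    return [LIKERT_CODES[response.strip().lower()] for response in responses]


# Made-up responses from five participants.
responses = ["agree", "strongly agree", "neutral", "agree", "disagree"]
scores = code_responses(responses)

print(scores)
print(mean(scores))    # treats the codes as if they were interval data
print(median(scores))  # uses only the rank order of the codes
```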

Interval level data reflect a chronological sequencing of data points with distances that are assumed to be quantifiable and equal in magnitude, such as ambient temperature. As with ambient temperature measured in degrees Fahrenheit, the magnitude of the chronological difference between each data point is assumed to be equal along a continuum of continuous values. Of note, interval data do not include a true and meaningful zero, the total absence of the characteristic being measured. 2 For example, there is no such thing as the absence of temperature.
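A small numerical sketch can show what the missing true zero means in practice. The temperatures below are arbitrary illustrative values, and the conversion to degrees Celsius is used only as a second interval scale for comparison. Ratios of the readings change when the unit changes, so a statement such as "twice as warm" is not meaningful, whereas ratios of differences are preserved.

```python
def fahrenheit_to_celsius(deg_f: float) -> float:
    """Convert a temperature from degrees Fahrenheit to degrees Celsius."""
    return (deg_f - 32.0) * 5.0 / 9.0


# Three arbitrary ambient temperatures in degrees Fahrenheit.
low_f, mid_f, high_f = 40.0, 60.0, 80.0
low_c, mid_c, high_c = (fahrenheit_to_celsius(t) for t in (low_f, mid_f, high_f))

# Ratios of raw readings depend on where the scale places its zero.
ratio_f = high_f / low_f
ratio_c = high_c / low_c

# Ratios of differences are the same on both scales.
diff_ratio_f = (high_f - low_f) / (mid_f - low_f)
diff_ratio_c = (high_c - low_c) / (mid_c - low_c)

print(ratio_f, round(ratio_c, 2))
print(diff_ratio_f, round(diff_ratio_c, 2))
```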

Ratio level data provide the final and most robust level of measurement. Ratio level data are measured continuously, with equal spacing between intervals and with a true zero. Examples include height, weight, heart rate, and serum laboratory values. A zero value is interpreted as the absence of the characteristic. Once again, researchers should be cautious in defining how a variable is operationalized because the level of measurement will influence the types of statistical analyses that can be performed in the evaluation of study outcomes. Interval and ratio levels of measurement result in the most robust statistical analyses and research results. Statistical analysis techniques will be discussed in more detail later in this series.

MEASUREMENT ERROR

For variables to provide a meaningful and appropriate representation of the underlying concept being measured, data measurement needs to be accurate and precise. Measurement error reflects the difference between the measured and true value of the underlying concept. The value of an individual measurement can be described as follows 7 :

individual measurement = exact value + bias + chance error

Chance error (or random error) changes from measurement to measurement, while bias (or systematic error) influences “all measurements the same way, pushing them in the same direction.” 7 Chance errors are individually unpredictable and inconsistent and in the long run should cancel each other out. If there is no bias in one's measurement, the average of repeated measurements should therefore converge on the exact value. Bias, however, is inherent in all models and causes a systematic deviation from the true, underlying value that does not average out.

Weight offers an excellent example of measurement error. Suppose some patients are weighed in the morning, some in the afternoon; some wear coats while others do not; some have eaten while others have fasted, and so forth. This variation reflects random error—we'd expect this positive and negative, over- and underestimation, to average out once enough patients have been sampled. Further, suppose the scale is incorrectly calibrated, such that it reports that every person weighs five pounds more than her or his actual weight. This result reflects a positive bias in the estimates and will not be corrected no matter how many patients are sampled.
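The scale example can be simulated to show how the two kinds of error behave as the sample grows. This is a simplified sketch under stated assumptions: every simulated weighing uses the same true weight, chance error is drawn from a normal distribution with a standard deviation of two pounds, and the names and numbers are illustrative rather than taken from any study.

```python
import random
from statistics import mean

random.seed(42)  # fixed seed so the sketch is repeatable

TRUE_WEIGHT_LB = 160.0  # assumed exact value
SCALE_BIAS_LB = 5.0     # miscalibrated scale reads five pounds heavy
CHANCE_SD_LB = 2.0      # assumed spread of chance error (clothing, meals, time of day)


def weigh(true_weight, bias=0.0, chance_sd=CHANCE_SD_LB):
    """One measurement: exact value plus bias plus chance error."""
    return true_weight + bias + random.gauss(0.0, chance_sd)


n = 10_000
unbiased = [weigh(TRUE_WEIGHT_LB) for _ in range(n)]
biased = [weigh(TRUE_WEIGHT_LB, bias=SCALE_BIAS_LB) for _ in range(n)]

# Chance error averages out; the calibration bias remains.
print(round(mean(unbiased) - TRUE_WEIGHT_LB, 2))
print(round(mean(biased) - TRUE_WEIGHT_LB, 2))
```

With enough weighings, the first difference should sit close to zero and the second close to five pounds, which is the pattern the paragraph above describes.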

The potential for bias resulting from measurement error falls broadly under the category of information bias —are researchers measuring what they think they are measuring? Information bias is present if the study data collected are somehow incorrect. 8 This can occur because of faulty measurement practices that systematically result in the under- or overvaluation of a measure, as described in the scale example above, or because of systematic misreporting by respondents. There are many forms of information bias, including recall bias, interviewer bias, and misclassification, as well as systematic differences in soliciting, recording, and interpreting information. 8 For instance, consider a study of adolescent sexual behavior in which adolescents are interviewed in the home with parents or guardians present. One might assume that adolescents in these circumstances would underreport the number of sexual partners they have had; as a result, one might expect this systematic underreporting to represent a downward bias in the data collected.

Other forms of bias exist, such as selection bias (if study participants are systematically different from the target population, or population of interest). Selection bias, for instance, does not necessarily affect the internal validity of the study (the ability to collect valid data) but may affect the external validity of the study (can researchers truly generalize findings to the population of interest?). These forms of bias will be described in further detail elsewhere in this series.

Measurement error is study and model specific; it comes in many forms and the type of error affects the level or form of bias. In interpreting results and designing research, researchers need to be aware of potential measurement error, do what they can to minimize bias, and provide a thorough assessment of bias in presenting the limitations of their work.

VALIDITY AND RELIABILITY

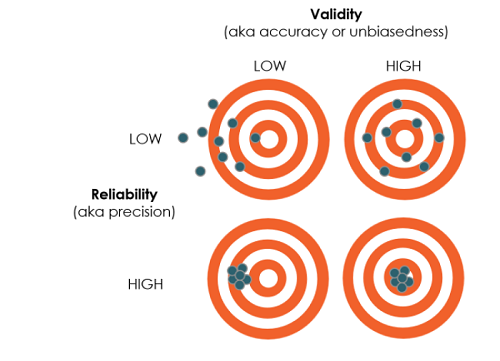

Validity refers to the degree to which a measurement accurately represents the underlying concept being measured. Basically, does the test operate as designed? Researchers need to consider the validity of use of the measurement instrument within the context of specific populations. For instance, in Hughes's study of caring among peer groups in undergraduate nursing populations, the author not only had to ensure that her instrument accurately measured caring among peer groups but also needed to verify that this measurement was accurate in nursing undergraduate populations. 5 In this instance, Hughes developed the survey explicitly with undergraduates in mind, making the second point easier to achieve.

Suppose, however, that a researcher wanted to use a version of the survey to gauge peer caring among nursing faculty. Would this be appropriate? Not without first assessing the validity of the survey within the new sample. The validity of an instrument can be assessed in several ways: by having the instrument reviewed by a content expert, comparing the instrument's results with those from an alternative assessment metric, assessing how well the instrument predicts current or future performance for the concept under consideration, and running a factor analysis (a statistical procedure that compares items or subscales within an instrument with each other and with the overall instrument outcome).

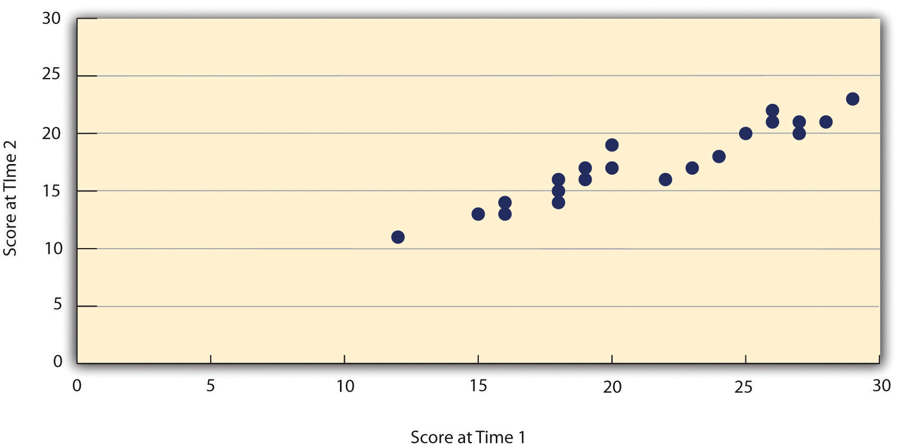

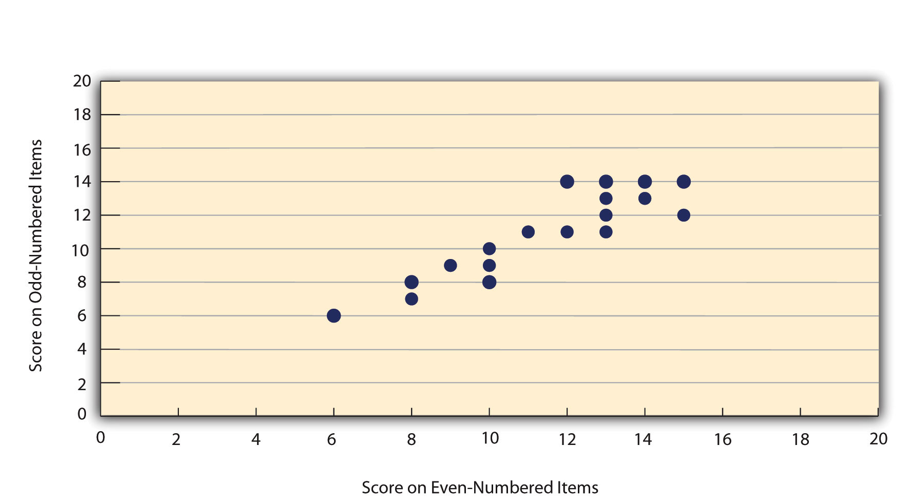

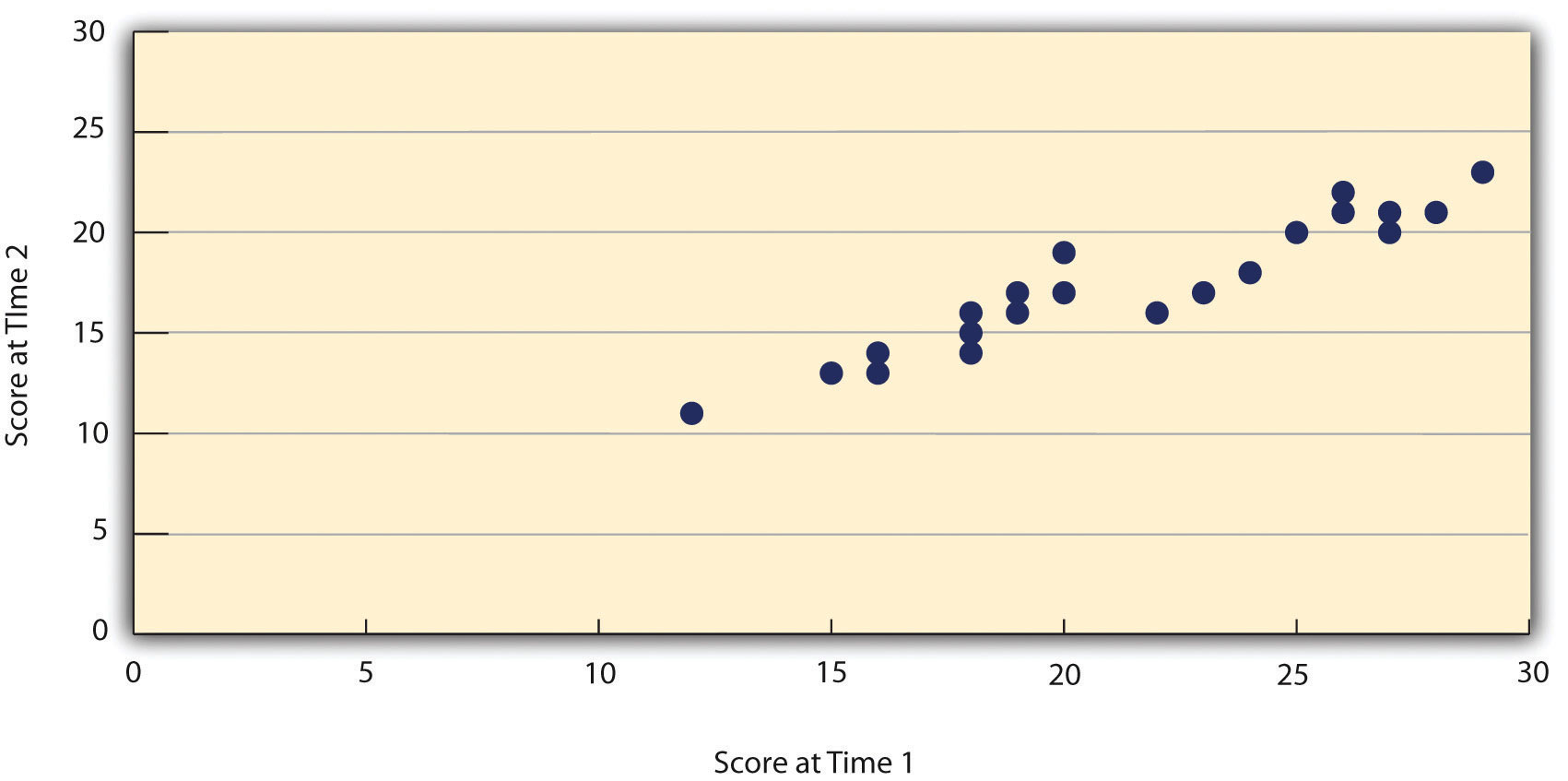

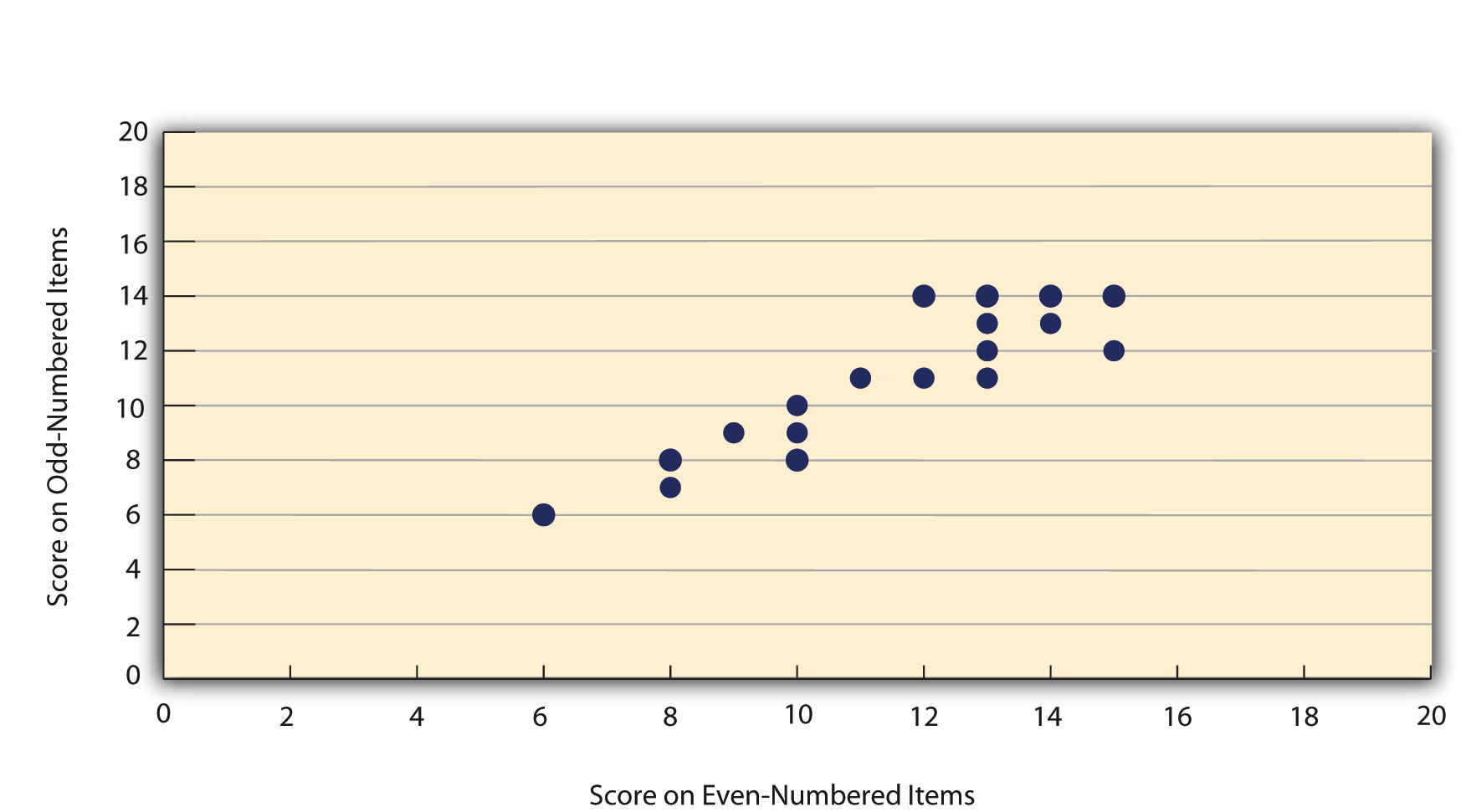

Reliability and validity go hand in hand. Reliability reflects the consistency of a measurement tool in reporting variable data. An instrument must be reliable to be valid. The reliability of an instrument can be gauged in three ways:

- stability (the consistency of outcomes with repeated implementation)

- interrater reliability (the consistency between different evaluators)

- internal consistency (the homogeneity of items within a scale as they relate to the measurement of the concept under investigation)

Cronbach α is a statistical procedure to assess instrument reliability by determining the internal consistency of items on a multi-item scale. 4, 9 Internal consistency evaluations examine how closely items on a scale represent the outcome concept under evaluation. Cronbach α scores range from 0.00 to 1.00—the higher the score the better the internal consistency. An acceptable Cronbach α as an evaluation of instrument reliability is often considered to be 0.70; however, a score of 0.80 or higher is preferable.
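For readers who want to see the calculation, the sketch below applies the standard formula for Cronbach α, α = (k / (k − 1)) × (1 − sum of item variances / variance of total scores), where k is the number of items. The function name and the response matrix are made up for illustration; a real reliability assessment would use a far larger sample.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach alpha for rows of respondents, each holding k item scores."""
    k = len(item_scores[0])
    if k < 2:
        raise ValueError("at least two items are required")
    if any(len(row) != k for row in item_scores):
        raise ValueError("every respondent must answer every item")

    # Sample variance of each item (column) across respondents.
    item_variances = [variance(column) for column in zip(*item_scores)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in item_scores])
    if total_variance == 0:
        raise ValueError("total scores do not vary; alpha is undefined")

    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Made-up data: five respondents answering a four-item scale scored 1 to 5.
responses = [
    [4, 5, 4, 5],
    [2, 2, 3, 2],
    [3, 3, 3, 4],
    [5, 4, 5, 5],
    [1, 2, 1, 2],
]

alpha = cronbach_alpha(responses)
print(round(alpha, 3))
```

In this toy data set the items rise and fall together across respondents, so the resulting α falls above the 0.80 level described above as preferable.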

When choosing a measurement instrument for quantitative research, it is best to select one that has documented validity and reliability; alternatively, the researcher may independently complete and describe an assessment of the instrument's validity and reliability. Evaluation of a research study prior to practice implementation should also include assessment of the validity and reliability of the measurement instrument employed, which should be described within the research article.

This installment of AJN's nursing research series explores how to measure both research outcomes and factors that are hypothesized to influence outcomes. Careful selection of measurement instruments will enhance the accuracy of research and maximize the ability of the research findings to meaningfully inform nursing practice and improve the well-being of patient populations. The next article in this series will further explore the selection and utilization of measurement instruments in the design and execution of nursing research.


10.1 What is measurement?

Learning objectives.

Learners will be able to…

- Define measurement

- Explain where measurement fits into the process of designing research

- Apply Kaplan’s three categories to determine the complexity of measuring a given variable

Pre-awareness check (Knowledge)

What do you already know about measuring key variables in your research topic?

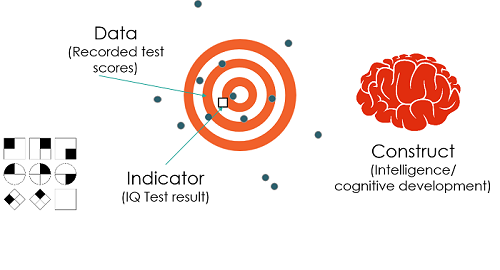

In social science, when we use the term measurement, we mean the process by which we describe and ascribe meaning to the key facts, concepts, or other phenomena that we are investigating. In this chapter, we’ll use the term “concept” to mean an abstraction that has meaning. Concepts can be understood from our own experiences or from particular facts, but they don’t have to be limited to real-life phenomena. We can have a concept of anything we can imagine or experience such as weightlessness, friendship, or income. Understanding exactly what our concepts mean is necessary in order to measure them.

In research, measurement is a systematic procedure for assigning scores, meanings, and descriptions to concepts so that those scores represent the characteristic of interest. Social scientists can and do measure just about anything you can imagine observing or wanting to study. Of course, some things are easier to observe or measure than others.

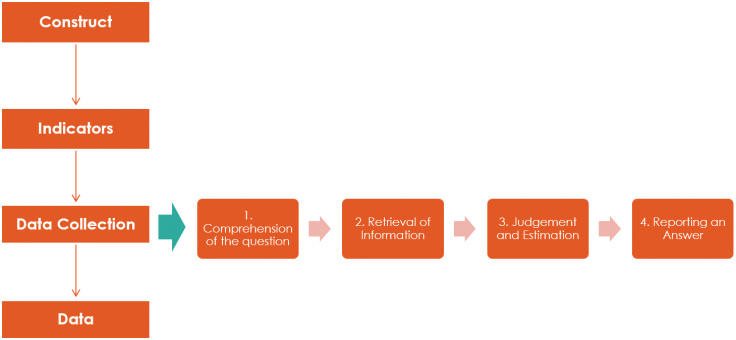

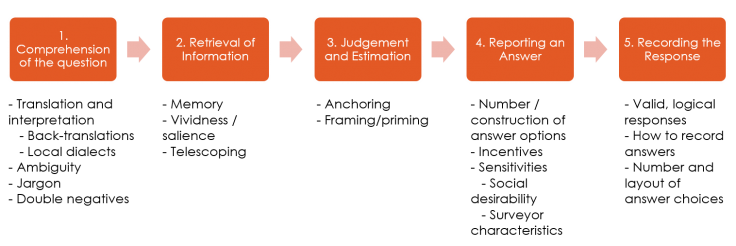

Where does measurement fit in the process of designing research?

Table 10.1 is intended as a partial review and outlines the general process researchers can follow to get from problem formulation to data collection, including measurement. Keep in mind that this process is iterative. For example, you may find something in your literature review that leads you to refine your conceptualizations, or you may discover as you attempt to conceptually define your terms that you need to return to the literature for further information. Accordingly, this table should be seen as a suggested path to take rather than an inflexible rule about how research must be conducted.

Table 10.1. Components of the Research Process from Problem Formulation to Data Collection. Note. Information on attachment theory in this table came from: Bowlby, J. (1978). Attachment theory and its therapeutic implications. Adolescent Psychiatry, 6, 5-33.

Categories of concepts that social scientists measure

In 1964, philosopher Abraham Kaplan [1] wrote The Conduct of Inquiry, which has been cited over 8,500 times. [2] In his text, Kaplan describes different categories of things that behavioral scientists observe. One of those categories, which Kaplan called “observational terms” (also referred to here as direct observables), is probably the simplest to measure in social science. Observational terms are simple concepts. They are the sorts of things that we can see with the naked eye simply by looking at them. Kaplan roughly defines them as concepts that are easy to identify and verify through direct observation. If, for example, we wanted to know how the conditions of playgrounds differ across different neighborhoods, we could directly observe the variety, amount, and condition of equipment at various playgrounds.

Indirect observables, on the other hand, are less straightforward concepts to assess. In Kaplan’s framework, they are subtle and complex conditions that we must use existing knowledge and intuition to define. If we conducted a study for which we wished to know a person’s income, we’d probably have to ask them their income, perhaps in an interview or a survey. Thus, we have observed income, even if it has only been observed indirectly. Birthplace might be another indirect observable. We can ask study participants where they were born, but chances are good we won’t have directly observed any of those people being born in the locations they report.

Sometimes the concepts that we are interested in are more complex and more abstract than observational terms or indirect observables. These are constructs. Because they are complex, constructs generally consist of more than one concept. Let’s take, for example, the construct “bureaucracy.” We know this term has something to do with hierarchy, organizations, and how they operate, but measuring such a construct is trickier than measuring something like a person’s income because of the complexity involved. Here’s another construct: racism. What is racism? How would you measure it? Racism and bureaucracy are constructs whose meanings we have come to agree on.

Though we may not be able to observe constructs directly, we can observe their components. In Kaplan’s categorization, constructs are concepts that are “not observational either directly or indirectly” (Kaplan, 1964, p. 55), [3] but they can be defined based on observables. An example would be measuring the construct of depression. A diagnosis of depression can be made using the DSM-5, which includes diagnostic criteria such as fatigue and poor concentration. Each of these components of depression can be observed indirectly. We are able to measure constructs by defining them in terms of what we can observe.

TRACK 1 (IF YOU ARE CREATING A RESEARCH PROPOSAL FOR THIS CLASS):

Look at the variables in your research question.

- Classify them as direct observables, indirect observables, or constructs.

- Do you think measuring them will be easy or hard?

- What are your first thoughts about how to measure each variable? No wrong answers here, just write down a thought about each variable.

TRACK 2 (IF YOU AREN’T CREATING A RESEARCH PROPOSAL FOR THIS CLASS):

You are interested in studying older adults’ social-emotional well-being. Specifically, you would like to research the impact on levels of older adult loneliness of an intervention that pairs older adults living in assisted living communities with university student volunteers for a weekly conversation.

Develop a working research question for this topic. Then, look at the variables in your research question.

[1] Kaplan, A. (1964). The conduct of inquiry: Methodology for behavioral science. San Francisco, CA: Chandler Publishing Company.

[2] Earl Babbie offers a more detailed discussion of Kaplan’s work in his text. You can read it in: Babbie, E. (2010). The practice of social research (12th ed.). Belmont, CA: Wadsworth.

[3] Kaplan, A. (1964). The conduct of inquiry: Methodology for behavioral science. San Francisco, CA: Chandler Publishing Company.

Measurement: The process by which we describe and ascribe meaning to the key facts, concepts, or other phenomena under investigation in a research study.

Observational terms (direct observables): In measurement, conditions that are easy to identify and verify through direct observation.

Indirect observables: Things that require subtle and complex observations to measure, and that we may need existing knowledge and intuition to define.

Constructs: Conditions that are not directly observable and represent states of being, experiences, and ideas.

Doctoral Research Methods in Social Work Copyright © by Mavs Open Press. All Rights Reserved.


- Review Article

- Published: 01 June 2023

Data, measurement and empirical methods in the science of science

Lu Liu, Benjamin F. Jones (ORCID: orcid.org/0000-0001-9697-9388), Brian Uzzi (ORCID: orcid.org/0000-0001-6855-2854) & Dashun Wang (ORCID: orcid.org/0000-0002-7054-2206)

Nature Human Behaviour, volume 7, pages 1046–1058 (2023)


The advent of large-scale datasets that trace the workings of science has encouraged researchers from many different disciplinary backgrounds to turn scientific methods into science itself, cultivating a rapidly expanding ‘science of science’. This Review considers this growing, multidisciplinary literature through the lens of data, measurement and empirical methods. We discuss the purposes, strengths and limitations of major empirical approaches, seeking to increase understanding of the field’s diverse methodologies and expand researchers’ toolkits. Overall, new empirical developments provide enormous capacity to test traditional beliefs and conceptual frameworks about science, discover factors associated with scientific productivity, predict scientific outcomes and design policies that facilitate scientific progress.


Scientific advances are a key input to rising standards of living, health and the capacity of society to confront grand challenges, from climate change to the COVID-19 pandemic 1 , 2 , 3 . A deeper understanding of how science works and where innovation occurs can help us to more effectively design science policy and science institutions, better inform scientists’ own research choices, and create and capture enormous value for science and humanity. Building on these key premises, recent years have witnessed substantial development in the ‘science of science’ 4 , 5 , 6 , 7 , 8 , 9 , which uses large-scale datasets and diverse computational toolkits to unearth fundamental patterns behind scientific production and use.

The idea of turning scientific methods into science itself is long-standing. Since the mid-20th century, researchers from different disciplines have asked central questions about the nature of scientific progress and the practice, organization and impact of scientific research. Building on these rich historical roots, the field of the science of science draws upon many disciplines, ranging from information science to the social, physical and biological sciences to computer science, engineering and design. The science of science closely relates to several strands and communities of research, including metascience, scientometrics, the economics of science, research on research, science and technology studies, the sociology of science, metaknowledge and quantitative science studies 5 . There are noticeable differences between some of these communities, mostly around their historical origins and the initial disciplinary composition of researchers forming these communities. For example, metascience has its origins in the clinical sciences and psychology, and focuses on rigour, transparency, reproducibility and other open science-related practices and topics. The scientometrics community, born in library and information sciences, places a particular emphasis on developing robust and responsible measures and indicators for science. Science and technology studies engage the history of science and technology, the philosophy of science, and the interplay between science, technology and society. The science of science, which has its origins in physics, computer science and sociology, takes a data-driven approach and emphasizes questions on how science works. Each of these communities has made fundamental contributions to understanding science. While they differ in their origins, these differences pale in comparison to the overarching, common interest in understanding the practice of science and its societal impact.

Three major developments have encouraged rapid advances in the science of science. The first is in data 9 : modern databases include millions of research articles, grant proposals, patents and more. This windfall of data traces scientific activity in remarkable detail and at scale. The second development is in measurement: scholars have used data to develop many new measures of scientific activities and examine theories that have long been viewed as important but difficult to quantify. The third development is in empirical methods: thanks to parallel advances in data science, network science, artificial intelligence and econometrics, researchers can study relationships, make predictions and assess science policy in powerful new ways. Together, new data, measurements and methods have revealed fundamental new insights about the inner workings of science and scientific progress itself.

With multiple approaches, however, comes a key challenge. As researchers adhere to norms respected within their disciplines, their methods vary, with results often published in venues with non-overlapping readership, fragmenting research along disciplinary boundaries. This fragmentation challenges researchers’ ability to appreciate and understand the value of work outside of their own discipline, much less to build directly on it for further investigations.

Recognizing these challenges and the rapidly developing nature of the field, this paper reviews the empirical approaches that are prevalent in this literature. We aim to provide readers with an up-to-date understanding of the available datasets, measurement constructs and empirical methodologies, as well as the value and limitations of each. Owing to space constraints, this Review does not cover the full technical details of each method, referring readers to related guides to learn more. Instead, we will emphasize why a researcher might favour one method over another, depending on the research question.

Beyond a positive understanding of science, a key goal of the science of science is to inform science policy. While this Review mainly focuses on empirical approaches, with its core audience being researchers in the field, the studies reviewed are also germane to key policy questions. For example, what is the appropriate scale of scientific investment, in what directions and through what institutions 10 , 11 ? Are public investments in science aligned with public interests 12 ? What conditions produce novel or high-impact science 13 , 14 , 15 , 16 , 17 , 18 , 19 , 20 ? How do the reward systems of science influence the rate and direction of progress 13 , 21 , 22 , 23 , 24 , and what governs scientific reproducibility 25 , 26 , 27 ? How do contributions evolve over a scientific career 28 , 29 , 30 , 31 , 32 , and how may diversity among scientists advance scientific progress 33 , 34 , 35 ? These are among many questions relevant to science policy 36 , 37 .

Overall, this review aims to facilitate entry to science of science research, expand researcher toolkits and illustrate how diverse research approaches contribute to our collective understanding of science. Section 2 reviews datasets and data linkages. Section 3 reviews major measurement constructs in the science of science. Section 4 considers a range of empirical methods, focusing on one study to illustrate each method and briefly summarizing related examples and applications. Section 5 concludes with an outlook for the science of science.

Historically, data on scientific activities were difficult to collect and were available in limited quantities. Gathering data could involve manually tallying statistics from publications 38 , 39 , interviewing scientists 16 , 40 , or assembling historical anecdotes and biographies 13 , 41 . Analyses were typically limited to a specific domain or group of scientists. Today, massive datasets on scientific production and use are at researchers’ fingertips 42 , 43 , 44 . Armed with big data and advanced algorithms, researchers can now probe questions previously not amenable to quantification and with enormous increases in scope and scale, as detailed below.

Publication datasets cover papers from nearly all scientific disciplines, enabling analyses of both general and domain-specific patterns. Commonly used datasets include the Web of Science (WoS), PubMed, CrossRef, ORCID, OpenCitations, Dimensions and OpenAlex. Datasets incorporating papers’ text (CORE) 45 , 46 , 47 , data entities (DataCite) 48 , 49 and peer review reports (Publons) 33 , 50 , 51 have also become available. These datasets further enable novel measurement, for example, representations of a paper’s content 52 , 53 , novelty 15 , 54 and interdisciplinarity 55 .

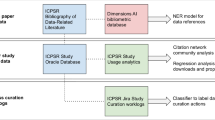

Notably, databases today capture more diverse aspects of science beyond publications, offering a richer and more encompassing view of research contexts and of researchers themselves (Fig. 1 ). For example, some datasets trace research funding to the specific publications these investments support 56 , 57 , allowing high-scale studies of the impact of funding on productivity and the return on public investment. Datasets incorporating job placements 58 , 59 , curriculum vitae 21 , 59 and scientific prizes 23 offer rich quantitative evidence on the social structure of science. Combining publication profiles with mentorship genealogies 60 , 61 , dissertations 34 and course syllabi 62 , 63 provides insights on mentoring and cultivating talent.

This figure presents commonly used data types in science of science research, information contained in each data type and examples of data sources. Datasets in the science of science research have not only grown in scale but have also expanded beyond publications to integrate upstream funding investments and downstream applications that extend beyond science itself.

Finally, today’s scope of data extends beyond science to broader aspects of society. Altmetrics 64 captures news media and social media mentions of scientific articles. Other databases incorporate marketplace uses of science, including through patents 10 , pharmaceutical clinical trials and drug approvals 65 , 66 . Policy documents 67 , 68 help us to understand the role of science in the halls of government 69 and policy making 12 , 68 .

While datasets of the modern scientific enterprise have grown exponentially, they are not without limitations. As is often the case for data-driven research, drawing conclusions from specific data sources requires scrutiny and care. Datasets are typically based on published work, which may favour easy-to-publish topics over important ones (the streetlight effect) 70 , 71 . The publication of negative results is also rare (the file drawer problem) 72 , 73 . Meanwhile, English language publications account for over 90% of articles in major data sources, with limited coverage of non-English journals 74 . Publication datasets may also reflect biases in data collection across research institutions or demographic groups. Despite the open science movement, many datasets require paid subscriptions, which can create inequality in data access. Creating more open datasets for the science of science, such as OpenAlex, may not only improve the robustness and replicability of empirical claims but also increase entry to the field.

As today’s datasets become larger in scale and continue to integrate new dimensions, they offer opportunities to unveil the inner workings and external impacts of science in new ways. They can enable researchers to reach beyond previous limitations while conducting original studies of new and long-standing questions about the sciences.

Measurement

Here we discuss prominent measurement approaches in the science of science, including their purposes and limitations.

Modern publication databases typically include data on which articles and authors cite other papers and scientists. These citation linkages have been used to engage core conceptual ideas in scientific research. Here we consider two common measures based on citation information: citation counts and knowledge flows.

First, citation counts are commonly used indicators of impact. The term ‘indicator’ implies that it only approximates the concept of interest. A citation count is defined as how many times a document is cited by subsequent documents and can proxy for the importance of research papers 75 , 76 as well as patented inventions 77 , 78 , 79 . Rather than treating each citation equally, measures may further weight the importance of each citation, for example by using the citation network structure to produce centrality 80 , PageRank 81 , 82 or Eigenfactor indicators 83 , 84 .
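
To make the distinction between raw and network-weighted citation indicators concrete, the following is a minimal sketch in plain Python. The five-paper citation graph, the function names and the damping value of 0.85 are illustrative assumptions rather than anything drawn from the cited studies, and the PageRank routine is a basic power iteration, not the implementation used in any particular database.

```python
def citation_counts(cites):
    """Count incoming citations. `cites` maps each paper to the papers it cites."""
    counts = {paper: 0 for paper in cites}
    for refs in cites.values():
        for ref in refs:
            counts[ref] = counts.get(ref, 0) + 1
    return counts


def pagerank(cites, damping=0.85, iterations=100):
    """Basic PageRank by power iteration on a citation graph (illustrative only)."""
    papers = sorted(set(cites) | {r for refs in cites.values() for r in refs})
    n = len(papers)
    rank = {p: 1.0 / n for p in papers}
    for _ in range(iterations):
        # Papers that cite nothing (dangling nodes) spread their score uniformly.
        dangling = sum(rank[p] for p in papers if not cites.get(p))
        new = {p: (1 - damping) / n + damping * dangling / n for p in papers}
        for p in papers:
            refs = cites.get(p, [])
            for ref in refs:
                # Each citing paper splits its score equally among its references.
                new[ref] += damping * rank[p] / len(refs)
        rank = new
    return rank


# Hypothetical toy graph: paper -> list of papers it cites.
toy = {"A": [], "B": ["A"], "C": ["A", "B"], "D": ["B"], "E": ["A"]}

counts = citation_counts(toy)
ranks = pagerank(toy)
for paper in sorted(toy):
    print(paper, counts[paper], round(ranks[paper], 3))
```

In this toy graph the two indicators agree on the ordering of the cited papers, but in larger networks a citation from a highly ranked paper counts for more under PageRank than under a raw count.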

Citation-based indicators have also faced criticism 84 , 85 . Citation indicators necessarily oversimplify the construct of impact, often ignoring heterogeneity in the meaning and use of a particular reference, the variations in citation practices across fields and institutional contexts, and the potential for reputation and power structures in science to influence citation behaviour 86 , 87 . Researchers have started to understand more nuanced citation behaviours ranging from negative citations 86 to citation context 47 , 88 , 89 . Understanding what a citation actually measures matters in interpreting and applying many research findings in the science of science. Evaluations relying on citation-based indicators rather than expert judgements raise questions regarding misuse 90 , 91 , 92 . Given the importance of developing indicators that can reliably quantify and evaluate science, the scientometrics community has been working to provide guidance for responsible citation practices and assessment 85 .

Second, scientists use citations to trace knowledge flows. Each citation in a paper is a link to specific previous work from which we can proxy how new discoveries draw upon existing ideas 76 , 93 and how knowledge flows between fields of science 94 , 95 , research institutions 96 , regions and nations 97 , 98 , 99 , and individuals 81 . Combinations of citation linkages can also approximate novelty 15 , disruptiveness 17 , 100 and interdisciplinarity 55 , 95 , 101 , 102 . A rapidly expanding body of work further examines citations to scientific articles from other domains (for example, patents, clinical drug trials and policy documents) to understand the applied value of science 10 , 12 , 65 , 66 , 103 , 104 , 105 .

Individuals

Analysing individual careers allows researchers to answer questions such as: How do we quantify individual scientific productivity? What is a typical career lifecycle? How are resources and credits allocated across individuals and careers? A scholar’s career can be examined through the papers they publish 30 , 31 , 106 , 107 , 108 , with attention to career progression and mobility, publication counts and citation impact, as well as grant funding 24 , 109 , 110 and prizes 111 , 112 , 113 .

Studies of individual impact focus on output, typically approximated by the number of papers a researcher publishes and citation indicators. A popular measure for individual impact is the h -index 114 , which takes both volume and per-paper impact into consideration. Specifically, a scientist is assigned the largest value h such that they have h papers that were each cited at least h times. Later studies build on the idea of the h -index and propose variants to address limitations 115 , these variants ranging from emphasizing highly cited papers in a career 116 , to field differences 117 and normalizations 118 , to the relative contribution of an individual in collaborative works 119 .
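
The definition of the h-index above translates directly into a few lines of code. The sketch below is one straightforward way to compute it; the function name and the citation counts are hypothetical.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h


# Hypothetical citation counts for one researcher's six papers.
example = [10, 8, 5, 4, 3, 0]
print(h_index(example))  # 4: four papers have at least four citations each
```

Because the measure depends only on the ranked list of citation counts, it inherits the limitations of citation counts themselves, including field differences in citation practices.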

To study dynamics in output over the lifecycle, individuals can be studied according to age, career age or the sequence of publications. A long-standing literature has investigated the relationship between age and the likelihood of outstanding achievement 28 , 106 , 111 , 120 , 121 . Recent studies further decouple the relationship between age, publication volume and per-paper citation, and measure the likelihood of producing highly cited papers in the sequence of works one produces 30 , 31 .

As simple as it sounds, representing careers using publication records is difficult. Collecting the full publication list of a researcher is the foundation for studying individuals yet remains a key challenge, requiring name disambiguation techniques to match specific works to specific researchers. Although algorithms are increasingly capable of identifying millions of career profiles 122 , they vary in accuracy and robustness. ORCID can help to alleviate the problem by offering researchers the opportunity to create, maintain and update individual profiles themselves, and it goes beyond publications to collect broader outputs and activities 123 . A second challenge is survivorship bias. Empirical studies tend to focus on careers that are long enough to afford statistical analyses, which limits the applicability of the findings to scientific careers as a whole. A third challenge is the breadth of scientists’ activities, where focusing on publications ignores other important contributions such as mentorship and teaching, service (for example, refereeing papers, reviewing grant proposals and editing journals) or leadership within their organizations. Although researchers have begun exploring these dimensions by linking individual publication profiles with genealogical databases 61 , 124 , dissertations 34 , grants 109 , curriculum vitae 21 and acknowledgements 125 , scientific careers beyond publication records remain under-studied 126 , 127 . Lastly, citation-based indicators only serve as an approximation of individual performance with similar limitations as discussed above. The scientific community has called for more appropriate practices 85 , 128 , ranging from incorporating expert assessment of research contributions to broadening the measures of impact beyond publications.

Over many decades, science has exhibited a substantial and steady shift away from solo authorship towards coauthorship, especially among highly cited works 18 , 129 , 130 . In light of this shift, a research field, the science of team science 131 , 132 , has emerged to study the mechanisms that facilitate or hinder the effectiveness of teams. Team size can be proxied by the number of coauthors on a paper, which has been shown to predict distinctive types of advance: whereas larger teams tend to develop ideas, smaller teams tend to disrupt current ways of thinking 17 . Team characteristics can be inferred from coauthors’ backgrounds 133 , 134 , 135 , allowing quantification of a team’s diversity in terms of field, age, gender or ethnicity. Collaboration networks based on coauthorship 130 , 136 , 137 , 138 , 139 offer nuanced network-based indicators to understand individual and institutional collaborations.

However, there are limitations to using coauthorship alone to study teams 132 . First, coauthorship can obscure individual roles 140 , 141 , 142 , which has prompted institutional responses to help to allocate credit, including authorship order and individual contribution statements 56 , 143 . Second, coauthorship does not reflect the complex dynamics and interactions between team members that are often instrumental for team success 53 , 144 . Third, collaborative contributions can extend beyond coauthorship in publications to include members of a research laboratory 145 or co-principal investigators (co-PIs) on a grant 146 . Initiatives such as CRediT may help to address some of these issues by recording detailed roles for each contributor 147 .

Institutions

Research institutions, such as departments, universities, national laboratories and firms, encompass wider groups of researchers and their corresponding outputs. Institutional membership can be inferred from affiliations listed on publications or patents 148 , 149 , and the output of an institution can be aggregated over all its affiliated researchers 150 . Institutional research information systems (CRIS) contain more comprehensive research outputs and activities from employees.

Some research questions consider the institution as a whole, investigating the returns to research and development investment 104 , inequality of resource allocation 22 and the flow of scientists 21 , 148 , 149 . Other questions focus on institutional structures as sources of research productivity by looking into the role of peer effects 125 , 151 , 152 , 153 , how institutional policies impact research outcomes 154 , 155 and whether interdisciplinary efforts foster innovation 55 . Institution-oriented measurement faces similar limitations as with analyses of individuals and teams, including name disambiguation for a given institution and the limited capacity of formal publication records to characterize the full range of relevant institutional outcomes. It is also unclear how to allocate credit among multiple institutions associated with a paper. Moreover, relevant institutional employees extend beyond publishing researchers: interns, technicians and administrators all contribute to research endeavours 130 .

In sum, measurements allow researchers to quantify scientific production and use across numerous dimensions, but they also raise questions of construct validity: Does the proposed metric really reflect what we want to measure? Testing the construct’s validity is important, as is understanding a construct’s limits. Where possible, using alternative measurement approaches, or qualitative methods such as interviews and surveys, can improve measurement accuracy and the robustness of findings.

Empirical methods

In this section, we review two broad categories of empirical approaches (Table 1 ), each with distinctive goals: (1) to discover, estimate and predict empirical regularities; and (2) to identify causal mechanisms. For each method, we give a concrete example to help to explain how the method works, summarize related work for interested readers, and discuss contributions and limitations.

Descriptive and predictive approaches

Empirical regularities and generalizable facts.

The discovery of empirical regularities in science has had a key role in driving conceptual developments and the directions of future research. By observing empirical patterns at scale, researchers unveil central facts that shape science and present core features that theories of scientific progress and practice must explain. For example, consider citation distributions. de Solla Price first proposed that citation distributions are fat-tailed 39 , indicating that a few papers have extremely high citations while most papers have relatively few or even no citations at all. de Solla Price proposed that citation distribution was a power law, while researchers have since refined this view to show that the distribution appears log-normal, a nearly universal regularity across time and fields 156 , 157 . The fat-tailed nature of citation distributions and its universality across the sciences has in turn sparked substantial theoretical work that seeks to explain this key empirical regularity 20 , 156 , 158 , 159 .

Empirical regularities are often surprising and can contest previous beliefs of how science works. For example, it has been shown that the age distribution of great achievements peaks in middle age across a wide range of fields 107 , 121 , 160 , rejecting the common belief that young scientists typically drive breakthroughs in science. A closer look at the individual careers also indicates that productivity patterns vary widely across individuals 29 . Further, a scholar’s highest-impact papers come at a remarkably constant rate across the sequence of their work 30 , 31 .

The discovery of empirical regularities has had important roles in shaping beliefs about the nature of science 10 , 45 , 161 , 162 , sources of breakthrough ideas 15 , 163 , 164 , 165 , scientific careers 21 , 29 , 126 , 127 , the network structure of ideas and scientists 23 , 98 , 136 , 137 , 138 , 139 , 166 , gender inequality 57 , 108 , 126 , 135 , 143 , 167 , 168 , and many other areas of interest to scientists and science institutions 22 , 47 , 86 , 97 , 102 , 105 , 134 , 169 , 170 , 171 . At the same time, care must be taken to ensure that findings are not merely artefacts due to data selection or inherent bias. To differentiate meaningful patterns from spurious ones, it is important to stress test the findings through different selection criteria or across non-overlapping data sources.

Regression analysis

When investigating correlations among variables, a classic method is regression, which estimates how one set of variables explains variation in an outcome of interest. Regression can be used to test explicit hypotheses or predict outcomes. For example, researchers have investigated whether a paper’s novelty predicts its citation impact 172 . Adding additional control variables to the regression, one can further examine the robustness of the focal relationship.

Although regression analysis is useful for hypothesis testing, it bears substantial limitations. If the question one wishes to ask concerns a ‘causal’ rather than a correlational relationship, regression is poorly suited to the task as it is impossible to control for all the confounding factors. Failing to account for such ‘omitted variables’ can bias the regression coefficient estimates and lead to spurious interpretations. Further, regression models often have low goodness of fit (small R 2 ), indicating that the variables considered explain little of the outcome variation. As regressions typically focus on a specific relationship in simple functional forms, regressions tend to emphasize interpretability rather than overall predictability. The advent of predictive approaches powered by large-scale datasets and novel computational techniques offers new opportunities for modelling complex relationships with stronger predictive power.
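
The omitted-variable problem can be illustrated with a small simulation. In the sketch below, all data are synthetic and every coefficient is an assumption chosen for illustration: the outcome depends on a focal variable `x` with a true coefficient of 1.0 and on a confounder `z` that also drives `x`. A simple regression of `y` on `x` overstates the coefficient, whereas controlling for `z` (here by residualizing both variables on `z`, which gives the same coefficient as including `z` as a control) recovers a value close to 1.0.

```python
import random


def mean(values):
    return sum(values) / len(values)


def slope(x, y):
    """Ordinary least squares slope of y on a single regressor x."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def residuals(y, z):
    """Residuals of y after a simple regression on z (with intercept)."""
    b = slope(z, y)
    a = mean(y) - b * mean(z)
    return [yi - (a + b * zi) for yi, zi in zip(y, z)]


rng = random.Random(0)  # fixed seed so the sketch is reproducible
n = 5000
z = [rng.gauss(0, 1) for _ in range(n)]                  # confounder
x = [zi + rng.gauss(0, 1) for zi in z]                   # focal variable, driven partly by z
y = [1.0 * xi + 2.0 * zi + rng.gauss(0, 1) for xi, zi in zip(x, z)]

naive = slope(x, y)                                      # omits z
adjusted = slope(residuals(x, z), residuals(y, z))       # controls for z

print("naive slope:", round(naive, 2))
print("adjusted slope:", round(adjusted, 2))
```

In real data the confounder is often unobserved, in which case no set of controls can remove the bias; this is the motivation for the causal approaches discussed later.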

Mechanistic models

Mechanistic modelling is an important approach to explaining empirical regularities, drawing from methods primarily used in physics. Such models predict macro-level regularities of a system by modelling micro-level interactions among basic elements with interpretable and modifiable formulas. While theoretical by nature, mechanistic models in the science of science are often empirically grounded, and this approach has developed together with the advent of large-scale, high-resolution data.

Simplicity is the core value of a mechanistic model. Consider, for example, why citations follow a fat-tailed distribution. de Solla Price modelled the citing behaviour as a cumulative advantage process on a growing citation network 159 and found that if the probability a paper is cited grows linearly with its existing citations, the resulting distribution would follow a power law, broadly aligned with empirical observations. The model is intentionally simplified, ignoring myriad factors. Yet the simple cumulative advantage process is by itself sufficient in explaining a power law distribution of citations. In this way, mechanistic models can help to reveal key mechanisms that can explain observed patterns.
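
A cumulative advantage process of this kind can be simulated in a few lines. The sketch below is a simplified illustration, not a reproduction of the original model: it assumes that each new paper cites exactly one earlier paper, and that the probability of being cited is proportional to a paper's existing citations plus one (the offset lets uncited papers receive a first citation). The function name, the number of papers and the seed are arbitrary choices.

```python
import random


def simulate_cumulative_advantage(n_papers, seed=0):
    """Grow a citation network in which each new paper cites one earlier paper,
    chosen with probability proportional to (existing citations + 1)."""
    rng = random.Random(seed)
    citations = [0]  # start with a single uncited paper
    urn = [0]        # paper i appears (citations[i] + 1) times in the urn
    for new_paper in range(1, n_papers):
        cited = rng.choice(urn)   # draw proportional to citations + 1
        citations[cited] += 1
        urn.append(cited)         # the cited paper gains one more ticket
        citations.append(0)
        urn.append(new_paper)     # baseline ticket for the new paper
    return citations


citations = simulate_cumulative_advantage(20000)
average = sum(citations) / len(citations)
uncited_share = sum(1 for c in citations if c == 0) / len(citations)

print("mean citations:", round(average, 2))
print("max citations:", max(citations))
print("share of uncited papers:", round(uncited_share, 2))
```

Even with this minimal rule, most simulated papers remain uncited while a handful accumulate citations far above the mean, which is the qualitative signature of a fat-tailed distribution.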

Moreover, mechanistic models can be refined as empirical evidence evolves. For example, later investigations showed that citation distributions are better characterized as log-normal 156 , 173 , prompting researchers to introduce a fitness parameter to encapsulate the inherent differences in papers’ ability to attract citations 174 , 175 . Further, older papers are less likely to be cited than expected 176 , 177 , 178 , motivating more recent models 20 to introduce an additional aging effect 179 . By combining the cumulative advantage, fitness and aging effects, one can already achieve substantial predictive power not just for the overall properties of the system but also the citation dynamics of individual papers 20 .

In addition to citations, mechanistic models have been developed to understand the formation of collaborations 136 , 180 , 181 , 182 , 183 , knowledge discovery and diffusion 184 , 185 , topic selection 186 , 187 , career dynamics 30 , 31 , 188 , 189 , the growth of scientific fields 190 and the dynamics of failure in science and other domains 178 .

At the same time, some observers have argued that mechanistic models are too simplistic to capture the essence of complex real-world problems 191 . While it has been a cornerstone for the natural sciences, representing social phenomena in a limited set of mathematical equations may miss complexities and heterogeneities that make social phenomena interesting in the first place. Such concerns are not unique to the science of science, as they represent a broader theme in computational social sciences 192 , 193 , ranging from social networks 194 , 195 to human mobility 196 , 197 to epidemics 198 , 199 . Other observers have questioned the practical utility of mechanistic models and whether they can be used to guide decisions and devise actionable policies. Nevertheless, despite these limitations, several complex phenomena in the science of science are well captured by simple mechanistic models, showing a high degree of regularity beneath complex interacting systems and providing powerful insights about the nature of science. Mixing such modelling with other methods could be particularly fruitful in future investigations.

Machine learning

The science of science seeks in part to forecast promising directions for scientific research 7 , 44 . In recent years, machine learning methods have substantially advanced predictive capabilities 200 , 201 and are playing increasingly important parts in the science of science. In contrast to the previous methods, machine learning does not emphasize hypotheses or theories. Rather, it leverages complex relationships in data and optimizes goodness of fit to make predictions and categorizations.

Traditional machine learning models include supervised, semi-supervised and unsupervised learning. The model choice depends on data availability and the research question, ranging from supervised models for citation prediction 202 , 203 to unsupervised models for community detection 204 . Take for example mappings of scientific knowledge 94 , 205 , 206 . The unsupervised method applies network clustering algorithms to map the structures of science. Related visualization tools make sense of clusters from the underlying network, allowing observers to see the organization, interactions and evolution of scientific knowledge. More recently, supervised learning, and deep neural networks in particular, have witnessed especially rapid developments 207 . Neural networks can generate high-dimensional representations of unstructured data such as images and texts, which encode complex properties difficult for human experts to perceive.

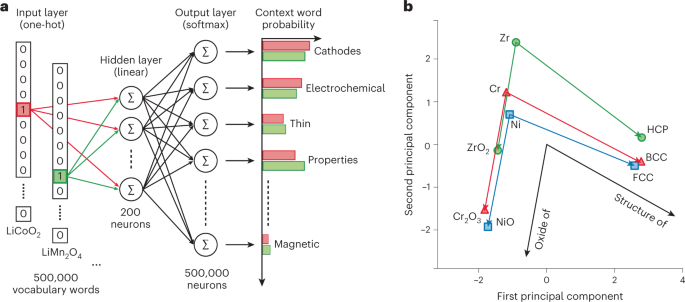

Take text analysis as an example. A recent study 52 utilizes 3.3 million paper abstracts in materials science to predict the thermoelectric properties of materials. The intuition is that the words currently used to describe a material may predict its hitherto undiscovered properties (Fig. 2 ). Compared with a random material, the materials predicted by the model are eight times more likely to be reported as thermoelectric in the next 5 years, suggesting that machine learning has the potential to substantially speed up knowledge discovery, especially as data continue to grow in scale and scope. Indeed, predicting the direction of new discoveries represents one of the most promising avenues for machine learning models, with neural networks being applied widely to biology 208 , physics 209 , 210 , mathematics 211 , chemistry 212 , medicine 213 and clinical applications 214 . Neural networks also offer a quantitative framework to probe the characteristics of creative products ranging from scientific papers 53 , journals 215 , organizations 148 , to paintings and movies 32 . Neural networks can also help to predict the reproducibility of papers from a variety of disciplines at scale 53 , 216 .

This figure illustrates the word2vec skip-gram methods 52 , where the goal is to predict useful properties of materials using previous scientific literature. a , The architecture and training process of the word2vec skip-gram model, where the 3-layer, fully connected neural network learns the 200-dimensional representation (hidden layer) from the sparse vector for each word and its context in the literature (input layer). b , The top two principal components of the word embedding. Materials with similar features are close in the 2D space, allowing prediction of a material’s properties. Different targeted words are shown in different colours. Reproduced with permission from ref. 52 , Springer Nature Ltd.

While machine learning can offer high predictive accuracy, successful applications to the science of science face challenges, particularly regarding interpretability. Researchers may value transparent and interpretable findings for how a given feature influences an outcome, rather than a black-box model. The lack of interpretability also raises concerns about bias and fairness. In predicting reproducible patterns from data, machine learning models inevitably include and reproduce biases embedded in these data, often in non-transparent ways. The fairness of machine learning 217 is heavily debated in applications ranging from the criminal justice system to hiring processes. Effective and responsible use of machine learning in the science of science therefore requires thoughtful partnership between humans and machines 53 to build a reliable system accessible to scrutiny and modification.

Causal approaches

The preceding methods can reveal core facts about the workings of science and develop predictive capacity. Yet, they fail to capture causal relationships, which are particularly useful in assessing policy interventions. For example, how can we test whether a science policy boosts or hinders the performance of individuals, teams or institutions? The overarching idea of causal approaches is to construct some counterfactual world where two groups are identical to each other except that one group experiences a treatment that the other group does not.

Towards causation

Before engaging in causal approaches, it is useful to first consider the interpretative challenges of observational data. As observational data emerge from mechanisms that are not fully known or measured, an observed correlation may be driven by underlying forces that were not accounted for in the analysis. This challenge makes causal inference fundamentally difficult in observational data. An awareness of this issue is the first step in confronting it. It further motivates intermediate empirical approaches, including the use of matching strategies and fixed effects, that can help to confront (although not fully eliminate) the inference challenge. We first consider these approaches before turning to more fully causal methods.

Matching. Matching utilizes rich information to construct a control group that is similar to the treatment group on as many observable characteristics as possible before the treatment group is exposed to the treatment. Inferences can then be made by comparing the treatment and the matched control groups. Exact matching applies to categorical values, such as country, gender, discipline or affiliation 35 , 218 . Coarsened exact matching considers percentile bins of continuous variables and matches observations in the same bin 133 . Propensity score matching estimates the probability of receiving the ‘treatment’ on the basis of the controlled variables and uses the estimates to match treatment and control groups, which reduces the matching task from comparing the values of multiple covariates to comparing a single value 24 , 219 . Dynamic matching is useful for longitudinally matching variables that change over time 220 , 221 .
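
As a rough illustration of how exact and coarsened exact matching operate together, the sketch below matches on field exactly and on career age in five-year bins, then averages the treated-minus-control outcome difference across strata that contain both groups. The records, the bin width and the weighting by number of treated units are all assumptions made for this example.

```python
from collections import defaultdict


def coarsen(career_age, width=5):
    """Map a continuous covariate to a bin index (coarsening)."""
    return career_age // width


def matched_difference(units, width=5):
    """Average treated-minus-control outcome gap within matched strata,
    weighted by the number of treated units in each stratum."""
    strata = defaultdict(lambda: {"treated": [], "control": []})
    for u in units:
        key = (u["field"], coarsen(u["career_age"], width))  # exact on field, coarsened on age
        strata[key]["treated" if u["treated"] else "control"].append(u["outcome"])

    weighted_sum, total_weight = 0.0, 0
    for groups in strata.values():
        if groups["treated"] and groups["control"]:  # drop strata without a match
            t = sum(groups["treated"]) / len(groups["treated"])
            c = sum(groups["control"]) / len(groups["control"])
            weighted_sum += (t - c) * len(groups["treated"])
            total_weight += len(groups["treated"])
    return weighted_sum / total_weight if total_weight else None


# Hypothetical records; "outcome" could be, for example, papers published after treatment.
units = [
    {"field": "physics", "career_age": 3, "treated": True, "outcome": 12},
    {"field": "physics", "career_age": 4, "treated": False, "outcome": 10},
    {"field": "physics", "career_age": 12, "treated": True, "outcome": 20},
    {"field": "physics", "career_age": 11, "treated": False, "outcome": 17},
    {"field": "physics", "career_age": 14, "treated": False, "outcome": 19},
    {"field": "biology", "career_age": 2, "treated": True, "outcome": 30},
    {"field": "biology", "career_age": 22, "treated": False, "outcome": 50},
]

treated = [u["outcome"] for u in units if u["treated"]]
control = [u["outcome"] for u in units if not u["treated"]]
naive_difference = sum(treated) / len(treated) - sum(control) / len(control)
matched = matched_difference(units)

print("naive difference:", round(naive_difference, 2))
print("matched difference:", matched)
```

In this toy data the naive comparison is negative because the controls include a much more senior biologist, whereas the matched comparison, which discards units without a counterpart in the same stratum, is positive. Matching can only balance the characteristics that are observed.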

Fixed effects. Fixed effects are a powerful and now standard tool in controlling for confounders. A key requirement for using fixed effects is that there are multiple observations on the same subject or entity (person, field, institution and so on) 222 , 223 , 224 . The fixed effect works as a dummy variable that accounts for the role of any fixed characteristic of that entity. Consider the finding where gender-diverse teams produce higher-impact papers than same-gender teams do 225 . A confounder may be that individuals who tend to write high-impact papers may also be more likely to work in gender-diverse teams. By including individual fixed effects, one accounts for any fixed characteristics of individuals (such as IQ, cultural background or previous education) that might drive the relationship of interest.
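
One common way to implement entity fixed effects is the within transformation: subtract each entity's own mean from its observations and then run the regression on the demeaned data. The sketch below uses invented numbers in which one scientist has both a higher baseline impact and more diverse teams, so the pooled slope is inflated while the within-person slope recovers the coefficient of 5 that was built into the toy data.

```python
from collections import defaultdict


def slope(x, y):
    """Ordinary least squares slope of y on a single regressor x."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def within_demean(values, groups):
    """Subtract each group's mean from its own observations."""
    totals, counts = defaultdict(float), defaultdict(int)
    for v, g in zip(values, groups):
        totals[g] += v
        counts[g] += 1
    return [v - totals[g] / counts[g] for v, g in zip(values, groups)]


# Hypothetical panel: three papers each for two scientists.
person = ["A", "A", "A", "B", "B", "B"]
diversity = [0.6, 0.8, 0.7, 0.1, 0.3, 0.2]      # team gender diversity (assumed measure)
baseline = {"A": 10.0, "B": 2.0}                 # fixed individual characteristic
impact = [baseline[p] + 5.0 * d for p, d in zip(person, diversity)]

pooled = slope(diversity, impact)
fixed_effects = slope(within_demean(diversity, person), within_demean(impact, person))

print("pooled slope:", round(pooled, 2))
print("fixed-effects slope:", round(fixed_effects, 2))
```

The within transformation removes anything constant for a given person, which is also why it cannot address confounders that vary over time within that person.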

In sum, matching and fixed effects methods reduce potential sources of bias in interpreting relationships between variables. Yet, confounders may persist in these studies. For instance, fixed effects do not control for unobserved factors that change with time within the given entity (for example, access to funding or new skills). Identifying causal effects convincingly will then typically require distinct research methods that we turn to next.

Quasi-experiments

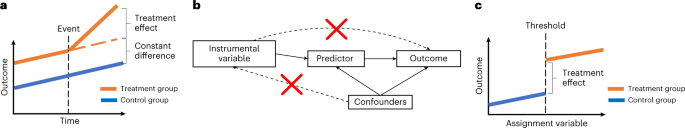

Researchers in economics and other fields have developed a range of quasi-experimental methods to construct treatment and control groups. The key idea here is exploiting randomness from external events that differentially expose subjects to a particular treatment. Here we review three quasi-experimental methods: difference-in-differences, instrumental variables and regression discontinuity (Fig. 3 ).

a – c , This figure presents illustrations of ( a ) difference-in-differences, ( b ) instrumental variables and ( c ) regression discontinuity methods. The solid line in b represents causal links and the dashed line represents the relationships that are not allowed, if the IV method is to produce causal inference.

Difference-in-differences. Difference-in-differences regression (DiD) investigates the effect of an unexpected event, comparing the affected group (the treated group) with an unaffected group (the control group). The control group is intended to provide the counterfactual path, that is, what would have happened were it not for the unexpected event. Ideally, the treated and control groups are on virtually identical paths before the treatment event, but DiD can also work if the groups are on parallel paths (Fig. 3a ). For example, one study 226 examines how the premature death of superstar scientists affects the productivity of their previous collaborators. The control group consists of collaborators of superstars who did not die in the time frame. The two groups do not show significant differences in publications before a death event, yet upon the death of a star scientist, the treated collaborators on average experience a 5–8% decline in their quality-adjusted publication rates compared with the control group. DiD has wide applicability in the science of science, having been used to analyse the causal effects of grant design 24 , access costs to previous research 155 , 227 , university technology transfer policies 154 , intellectual property 228 , citation practices 229 , evolution of fields 221 and the impacts of paper retractions 230 , 231 , 232 . The DiD literature has grown especially rapidly in the field of economics, with substantial recent refinements 233 , 234 .
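In its simplest two-group, two-period form, the DiD estimate is the change in the treated group minus the change in the control group. The sketch below uses invented publication counts (not data from the study cited above) and a hypothetical helper `did_estimate`; it assumes the parallel-paths condition holds.

```python
def mean(values):
    """Arithmetic mean of a non-empty sequence."""
    return sum(values) / len(values)


def did_estimate(treated_pre, treated_post, control_pre, control_post):
    """Two-by-two difference-in-differences: change in treated minus change in control."""
    treated_change = mean(treated_post) - mean(treated_pre)
    control_change = mean(control_post) - mean(control_pre)
    return treated_change - control_change


# Hypothetical yearly publication counts, before and after the event.
treated_pre = [10.0, 12.0, 11.0]    # collaborators who later lose a co-author
treated_post = [9.0, 11.0, 10.0]
control_pre = [8.0, 9.0, 10.0]      # collaborators who do not
control_post = [9.0, 10.0, 11.0]

effect = did_estimate(treated_pre, treated_post, control_pre, control_post)
print(effect)
```

The control group's change stands in for what the treated group would have experienced without the event, so differences in pre-event levels between the groups cancel out, while differences in trends do not.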

Instrumental variables. Another quasi-experimental approach utilizes ‘instrumental variables’ (IV). The goal is to determine the causal influence of some feature X on some outcome Y by using a third, instrumental variable. This instrumental variable is a quasi-random event that induces variation in X and, except for its impact through X , has no other effect on the outcome Y (Fig. 3b ). For example, consider a study of astronomy that seeks to understand how telescope time affects career advancement 235 . Here, one cannot simply look at the correlation between telescope time and career outcomes because many confounds (such as talent or grit) may influence both telescope time and career opportunities. Now consider the weather as an instrumental variable. Cloudy weather will, at random, reduce an astronomer’s observational time. Yet, the weather on particular nights is unlikely to correlate with a scientist’s innate qualities. The weather can then provide an instrumental variable to reveal a causal relationship between telescope time and career outcomes. Instrumental variables have been used to study local peer effects in research 151 , the impact of gender composition in scientific committees 236 , patents on future innovation 237 and taxes on inventor mobility 238 .
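The telescope example can be mimicked with simulated data. In the sketch below every number and variable name is an assumption made for illustration: `talent` is an unobserved confounder, `clear_sky` is the instrument and is generated independently of talent, and the outcome depends on the instrument only through observing hours. With a single instrument, the IV estimate reduces to the ratio cov(Z, Y) / cov(Z, X).

```python
import random


def covariance(a, b):
    """Population covariance of two equal-length sequences."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    return sum((p - mean_a) * (q - mean_b) for p, q in zip(a, b)) / len(a)


rng = random.Random(1)   # fixed seed so the sketch is reproducible
TRUE_EFFECT = 0.5        # assumed causal effect of observing hours on the outcome

weather, hours, career = [], [], []
for _ in range(20000):
    talent = rng.gauss(0, 1)      # unobserved confounder
    clear_sky = rng.gauss(0, 1)   # instrument: independent of talent by construction
    h = clear_sky + talent + rng.gauss(0, 1)               # X: telescope time
    c = TRUE_EFFECT * h + 1.5 * talent + rng.gauss(0, 1)   # Y: career outcome
    weather.append(clear_sky)
    hours.append(h)
    career.append(c)

naive = covariance(hours, career) / covariance(hours, hours)   # confounded by talent
iv = covariance(weather, career) / covariance(weather, hours)  # uses only weather-driven variation
print(f"naive: {naive:.2f}  IV: {iv:.2f}  truth: {TRUE_EFFECT}")
```

The naive regression slope is pulled away from the assumed effect by talent, while the IV ratio uses only the variation in observing time that the weather induces. The estimate is only as credible as the assumption that the instrument affects the outcome through no other channel.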

Regression discontinuity. In regression discontinuity, policies with an arbitrary threshold for receiving some benefit can be used to construct treatment and control groups (Fig. 3c ). Take the funding paylines for grant proposals as an example. Proposals with scores increasingly close to the payline are increasingly similar in both their observable and unobservable characteristics, yet only those projects with scores above the payline receive the funding. For example, a study 110 examines the effect of winning an early-career grant on the probability of winning a later, mid-career grant. The probability has a discontinuous jump across the initial grant’s payline, providing the treatment and control groups needed to estimate the causal effect of receiving a grant. This example utilizes the ‘sharp’ regression discontinuity that assumes treatment status to be fully determined by the cut-off. If we assume treatment status is only partly determined by the cut-off, we can use ‘fuzzy’ regression discontinuity designs. Here the probability of receiving a grant is used to estimate the future outcome 11 , 110 , 239 , 240 , 241 .
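A sharp regression discontinuity can be sketched by fitting a separate line on each side of the cut-off and comparing the two fitted values at the cut-off itself. The simulation below is an illustration under stated assumptions, not a reconstruction of any cited analysis: the scores, the continuous outcome, the bandwidth and the helper names (`fit_line`, `sharp_rd`) are all hypothetical.

```python
import random


def fit_line(x, y):
    """Least-squares fit of y on x; returns (intercept, slope)."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def sharp_rd(scores, outcomes, cutoff, bandwidth):
    """Gap between the two local linear fits, evaluated at the cut-off."""
    below = [(s - cutoff, o) for s, o in zip(scores, outcomes)
             if cutoff - bandwidth <= s < cutoff]
    above = [(s - cutoff, o) for s, o in zip(scores, outcomes)
             if cutoff <= s <= cutoff + bandwidth]
    intercept_below, _ = fit_line(*zip(*below))
    intercept_above, _ = fit_line(*zip(*above))
    return intercept_above - intercept_below


rng = random.Random(2)   # fixed seed so the sketch is reproducible
TRUE_JUMP = 1.0          # assumed effect of funding on the later outcome
PAYLINE = 0.0            # hypothetical cut-off on a centred review score

scores, outcomes = [], []
for _ in range(20000):
    score = rng.uniform(-1, 1)
    funded = score >= PAYLINE    # sharp design: the score alone decides funding
    outcome = 0.3 + 0.2 * score + (TRUE_JUMP if funded else 0.0) + rng.gauss(0, 0.5)
    scores.append(score)
    outcomes.append(outcome)

estimate = sharp_rd(scores, outcomes, PAYLINE, bandwidth=0.5)
print(f"estimated jump: {estimate:.2f}  truth: {TRUE_JUMP}")
```

The bandwidth controls a trade-off: a narrow window keeps the compared proposals similar but leaves few observations, while a wide window adds data at the cost of relying more heavily on the assumed linear shape.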

Although quasi-experiments are powerful tools, they face their own limitations. First, these approaches identify causal effects within a specific context and often engage small numbers of observations. How representative the samples are for broader populations or contexts is typically left as an open question. Second, the validity of the causal design is typically not ironclad. Researchers usually conduct different robustness checks to verify whether observable confounders differ significantly between the treated and control groups before treatment. However, unobservable features may still differ between treatment and control groups. The quality of instrumental variables, and the specific claim that they have no effect on the outcome except through the variable of interest, is also difficult to assess. Ultimately, researchers must rely partly on judgement to tell whether appropriate conditions are met for causal inference.

This section emphasized popular econometric approaches to causal inference. Other empirical approaches, such as graphical causal modelling 242 , 243 , also represent an important stream of work on assessing causal relationships. Such approaches usually represent causation as a directed acyclic graph, with nodes as variables and arrows between them as suspected causal relationships. In the science of science, the directed acyclic graph approach has been applied to quantify the causal effect of journal impact factor 244 and gender or racial bias 245 on citations. Graphical causal modelling has also triggered discussions on strengths and weaknesses compared to the econometrics methods 246 , 247 .
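As a minimal illustration of the data structure involved, a causal graph can be stored as a mapping from each variable to its direct causes. The three-node graph below is a hypothetical example, not the model used in the cited studies: the node `paper_quality` and all arrows are assumptions. The sketch checks that the graph is acyclic with Python's standard `graphlib` module (Python 3.9 or later) and lists the direct common causes of a treatment and an outcome, which is only a simplified stand-in for a full confounder analysis.

```python
from graphlib import CycleError, TopologicalSorter

# Each variable maps to the set of its direct causes (parents). Hypothetical graph.
parents = {
    "paper_quality": set(),
    "journal_impact_factor": {"paper_quality"},
    "citations": {"paper_quality", "journal_impact_factor"},
}


def is_acyclic(graph):
    """Return True if the parent mapping describes a directed acyclic graph."""
    try:
        list(TopologicalSorter(graph).static_order())
    except CycleError:
        return False
    return True


def direct_common_causes(graph, treatment, outcome):
    """Variables that point directly at both the treatment and the outcome."""
    return graph[treatment] & graph[outcome]


# Causes always come before their effects in a topological order.
order = list(TopologicalSorter(parents).static_order())
common = direct_common_causes(parents, "journal_impact_factor", "citations")
print(order)
print(common)
```

Writing the graph down explicitly makes the assumed causal structure open to inspection and criticism, which is part of the appeal of the graphical approach.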

Experiments

In contrast to quasi-experimental approaches, laboratory and field experiments conduct direct randomization in assigning treatment and control groups. These methods engage explicitly in the data generation process, manipulating interventions to observe counterfactuals. These experiments are crafted to study mechanisms of specific interest and, by designing the experiment and formally randomizing, can produce especially rigorous causal inference.

Laboratory experiments. Laboratory experiments build counterfactual worlds in well-controlled laboratory environments. Researchers randomly assign participants to the treatment or control group and then manipulate the laboratory conditions to observe different outcomes in the two groups. For example, consider laboratory experiments on team performance and gender composition 144 , 248 . The researchers randomly assign participants into groups to perform tasks such as solving puzzles or brainstorming. Teams with a higher proportion of women are found to perform better on average, offering evidence that gender diversity is causally linked to team performance. Laboratory experiments can allow researchers to test forces that are otherwise hard to observe, such as how competition influences creativity 249 . Laboratory experiments have also been used to evaluate how journal impact factors shape scientists’ perceptions of rewards 250 and gender bias in hiring 251 .

Laboratory experiments allow for precise control of settings and procedures to isolate causal effects of interest. However, participants may behave differently in synthetic environments than in real-world settings, raising questions about the generalizability and replicability of the results 252 , 253 , 254 . To assess causal effects in real-world settings, researchers use randomized controlled trials.

Randomized controlled trials. A randomized controlled trial (RCT), or field experiment, is a staple for causal inference across a wide range of disciplines. RCTs randomly assign participants into the treatment and control conditions 255 and can be used not only to assess mechanisms but also to test real-world interventions such as policy change. The science of science has witnessed growing use of RCTs. For instance, a field experiment 146 investigated whether lower search costs for collaborators increased collaboration in grant applications. The authors randomly allocated principal investigators to face-to-face sessions in a medical school, and then measured participants’ chance of writing a grant proposal together. RCTs have also offered rich causal insights on peer review 256 , 257 , 258 , 259 , 260 and gender bias in science 261 , 262 , 263 .
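The value of random assignment can be seen in a short simulation. The sketch below is illustrative only: the sample size, the effect size and the unobserved `trait` are assumptions. Because assignment is decided by a shuffle rather than by the participants, the trait is balanced across arms on average, and a simple difference in mean outcomes estimates the treatment effect.

```python
import random


def mean(values):
    """Arithmetic mean of a non-empty sequence."""
    return sum(values) / len(values)


rng = random.Random(3)   # fixed seed so the sketch is reproducible
TRUE_EFFECT = 0.3        # assumed effect of the intervention on the outcome
N = 20000                # hypothetical number of participants

# Randomize: exactly half of the participants receive the treatment.
assignment = [True] * (N // 2) + [False] * (N // 2)
rng.shuffle(assignment)

treated_outcomes, control_outcomes = [], []
treated_traits, control_traits = [], []
for treated in assignment:
    trait = rng.gauss(0, 1)   # unobserved characteristic that also drives the outcome
    outcome = (TRUE_EFFECT if treated else 0.0) + trait + rng.gauss(0, 1)
    if treated:
        treated_outcomes.append(outcome)
        treated_traits.append(trait)
    else:
        control_outcomes.append(outcome)
        control_traits.append(trait)

estimate = mean(treated_outcomes) - mean(control_outcomes)
imbalance = mean(treated_traits) - mean(control_traits)
print(f"estimated effect: {estimate:.2f}  trait imbalance: {imbalance:.2f}")
```

No adjustment for the trait is needed here, in contrast to observational designs, because randomization breaks any link between the trait and treatment status.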

While powerful, RCTs are difficult to conduct in the science of science, mainly for two reasons. The first concerns potential risks in a policy intervention. For instance, while randomizing funding across individuals could generate crucial causal insights for funders, it may also inadvertently harm participants’ careers 264 . Second, key questions in the science of science often require a long time horizon to trace outcomes, which makes RCTs costly. It also makes findings harder to replicate. A relative advantage of the quasi-experimental methods discussed earlier is that one can identify causal effects over potentially long periods of time in the historical record. On the other hand, quasi-experiments must be found as opposed to designed, and they often are not available for many questions of interest. While the best approaches are context dependent, a growing community of researchers is building platforms to facilitate RCTs for the science of science, aiming to lower their costs and increase their scale. Performing RCTs in partnership with science institutions can also contribute to timely, policy-relevant research that may substantially improve science decision-making and investments.

Research in the science of science has been empowered by the growth of high-scale data, new measurement approaches and an expanding range of empirical methods. These tools provide enormous capacity to test conceptual frameworks about science, discover factors impacting scientific productivity, predict key scientific outcomes and design policies that better facilitate future scientific progress. A careful appreciation of empirical techniques can help researchers to choose effective tools for questions of interest and propel the field. A better and broader understanding of these methodologies may also build bridges across diverse research communities, facilitating communication and collaboration, and better leveraging the value of diverse perspectives. The science of science is about turning scientific methods on the nature of science itself. The fruits of this work, with time, can guide researchers and research institutions to greater progress in discovery and understanding across the landscape of scientific inquiry.

Bush, V. Science–the Endless Frontier: A Report to the President on a Program for Postwar Scientific Research (National Science Foundation, 1990).

Mokyr, J. The Gifts of Athena (Princeton Univ. Press, 2011).

Jones, B. F. in Rebuilding the Post-Pandemic Economy (eds Kearney, M. S. & Ganz, A.) 272–310 (Aspen Institute Press, 2021).

Wang, D. & Barabási, A.-L. The Science of Science (Cambridge Univ. Press, 2021).

Fortunato, S. et al. Science of science. Science 359 , eaao0185 (2018).

Azoulay, P. et al. Toward a more scientific science. Science 361 , 1194–1197 (2018).

Clauset, A., Larremore, D. B. & Sinatra, R. Data-driven predictions in the science of science. Science 355 , 477–480 (2017).

Zeng, A. et al. The science of science: from the perspective of complex systems. Phys. Rep. 714 , 1–73 (2017).

Lin, Z., Yin, Y., Liu, L. & Wang, D. SciSciNet: a large-scale open data lake for the science of science research. Sci. Data https://doi.org/10.1038/s41597-023-02198-9 (2023).

Ahmadpoor, M. & Jones, B. F. The dual frontier: patented inventions and prior scientific advance. Science 357 , 583–587 (2017).

Azoulay, P., Graff Zivin, J. S., Li, D. & Sampat, B. N. Public R&D investments and private-sector patenting: evidence from NIH funding rules. Rev. Econ. Stud. 86 , 117–152 (2019).

Yin, Y., Dong, Y., Wang, K., Wang, D. & Jones, B. F. Public use and public funding of science. Nat. Hum. Behav. 6 , 1344–1350 (2022).

Merton, R. K. The Sociology of Science: Theoretical and Empirical Investigations (Univ. Chicago Press, 1973).

Kuhn, T. The Structure of Scientific Revolutions (Princeton Univ. Press, 2021).

Uzzi, B., Mukherjee, S., Stringer, M. & Jones, B. Atypical combinations and scientific impact. Science 342 , 468–472 (2013).

Zuckerman, H. Scientific Elite: Nobel Laureates in the United States (Transaction Publishers, 1977).

Wu, L., Wang, D. & Evans, J. A. Large teams develop and small teams disrupt science and technology. Nature 566 , 378–382 (2019).

Wuchty, S., Jones, B. F. & Uzzi, B. The increasing dominance of teams in production of knowledge. Science 316 , 1036–1039 (2007).

Foster, J. G., Rzhetsky, A. & Evans, J. A. Tradition and innovation in scientists’ research strategies. Am. Sociol. Rev. 80 , 875–908 (2015).

Wang, D., Song, C. & Barabási, A.-L. Quantifying long-term scientific impact. Science 342 , 127–132 (2013).

Clauset, A., Arbesman, S. & Larremore, D. B. Systematic inequality and hierarchy in faculty hiring networks. Sci. Adv. 1 , e1400005 (2015).

Ma, A., Mondragón, R. J. & Latora, V. Anatomy of funded research in science. Proc. Natl Acad. Sci. USA 112 , 14760–14765 (2015).

Ma, Y. & Uzzi, B. Scientific prize network predicts who pushes the boundaries of science. Proc. Natl Acad. Sci. USA 115 , 12608–12615 (2018).

Azoulay, P., Graff Zivin, J. S. & Manso, G. Incentives and creativity: evidence from the academic life sciences. RAND J. Econ. 42 , 527–554 (2011).

Schor, S. & Karten, I. Statistical evaluation of medical journal manuscripts. JAMA 195 , 1123–1128 (1966).

Platt, J. R. Strong inference: certain systematic methods of scientific thinking may produce much more rapid progress than others. Science 146 , 347–353 (1964).

Ioannidis, J. P. Why most published research findings are false. PLoS Med. 2 , e124 (2005).

Simonton, D. K. Career landmarks in science: individual differences and interdisciplinary contrasts. Dev. Psychol. 27 , 119 (1991).

Way, S. F., Morgan, A. C., Clauset, A. & Larremore, D. B. The misleading narrative of the canonical faculty productivity trajectory. Proc. Natl Acad. Sci. USA 114 , E9216–E9223 (2017).

Sinatra, R., Wang, D., Deville, P., Song, C. & Barabási, A.-L. Quantifying the evolution of individual scientific impact. Science 354 , aaf5239 (2016).

Liu, L. et al. Hot streaks in artistic, cultural, and scientific careers. Nature 559 , 396–399 (2018).

Liu, L., Dehmamy, N., Chown, J., Giles, C. L. & Wang, D. Understanding the onset of hot streaks across artistic, cultural, and scientific careers. Nat. Commun. 12 , 5392 (2021).

Squazzoni, F. et al. Peer review and gender bias: a study on 145 scholarly journals. Sci. Adv. 7 , eabd0299 (2021).

Hofstra, B. et al. The diversity–innovation paradox in science. Proc. Natl Acad. Sci. USA 117 , 9284–9291 (2020).